Last updated on November 3rd, 2025 at 05:16 pm

Other articles in the series include:

How to Turn Music Data into Actionable Insights: This is an overview of the Data Pyramid and how it can be used in the music industry.

An Overview of Data in The Music Industry: This article gives a list of all types of metadata in the music industry.

Making Sense of Music Data – Data Visualizations: This article explores data visualizations as the second step of the pyramid and gives examples of visualizations in the music industry.

Benchmarking in the Music Industry – Knowledge Layer of the Data Pyramid: This article deals with Knowledge and how it is used to benchmark performance and set expectations.

Intelligence can be described as the ability to choose one course of action out of millions of alternatives, based on knowledge of how each choice might affect the outcome.

Intelligence can be generated by a machine. A self-driving car, for example, is a form of intelligence that scans the environment and predicts the course of action for the next section of the road. In the music industry, intelligent decisions are still, for the most part, made by humans who examine information, read graphs and charts, memorize past outcomes, and monitor real-time data. In this article, we’ll explore some of the emerging intelligence technology in the music field, so keep reading to find out more.

1. Recommendation systems that learn and adapt effectively to individual users’ preferences

Recommendation systems already use some sort of prescriptive analytics when they make a selection of songs based on past user behavior. Recommendation systems can also take into account the sequence of songs and context that affect the enjoyment level of the playlist as a whole. As previously played songs influence the perception of the next song, the playlist can be adjusted accordingly. The ability to prescribe a listening experience by recommendation systems is, perhaps, the most common and well-developed example of intelligence in the music industry.
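To make the idea of sequence-aware playlists more concrete, here is a minimal sketch in Python. It is not how any particular streaming service works. The `Track` fields, the `affinity` and `energy` scores, and the `smoothness` weight are all assumptions made up for illustration. The sketch greedily picks the next song by combining how much the user is expected to like it with how well it follows the previous song.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Track:
    title: str
    energy: float    # hypothetical 0..1 energy score of the track
    affinity: float  # hypothetical 0..1 score derived from past user behavior


def order_playlist(candidates, start_energy=0.5, smoothness=0.5):
    """Greedily order tracks so that each pick balances user affinity
    against the size of the energy jump from the previous track."""
    remaining = list(candidates)
    playlist = []
    previous_energy = start_energy
    while remaining:
        def score(track):
            # A large jump in energy from the previous track lowers the score.
            transition_penalty = abs(track.energy - previous_energy)
            return track.affinity - smoothness * transition_penalty

        best = max(remaining, key=score)
        playlist.append(best)
        remaining.remove(best)
        previous_energy = best.energy
    return playlist


tracks = [
    Track("A", energy=0.9, affinity=0.80),
    Track("B", energy=0.5, affinity=0.70),
    Track("C", energy=0.2, affinity=0.75),
]
print([t.title for t in order_playlist(tracks, smoothness=1.0)])
```

With `smoothness=0`, the order depends on affinity alone. Raising it makes the previously played song matter more, which is the effect described above.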

Additionally, recommendation systems can prescribe music that directly affects user behavior. One project, for example, uses data from running exercises, predicts future running performance, and recommends songs that maximize running results. It does so continuously, as the system stores and learns from each new running exercise record.
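The loop of storing records, predicting performance, and recommending songs could look roughly like the sketch below. It is an assumption-heavy toy, not the method of the project mentioned above: the class name, the use of pace in minutes per kilometer, and the running-mean prediction are all hypothetical choices made for illustration.

```python
from collections import defaultdict


class RunningMusicRecommender:
    """Toy recommender: predicts pace per song as the mean of past runs
    and recommends the song with the fastest predicted pace."""

    def __init__(self):
        self._total_pace = defaultdict(float)  # sum of paces (min/km) per song
        self._runs = defaultdict(int)          # number of recorded runs per song

    def record_run(self, song, pace_min_per_km):
        # Each new exercise record updates the stored statistics.
        self._total_pace[song] += pace_min_per_km
        self._runs[song] += 1

    def predicted_pace(self, song):
        if self._runs.get(song, 0) == 0:
            raise KeyError(f"no runs recorded for {song!r}")
        return self._total_pace[song] / self._runs[song]

    def recommend(self):
        if not self._runs:
            raise ValueError("no runs recorded yet")
        # A lower pace value means faster running.
        return min(self._runs, key=self.predicted_pace)


recommender = RunningMusicRecommender()
recommender.record_run("Song A", 6.0)
recommender.record_run("Song B", 5.5)
print(recommender.recommend())
```

Because every call to `record_run` changes the stored averages, the recommendation can change after each workout, which mirrors the continuous learning described above.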

To learn more about different types of recommendation systems, check out the article How Do AI Music Recommendation Systems Work.


2. Automatic playlist generation based on context

Generating music or suggesting existing music based on context is the music industry's analog of a self-driving car. The music adapts to the listening situation to amplify the current experience. In video games, for instance, music adjusts to the plot as the user progresses through the levels of the game. You can read more on that in our article on the Omniphony engine, which explores adaptive soundtracks and music context in game development.

Such systems are also used for location-aware music recommendations at travel destinations (where music is chosen based on the sightseeing place you visit) and in computer vision systems for museum experiences (where the artwork dictates the audio choice). In these cases, the constantly changing environment serves as the basis for recommendations.

Another example of intelligence in this field is generating music in the metaverse, a virtual environment that includes augmented reality. The metaverse can be accessed through Oculus headsets or a smartphone. Virtual streams and concerts are already conducted in the metaverse, so it is only a matter of time until intelligence is used to deliver curated immersive experiences that adjust to the audience’s needs.

3. Prescriptive curatorship – What’s going to be hot next?

Prescriptive curatorship entails an understanding of how up-and-coming artists and tracks will perform and who is more likely to break in the near future. In the past, platforms like Hype Machine indexed music sites and helped find the best new music.

Nowadays, there are systems that can predict future hits and breaking artists automatically. For example, Spotify is developing algorithms that can predict future-breaking artists. The algorithm takes into account the preferences of the early adopters and then determines whether the artist can be considered breaking. This data can then be used to sign deals with the artist at a very early stage.
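As a rough illustration of the early-adopter idea, the Python sketch below flags an artist as breaking when a large enough share of their listeners are known early adopters. This is not Spotify's algorithm. The function names, the `min_share` and `min_listeners` thresholds, and the user IDs are hypothetical, and a real system would need a way to identify early adopters in the first place.

```python
def early_adopter_share(listeners, early_adopters):
    """Fraction of an artist's listeners who are known early adopters."""
    listeners = set(listeners)
    if not listeners:
        return 0.0
    return len(listeners & set(early_adopters)) / len(listeners)


def is_breaking(listeners, early_adopters, min_share=0.3, min_listeners=3):
    """Flag an artist as breaking if enough listeners exist and a large
    enough share of them are early adopters (thresholds are assumptions)."""
    unique_listeners = set(listeners)
    if len(unique_listeners) < min_listeners:
        return False
    return early_adopter_share(unique_listeners, early_adopters) >= min_share


# Hypothetical users who tended to discover artists before they became popular.
early_adopters = {"u1", "u2", "u3"}

print(is_breaking(["u1", "u2", "u7", "u8"], early_adopters))
print(is_breaking(["u1", "u4", "u5", "u6"], early_adopters))
```

The first artist has two early adopters among four listeners and passes the assumed threshold, while the second has only one and does not.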


4. Tracking changes in music preference distribution – making music that hits the current preferences or even future preferences

Unlike prescriptive curatorship, which relies on a group of experts, music preference distribution numbers show artists how their chosen genre and formats fit audience demographics and how their music could be adapted to current or future preferences. The general consensus in the music industry is that music preference algorithms should come into play after the music is produced. Otherwise, all music will end up sounding the same as it mimics popular artists.

There is not yet a system that would automatically recommend changing the content of the song based on what users prefer. Nevertheless, attempts to use the numbers to create songs people will like are still being made.

5. Royalty Advances

Calculating royalty advances is a complex task that requires comprehensive tracking of music consumption across all platforms. Distributors such as Amuse and iGroove offer a royalty advance service that predicts upcoming payout amounts so that artists can invest in their music long before the actual royalties kick in. These systems analyze streaming data to calculate upcoming earnings.
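A very simplified version of such a calculation is sketched below in Python. It is not how Amuse or iGroove compute their advances. The moving-average forecast, the flat `payout_per_stream` rate, and the `advance_ratio` are assumptions chosen only to show the shape of the problem.

```python
def forecast_royalties(monthly_streams, payout_per_stream, months_ahead=6, window=3):
    """Project future royalties by assuming the average of the most recent
    `window` months of streams continues for `months_ahead` months."""
    if not monthly_streams:
        raise ValueError("at least one month of streaming data is required")
    recent = monthly_streams[-window:]
    expected_monthly_streams = sum(recent) / len(recent)
    return expected_monthly_streams * payout_per_stream * months_ahead


def advance_offer(monthly_streams, payout_per_stream, advance_ratio=0.5, **forecast_options):
    """Offer a fraction of the projected royalties as an upfront advance."""
    projected = forecast_royalties(monthly_streams, payout_per_stream, **forecast_options)
    return advance_ratio * projected


# Hypothetical streaming history and per-stream payout rate.
streams = [100_000, 120_000, 110_000, 130_000]
print(round(advance_offer(streams, payout_per_stream=0.003), 2))
```

A production system would also have to account for differing rates per platform and territory, seasonality, and the risk that streams decline, which is part of what makes the task complex.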

Recently, the topic got even more attention through the hype around NFTs. Crypto-investors want to predict future royalty payouts and the value of their assets.

Future platforms will most likely be able to prescribe a course of action regarding which distribution platform to focus on based on the predicted royalty amounts.

The main concern in the industry is how far one can go with technological intelligence, considering that music is a creative activity and the human element is still largely prevalent. An intelligent machine that can tell which music to produce based on a prediction of future user preferences generally prompts an adverse reaction in the industry.

Nevertheless, artists and labels can already make intelligent decisions based on available data, such as adjusting the content of songs or signing future-breaking artists identified by AI.

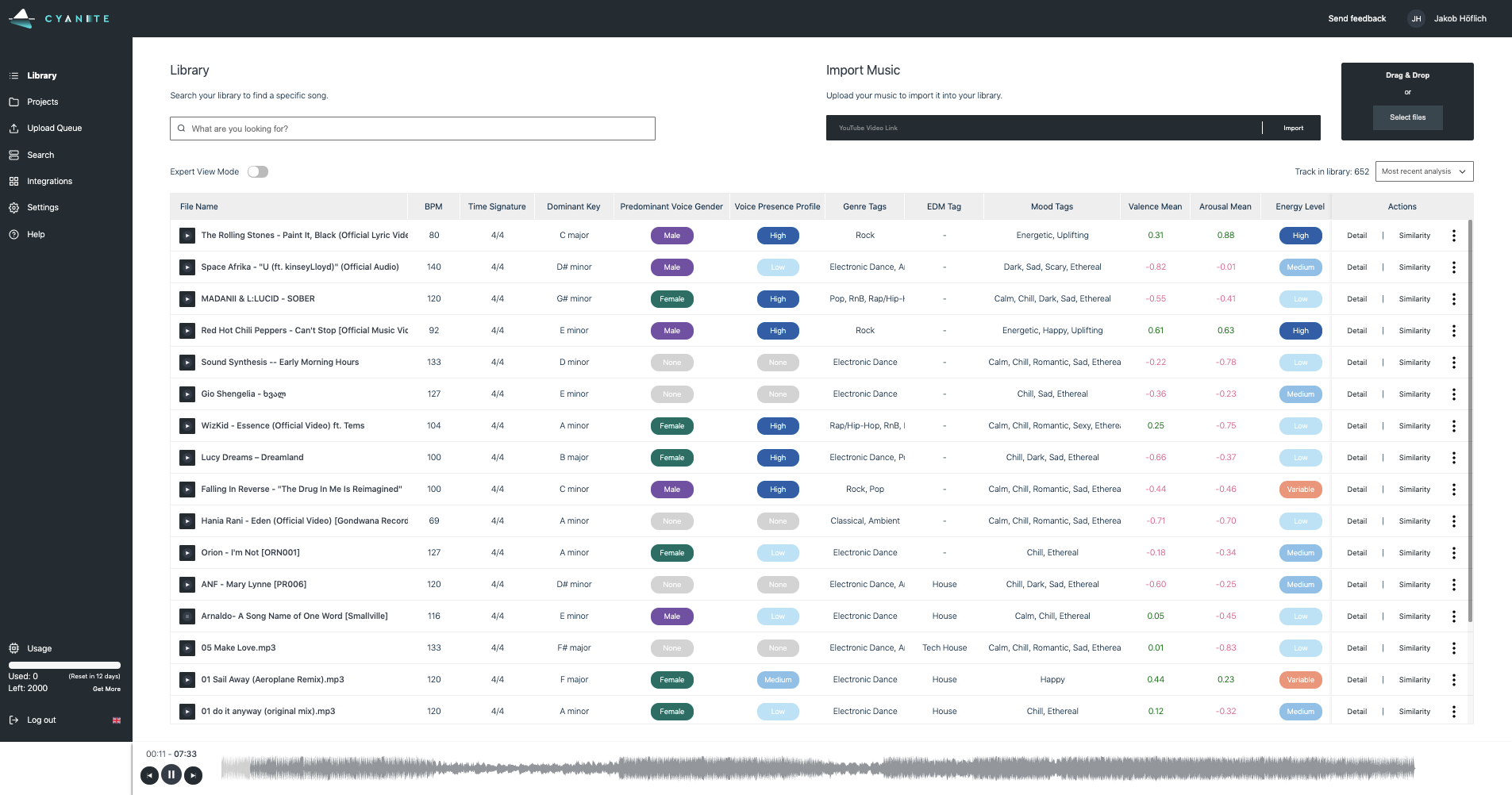

At Cyanite, we provide our API for access to data and the development of any kind of intelligence engine. As always, at each level of the pyramid, the quality of data plays a vital role. Cyanite generates data about each music track such as bpm, dominant key, predominant voice gender, voice presence profile, genre, mood, energy level, emotional profile, energy dynamics, emotional dynamics, instruments, and more.

Cyanite Library view

I want to analyze my music data with Cyanite – how can I get started?

If you want to get a first feel for Cyanite’s technology, you can also register for our free web app to analyze music and try similarity searches without any coding needed.