Empowering Researchers with Free AI Music Analysis – Cyanite for Innovators Spotlight

Last updated on March 6th, 2025 at 02:14 pm

We believe in the power of our AI music analysis tools to fuel creativity across diverse fields, from the arts and research to grassroots movements and creative coding.

That’s why we launched Cyanite for Innovators in 2023, a support programme designed to empower individuals and teams working on non-commercial projects across the arts, research and creative coding communities. Participants gain access to our AI music analysis tools, opening the door for groundbreaking experimentation in areas like interactive art, digital installations, and AI-driven research.

So far, we’ve received more than 20 applications, five of which have been selected for the programme and are featured below.

Are you working on a groundbreaking AI-driven project? Apply now and join a community of innovators shaping the future.

How to Apply

1. Click the button below.

2. Submit a detailed proposal outlining your project’s objectives, timeline, and expected outcomes.

3. Allow us 4-8 weeks to review your application and get back to you.

Music and Dance Visualizer by Dilucious

Agustin Di Luciano, a digital artist and developer, is pushing the limits of real-time interactivity by combining audio processing, motion capture, procedural generation, and Cyanite’s AI music analysis and ML technology.

His project creates immersive, AI-powered sensory landscapes that transform movement and sound into stunning real-time visuals. These images are from his recent exhibition at Art Basel Miami, where he presented his Music Visualizer to Miami’s top tech entrepreneurs and to notable Latin American art collectors.

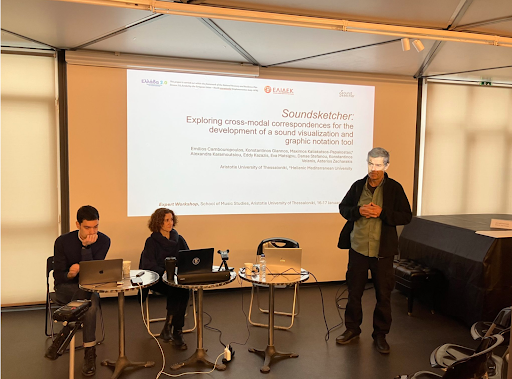

Soundsketcher

Led by Asteris Zacharakis, PhD and funded by the Hellenic Foundation for Research and Innovation, Soundsketcher (2024–2025) is a cutting-edge research project that blends computational, cognitive, and arts-based methodologies to develop an application that automatically converts audio into graphic scores.

This technology assists users in learning, analyzing, and co-creating music, making music notation and composition more accessible and intuitive.

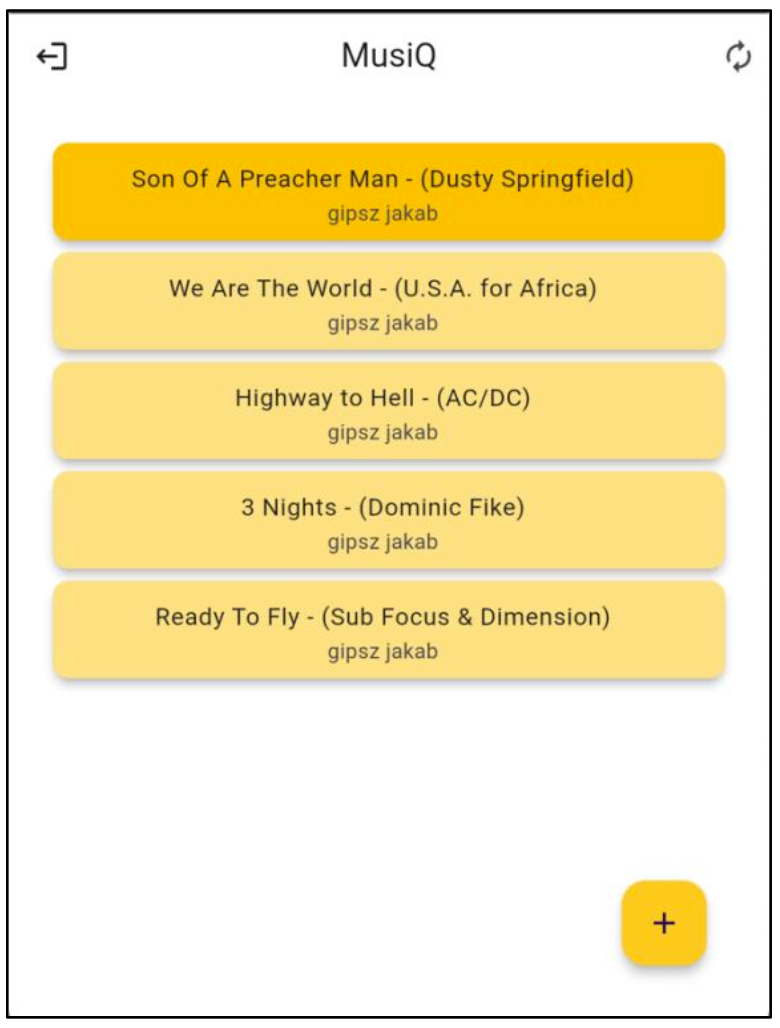

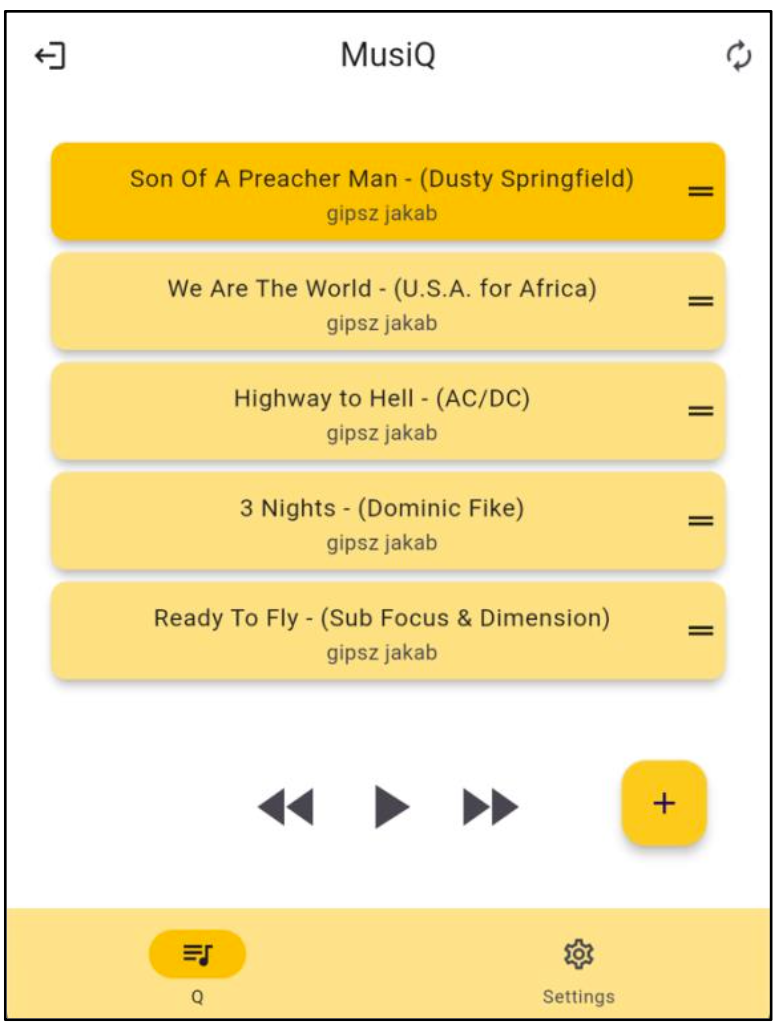

MusiQ

For his thesis at the Budapest University of Technology and Economics, Kristóf Kasza developed MusiQ, a multi-user service that sorts song requests based on parameters analyzed by Cyanite. It uses a FastAPI backend running in Docker and a Flutter-based frontend.

Cyanite’s AI music analysis API enabled precise sorting of music by key, genre, BPM, and mood, which contributed to the project’s success: it earned him top marks. Although he has since started a full-time job as a software developer, he looks forward to enhancing MusiQ further in the future.
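MusiQ’s actual sorting logic isn’t described here, but a small sketch can show how analysis parameters like BPM, genre, mood, and key might drive a request queue. It assumes each request already carries those values (for example, fetched from Cyanite’s API beforehand; that fetch step and all field names below are hypothetical and are not Cyanite’s response schema). It then orders the queue greedily so that each next song has the tempo closest to the previous one, using genre, mood, and key matches as tie-breakers. Python is used to match MusiQ’s FastAPI backend.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SongRequest:
    """One queued request with analysis results assumed to be already fetched.

    Field names are hypothetical and do not mirror Cyanite's API schema.
    """
    title: str
    genre: str
    mood: str
    key: str
    bpm: float


def order_requests(requests, start_bpm=None):
    """Greedy ordering: play next the request whose BPM is closest to the
    current song, preferring matching genre, then mood, then key on ties.

    An illustrative heuristic only, not MusiQ's actual algorithm.
    """
    remaining = list(requests)
    if not remaining:
        return []
    # Start from the slowest song unless a starting tempo is given.
    if start_bpm is None:
        current = min(remaining, key=lambda r: r.bpm)
    else:
        current = min(remaining, key=lambda r: abs(r.bpm - start_bpm))
    remaining.remove(current)
    ordered = [current]
    while remaining:
        prev = ordered[-1]
        # Smallest tempo jump first; False (a match) sorts before True.
        nxt = min(
            remaining,
            key=lambda r: (
                abs(r.bpm - prev.bpm),
                r.genre != prev.genre,
                r.mood != prev.mood,
                r.key != prev.key,
                r.title,
            ),
        )
        remaining.remove(nxt)
        ordered.append(nxt)
    return ordered


queue = [
    SongRequest("A", "pop", "happy", "C major", 120),
    SongRequest("B", "rock", "energetic", "E minor", 140),
    SongRequest("C", "pop", "happy", "G major", 118),
    SongRequest("D", "ambient", "calm", "D major", 80),
]
print([r.title for r in order_requests(queue)])  # ['D', 'C', 'A', 'B']
```

A greedy nearest-tempo pass keeps transitions smooth without the cost of searching every possible order; a real service might instead weight mood or genre more heavily, depending on the listening context.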

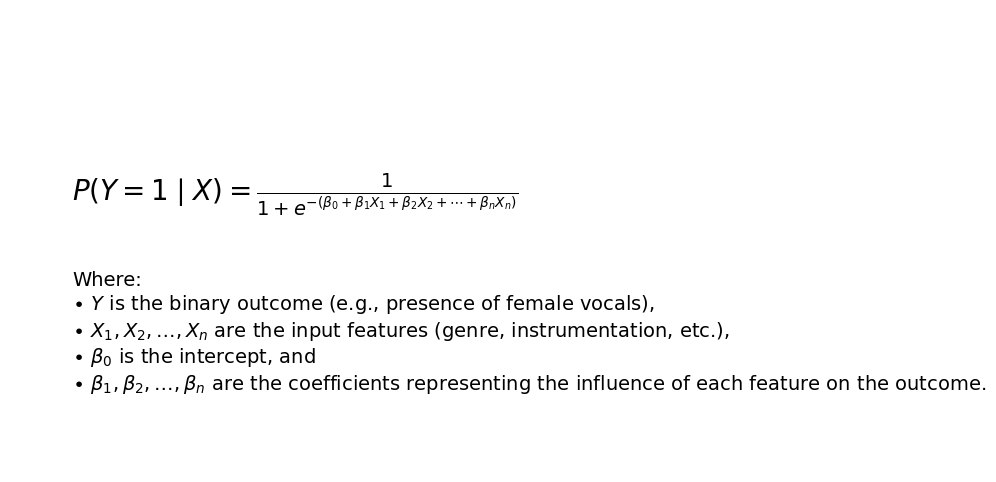

Expanding the Boundaries of Queer Music Analysis: A Comparative Study with AI Insights

A research team led by Dr. Jörg Mühlhans at the Institute of Musicology, University of Vienna, is conducting a large-scale quantitative analysis of 125 songs of queer music that reveals key trends in emotional tone and queer representation. The team aims to integrate Cyanite’s AI to validate, expand, and refine these insights for future research.

Thesis: What is the greatest factor in making a timeless song?

For his dissertation, Huw Lloyd is conducting primary research into the key musical, historical/cultural, and economic factors that make a song “timeless”, meaning that it resonates across generations. He aims to determine the most influential of these elements and to provide insights for musicians seeking to understand and apply these trends.

Feel inspired?

If you have a research project in mind or would like to try out the technology that supports these innovative projects, click the button below to analyze your own songs!