AI Panel: Using AI Music Search in a Co-Creative Approach between Human and Machine

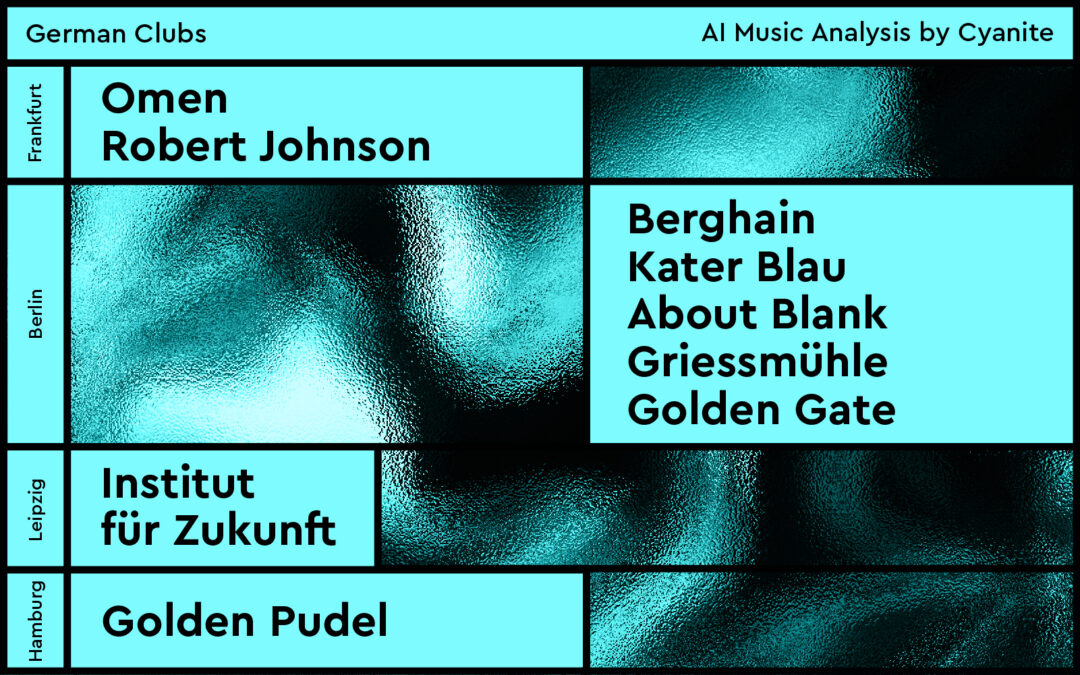

In September 2022, Cyanite co-founder Markus Schwarzer took part in a panel discussion at the Production Music Conference 2022 in Los Angeles.

The panel discussed the role of AI in a co-creative approach between humans and machines. The participants were Bruce Anderson (APM Music), Markus Schwarzer (Cyanite), Nick Venti (PlusMusic), Philippe Guillaud (MatchTune), and Einar M. Helde (AIMS API).

The panel raised pressing questions about the future of AI, so we decided to publish our takeaways here. To watch the full video of the panel, scroll down to the middle of the article. Enjoy the read!

Human-Machine Co-creativity

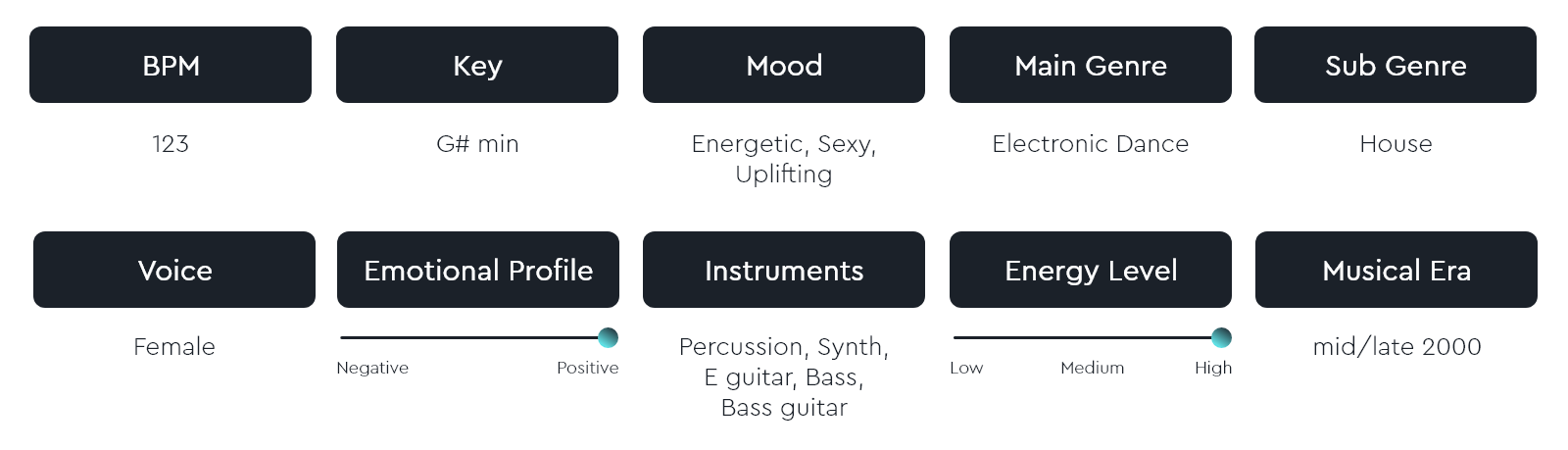

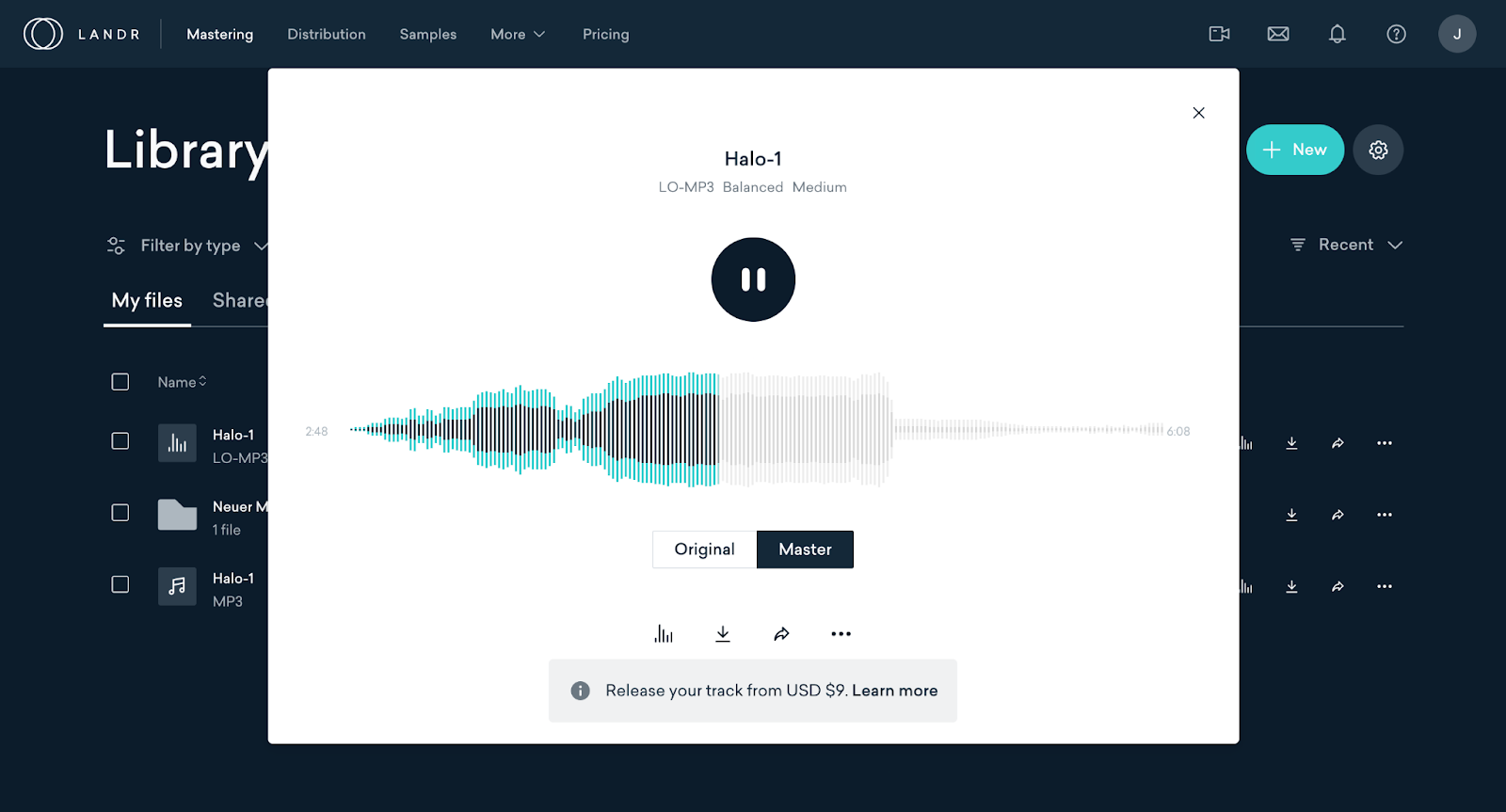

AI performs many tasks that are usually difficult for people, such as analyzing song data, extracting information, searching music, and creating completely new tracks. As AI usage increases, questions have been raised about AI’s potential and its ability to create with humans or on its own. The possibility of AI replacing humans is, perhaps, one of the most contested topics.

The PMC 2022 panel focused on the topic of co-creativity. Some AI systems can create on their own, but co-creativity refers to creativity shared between the human and the machine.

So it is not the sum of individual creativity. Rather, it is the emergence of various new forms of interaction between humans and machines. To find out all the different ways AI music search can be co-creative, let’s dive into the main takeaways from the panel:

Music industry challenges

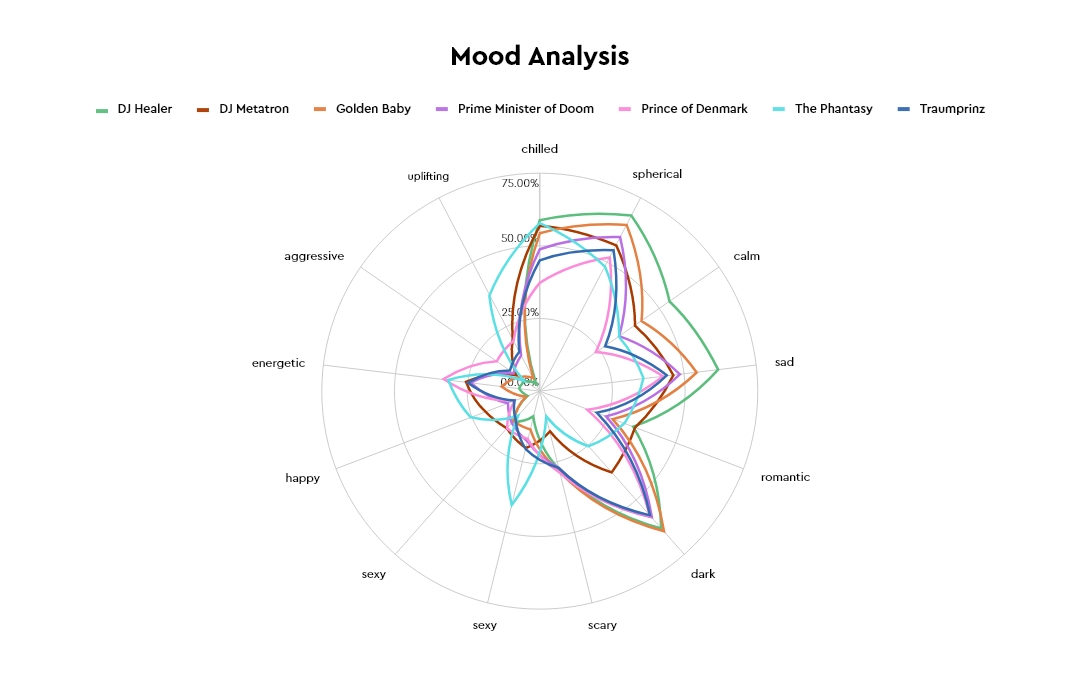

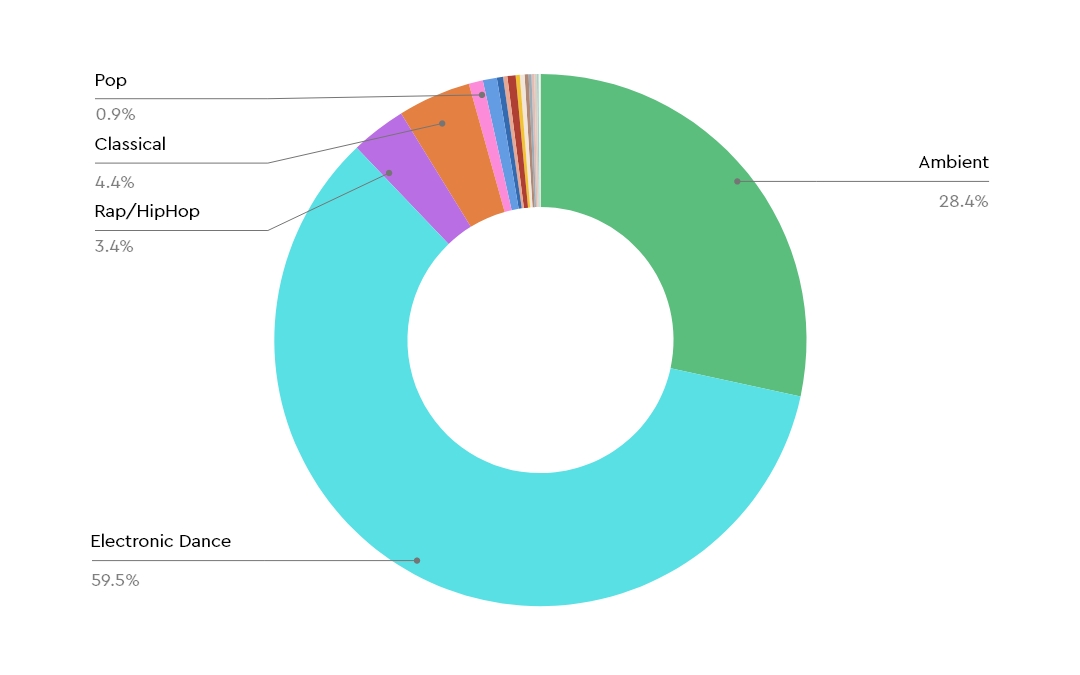

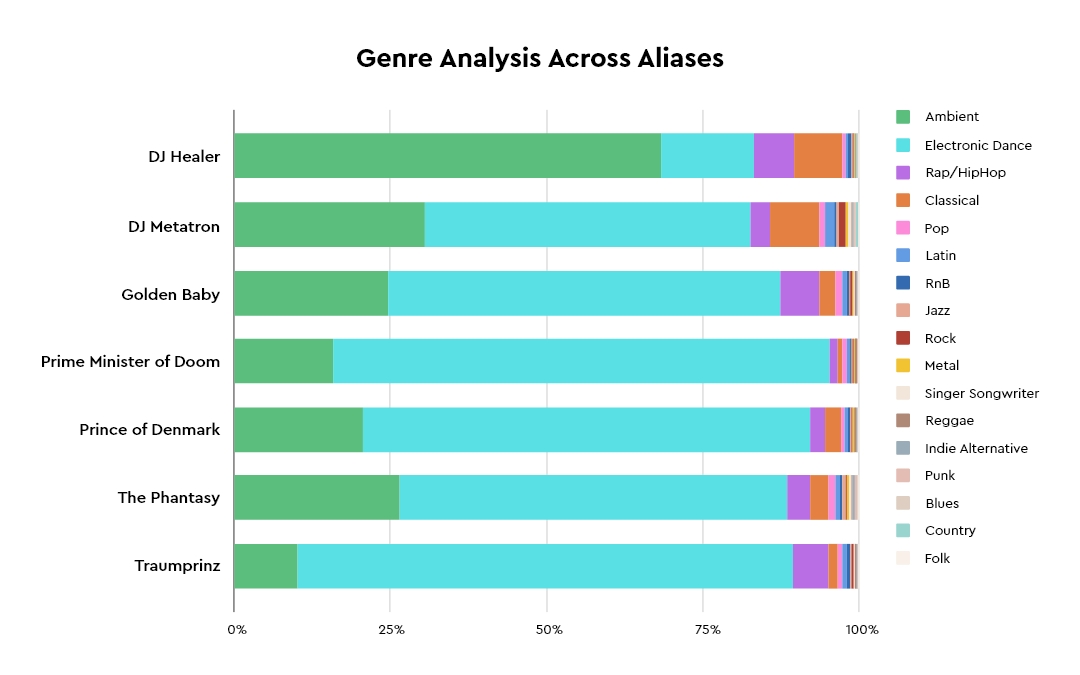

The main music industry challenge that all participants agreed on was the overwhelming amount of music produced these days. Another challenge is reaching a shared understanding of music.

The way someone searches for music depends on their role in the music industry and on their understanding of music, which can differ widely. Music supervisors, for example, use a different language to search for music than film producers.

We talked about this in detail on the Synchtank blog back in May 2022. AI can help solve these issues, especially with the new developments in the field.

Audience Question from Adam Taylor, APM Music: Where do we see AI going in the next 5 years?

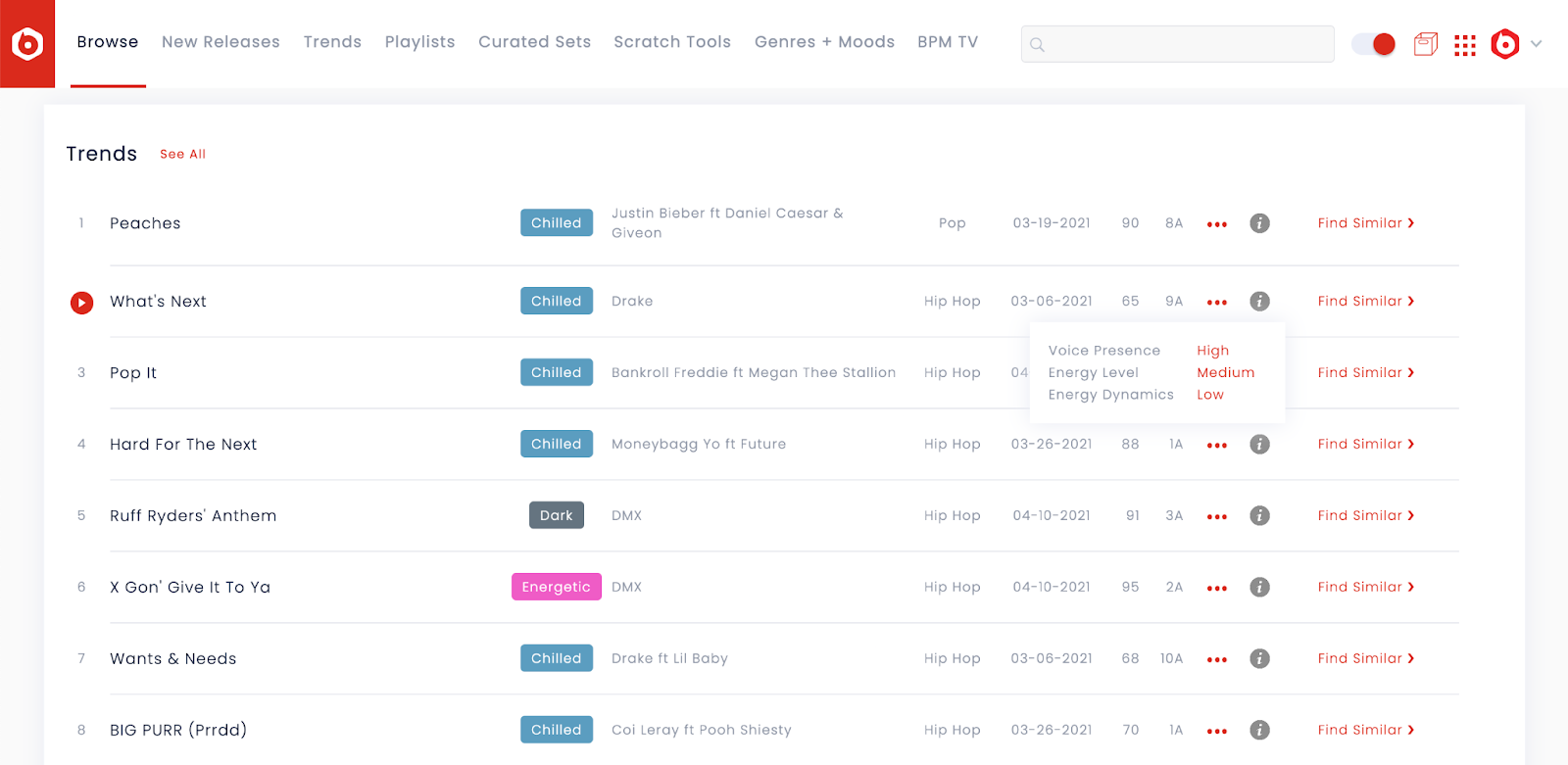

So what’s in store for music AI in the next 5 years? We’re entering a post-tagging era marked by the combination of developments in music search. Keyword search will no longer be the main way to search for or index music. Instead, the following developments will take place:

- Similarity Search has shown that we can use complex inputs to find music. Similarity search pulls a list of songs that match a reference track. It is projected to be the primary way of searching for music in the future.

- Free Searches – full-text search that lets you look for music in your own words, based on natural language processing technologies. With a Free Search, you enter what comes to mind into a search bar and the AI suggests a song. The technology is similar to DALL-E or Midjourney, which return an image based on text input.

- Music service that already knows what to do – looking further ahead, music services will emerge that recommend music depending on where you are in your role or personal development. These services will cater to all levels of search: from an amateur level that simply gives you a requested song to expert searches that follow an elaborate sync brief, including images and videos that accompany the brief or even a stream of consciousness.
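To make the idea of similarity search more concrete, here is a minimal sketch in Python. It assumes each track has already been reduced to a small numeric feature vector, and it ranks catalog tracks by cosine similarity to a reference track. The track titles, the three feature dimensions, and the function names are all hypothetical and chosen for illustration only. This is not Cyanite's implementation, and real systems typically work with much larger learned audio representations.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero vector as similar to nothing
    return dot / (norm_a * norm_b)


def similarity_search(reference, catalog, top_k=3):
    """Return up to top_k (title, score) pairs, most similar first."""
    scored = [
        (title, cosine_similarity(reference, features))
        for title, features in catalog.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical catalog. Each vector is an assumed
# (energy, calmness, darkness) description of a track.
CATALOG = {
    "Calm Piano": [0.1, 0.9, 0.2],
    "Epic Trailer": [0.9, 0.1, 0.8],
    "Upbeat Pop": [0.8, 0.3, 0.1],
}

# Hypothetical reference track: energetic and fairly dark.
REFERENCE = [0.85, 0.15, 0.7]

if __name__ == "__main__":
    for title, score in similarity_search(REFERENCE, CATALOG):
        print(f"{title}: {score:.3f}")
```

With these made-up numbers, the ranking puts "Epic Trailer" first, then "Upbeat Pop", then "Calm Piano". The point of the sketch is that the input is a whole track rather than a keyword, which is what allows a reference song to stand in for a description that is hard to put into words.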

Audience Question from Alan Lazar, Luminary Scores: Can AI decode which songs have the potential to be a hit?

While some AI companies have attempted to decode the hit potential of music, it is still unclear whether there is any way to determine if a song will become a hit.

The nature of pop culture and the many factors that make up a hit, from songwriting to production to elusive factors such as what the song is connected to, make it impossible to predict whether or not a song will become a hit.

The vision for AI from Cyanite – where would we like to see it in the future?

AI curation in music is developing at lightning speed. We’re hoping that it will make the music space more exciting and diverse, in particular through:

- Democratization and diversity of the field – more opportunities will become available for musicians and creators, including democratized access to sync opportunities and other ways to make a livelihood from music.

- Creativity and surprising experiences – right now AI is designed to do the same tasks at a rapid speed. We’re hoping AI will be able to perform tasks co-creatively and produce surprising experiences based on music but also on other factors. As music has the ability to tap directly into people’s emotions, it has the potential to be a part of a greater narrative.


Video from the PMC 2022 panel: Using AI Music Search In A Co-Creative Approach Between Human and Machine

Bonus takeaway: Co-creativity between users and tech – supplying music data to technology

It seems that we should be able to pull all sorts of music data from environments such as video games and user-generated content. However, the diversity of music projects is quite astonishing.

So when it comes to co-creativity in the sense of enhancing machine tagging with human tagging, personalization can be harmful in B2B. In B2B, AI mainly works with audio features without the involvement of user-generated data.

Conclusion

To sum up, AI can co-create with humans and solve the challenges facing the music industry today. There is a lot in store for AI’s future development and there is a lot of potential.

Still, AI is far from replacing humans and should not replace them completely. Instead, it will improve in ways that make music searches more intuitive and co-creative, responding to human input in the form of a text search, image, or video.

As usual with AI, some people overestimate what it can do. Some tasks, such as identifying music’s hit potential, remain out of reach for AI.

On the other hand, it’s not hard to envision a future where AI can help democratize access to opportunities for musicians and produce surprising projects where music will be a part of a shared emotional experience.

We hope you enjoyed this read and learned more about AI co-creativity and the future of AI music search. If you’re interested in learning more, you can also check out the article “The 4 Applications of AI in the Music Industry”. If you have any feedback, questions, or contributions, please reach out to markus@cyanite.ai.

I want to integrate AI search into my library – how can I get started?

Please contact us with any questions about Cyanite AI via mail@cyanite.ai. You can also directly book a web session with Cyanite co-founder Markus here.

If you want to get a first feel for Cyanite’s technology, you can also register for our free web app to analyze music and try similarity searches without any coding needed.