How to Turn Music Data into Actionable Insights

Decisions in the music industry are increasingly made based on data. Services like Chartmetric, Spotify for Artists, or Facebook Business Manager among others are powerful tools to enable music industry teams to back up their work with data. This process of collective data generation is called datafication, pointing at the fact that almost everything nowadays can be collected, measured, and captured either manually or digitally. Data can be used by recommendation algorithms to recommend music. This data is also an opportunity for the stakeholders to make business decisions, reach new audiences in the market, and find innovative ways to do business.

However, decision-making from data also requires a new level of analytical skills. Simple statistical analysis is not enough for data-based decision-making. The amount of data, its complexity, and challenges associated with storing and synchronizing large data require smart technologies to help manage the data and make use of it.

This article explains the general framework for data-based decision-making and explores how Cyanite capabilities can lead to actionable business insights. It is specifically tailored to music publishers, music labels, and all types of music businesses that own musical assets. This framework can be applied in a variety of ways to areas such as catalog acquisitions, playlist pitching, and release plans.

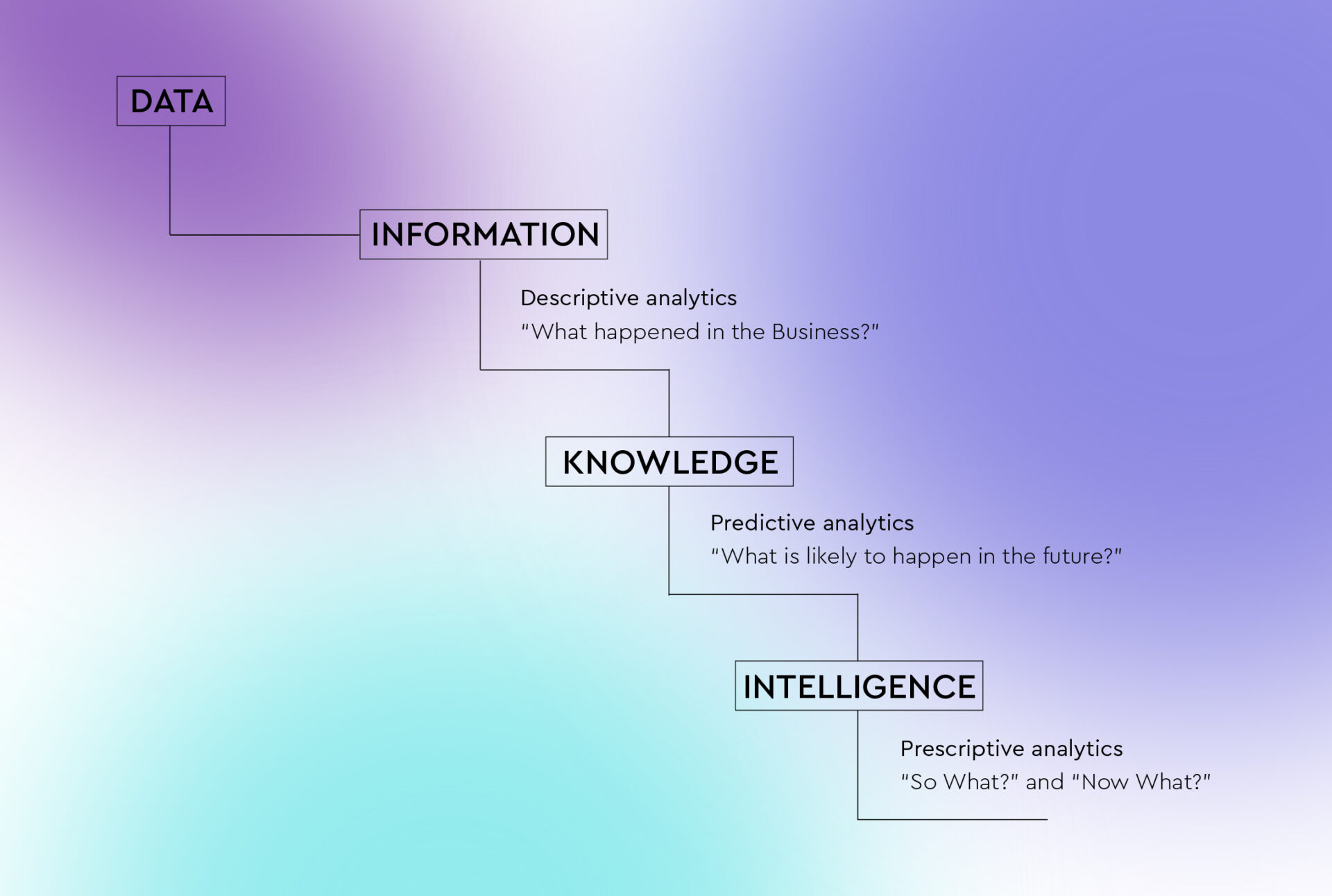

Data Pyramid

So how are data-based decisions made? The framework for decision-making is based on the Berklee University Data Pyramid model by Liv Buli. In the next parts we outline the 4 steps of the model and add a certain Cyanite layer to it:

- Data Layer

- Information Layer

- Knowledge Layer

- Intelligence Layer

1. Data Layer

At the very first layer, the raw data is generated and collected. This data includes user activity, information about the artist such as social media accounts and usernames, album release dates or concert dates, and creative metadata. Among others, a technology like Cyanite generates creative metadata such as genre, mood, instruments, bpm, key, vocals, and more.

At this stage the data is collected in a raw form, so limited insights can be made out of it. This step is, however, crucial for insight generation at the next layers of the pyramid.

It’s also important to remember a general rule of thumb: the better the input, the better the output. That is, the higher the quality of this initial data generation, the better insights you can derive from it at the top of the pyramid. Any mistakes made in the crucial data layer will be carried in the other layers biasing insights and limiting actionability.

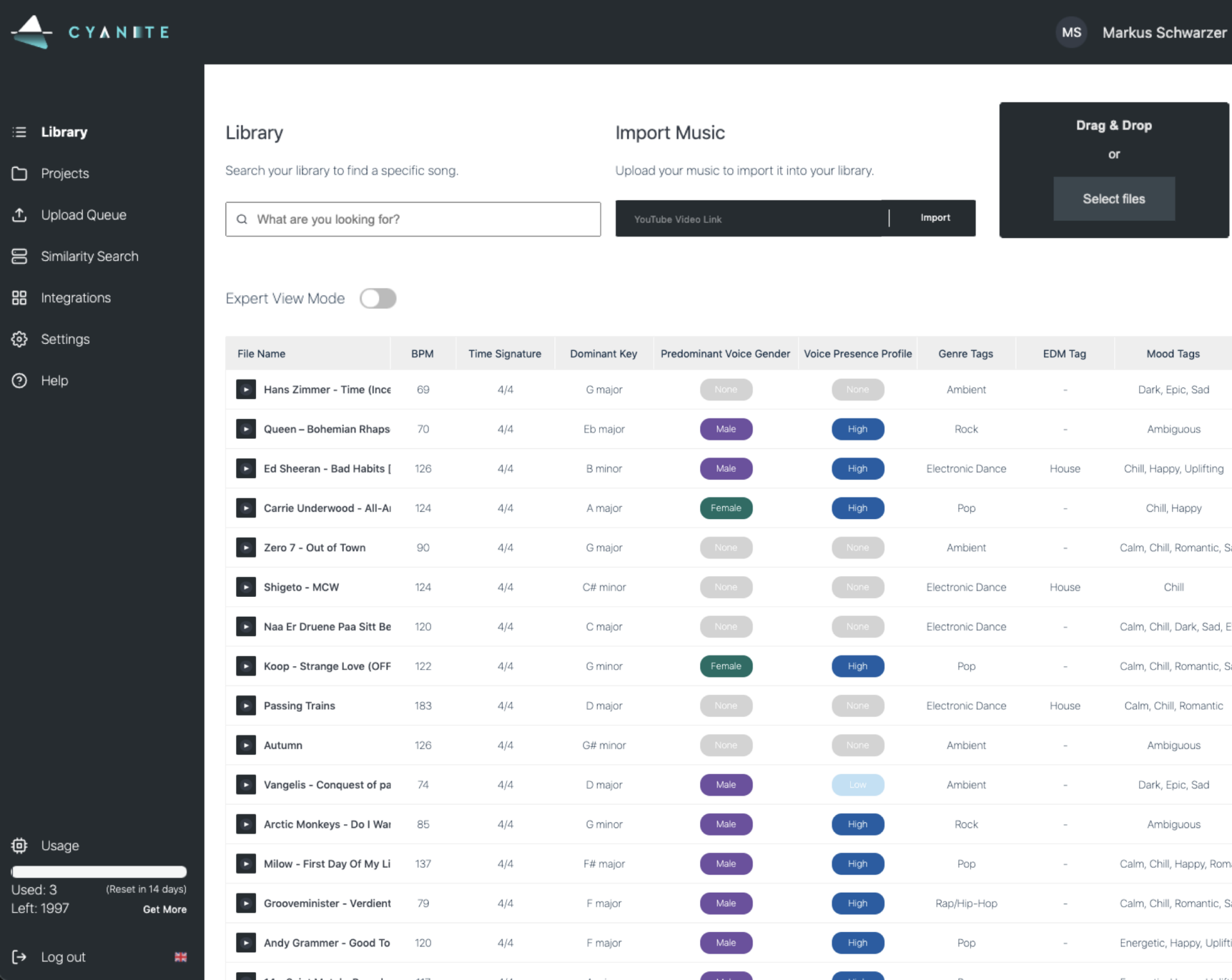

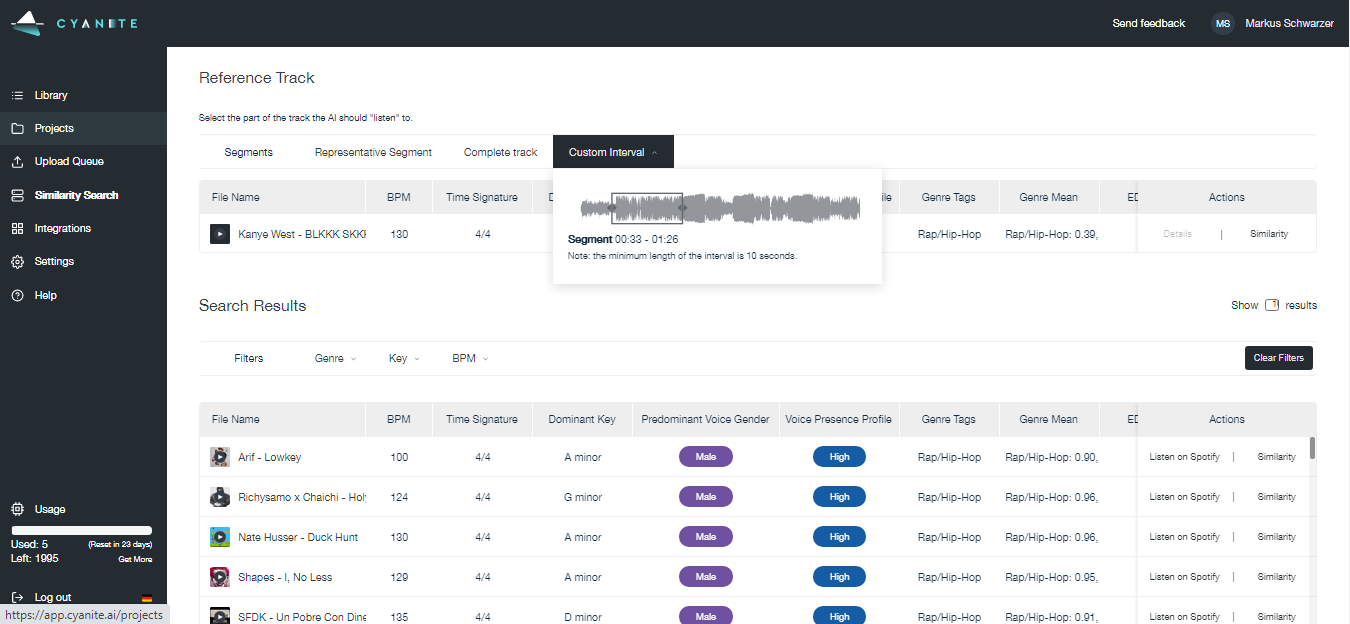

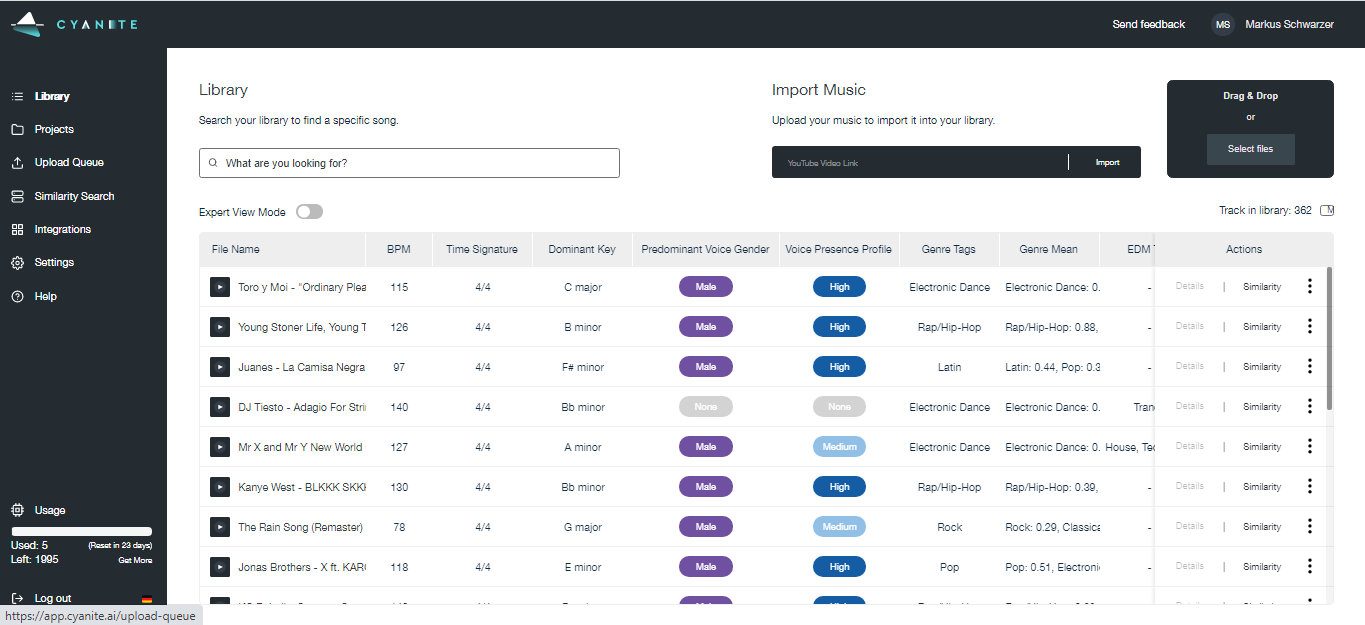

Our philosophy at Cyanite is to have a reduced set of data output in the first layer to help accuracy and reliability. The data is presented in readable form in the Cyanite interface. You can see what kind of data Cyanite generates in this analysis of Spotify’s New Music Friday Playlist.

Cyanite Interface

2. Information Layer

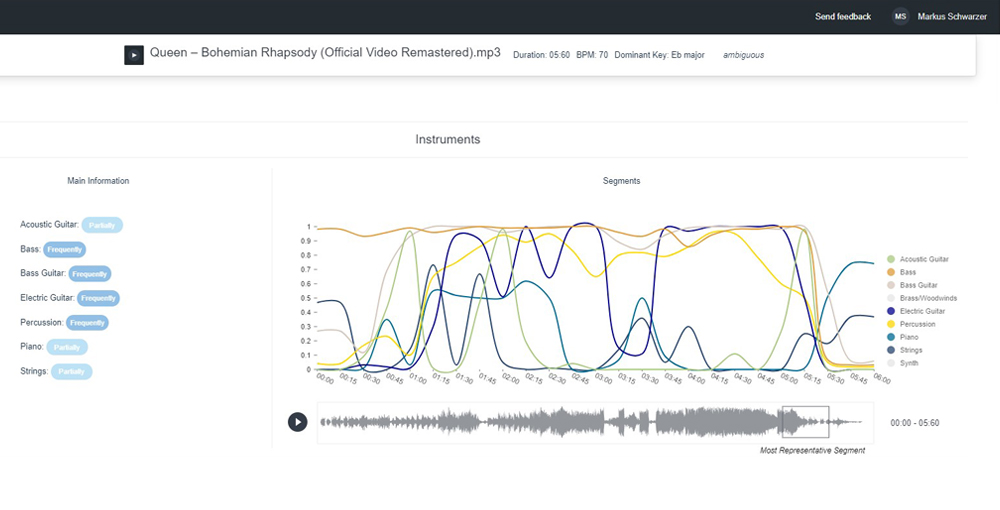

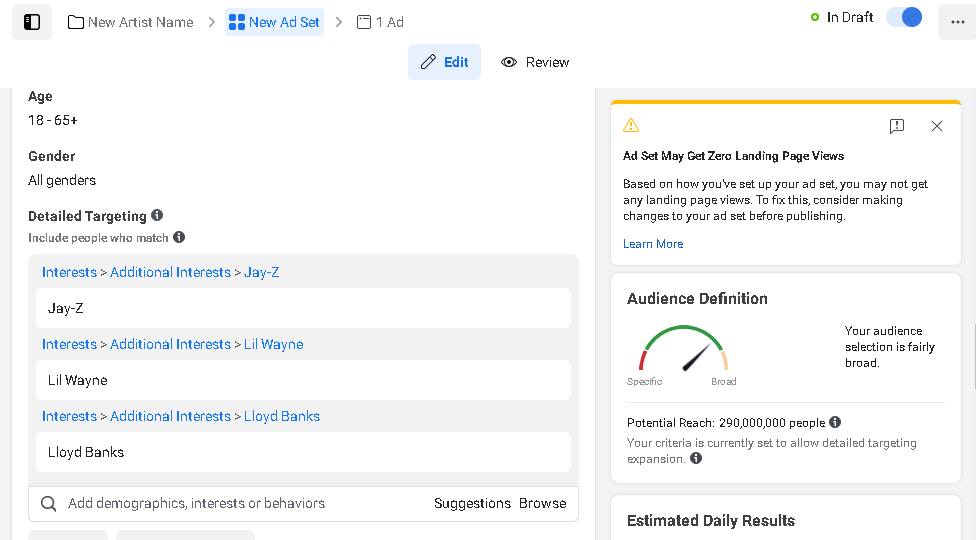

At the information level, the data is structured and visualized, often in a graphical form. A first glance at data in a visual form can already bring value and enable the company to answer questions such as: “What happened with the artist, playlist, or a chart?”

At each stage of the pyramid, an appropriate analytical method is used. The visualization and reporting methods among others at the information stage are called descriptive analytics. The main goal at this stage is to identify useful patterns and correlations that can be later used for business decisions.

Analytical methods used at each stage of the data pyramid

(based on Sivarajah et al. classification of data analytical models, 2016)

At this level, there are two main techniques: data aggregation and data mining. Data aggregation deals with collecting and organizing large data sets. Data mining discovers patterns and trends and presents the data in a visual or another understandable format.

This is the simplest level of data analysis where only cursory conclusions can be made. Inferences and predictions are not made on this level.

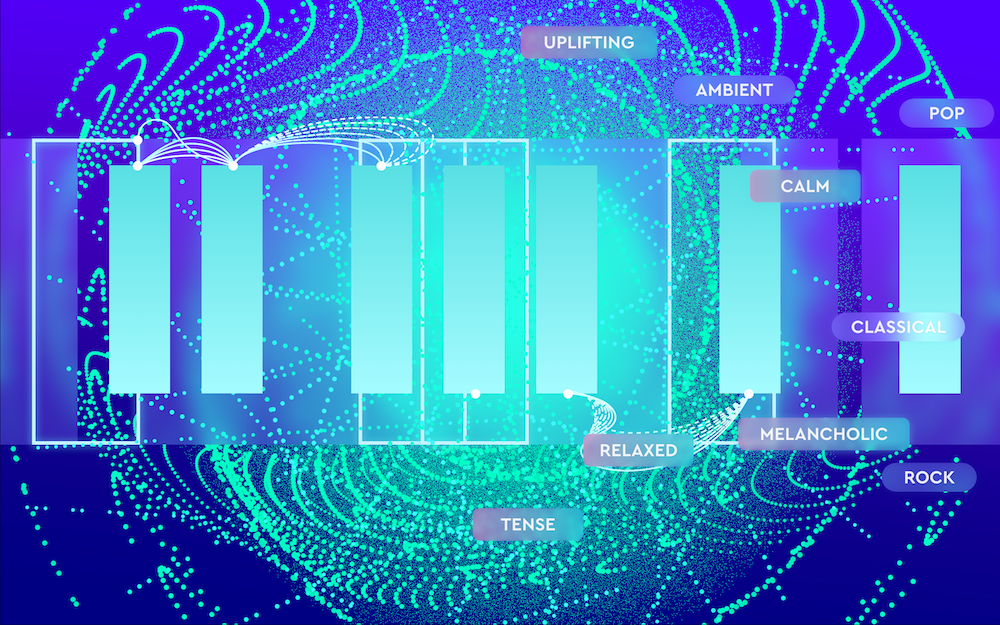

One of many ways to visualize music via Cyanite’s detail view

3. Knowledge Layer

The knowledge layer is the stage where the information is converted into knowledge. Benchmarking and setting milestones are used at this stage to derive insights from data. For example, you can set expectations for the artist’s performance based on the information about how they performed in the past. Various activities such as events and show appearances can influence the artist’s performance and this information too can be converted into knowledge, indicating how different factors affect the artist’s success.

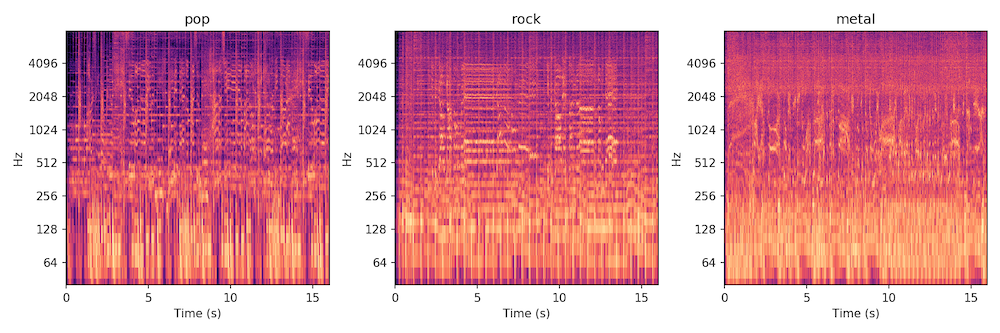

This stage employs the so-called predictive analytics that answer the question: “What is likely to happen in the future?” This kind of analytics captures patterns and relationships in data. They can also deal with historical patterns, connect them to future outcomes, and capture interdependencies between variables.

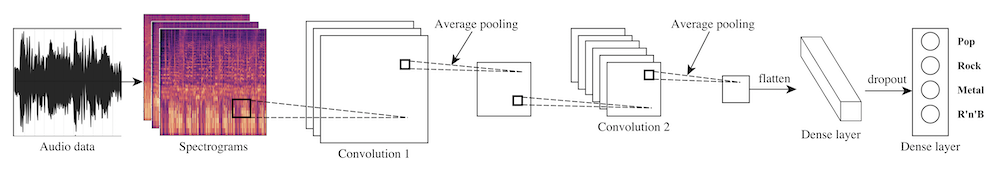

At the knowledge level, such techniques as data mining, statistical modeling, and machine learning algorithms are used. For example, machine learning methods can try to fill in the missing data with the best possible guesses based on the existing data. More information on the techniques and advantages and disadvantages of each analytical method can be found here.

Here are some contexts in which the data generated by Cyanite could create knowledge:

- Analyzing a “to-be-acquired” catalog of music rights and benchmarking it into the existing one;

- Analyzing popular playlists to predict matches;

- Analyzing trending music in advertising to find the most syncable tracks in the own catalog.

4. Intelligence Layer

The intelligence layer is the stage where questions such as “So What?” and “Now What?” are asked and possibly answered. This level enables the stakeholders to predict the outcomes and recommend actions with a high level of confidence. However, decision-making at this level is risky as the wrong prediction can be expensive. While this level is still very much human-operated, machines, especially AI, are moving up the pyramid levels to take over insights generation and decision making.

Prescriptive analytics deal with cause-effect relationships among knowledge points. They allow businesses to determine actions and assess their impact based on feedback produced by predictive analytics at the knowledge level. This is the level where the truly actionable insights that can affect business development are born.

Intelligence anticipates what and when something might happen. It can even attempt to understand why something might happen. At the intelligence level, each possible decision option is evaluated so that stakeholders can take advantage of future opportunities or avoid risks. Essentially, this level deals with multiple futures and evaluates the advantages of each option in terms of future opportunities and risks. For further reading, we recommend this article from Google revealing the mindset you need to develop data into business insights.

Cyanite and Data-Based Decision

At the very base of the Data Pyramid lies raw data. The quality and accuracy of raw data is detrimental in decision making.

Cyanite generates data about each music track such as bpm, dominant key, predominant voice gender, voice presence profile, genre, mood, energy level, emotional profile, energy dynamics, emotional dynamics, instruments, musical era, and other characteristics of the song. This data is then presented in a visual format such as graphs with dynamic and the ability to choose the custom segment.

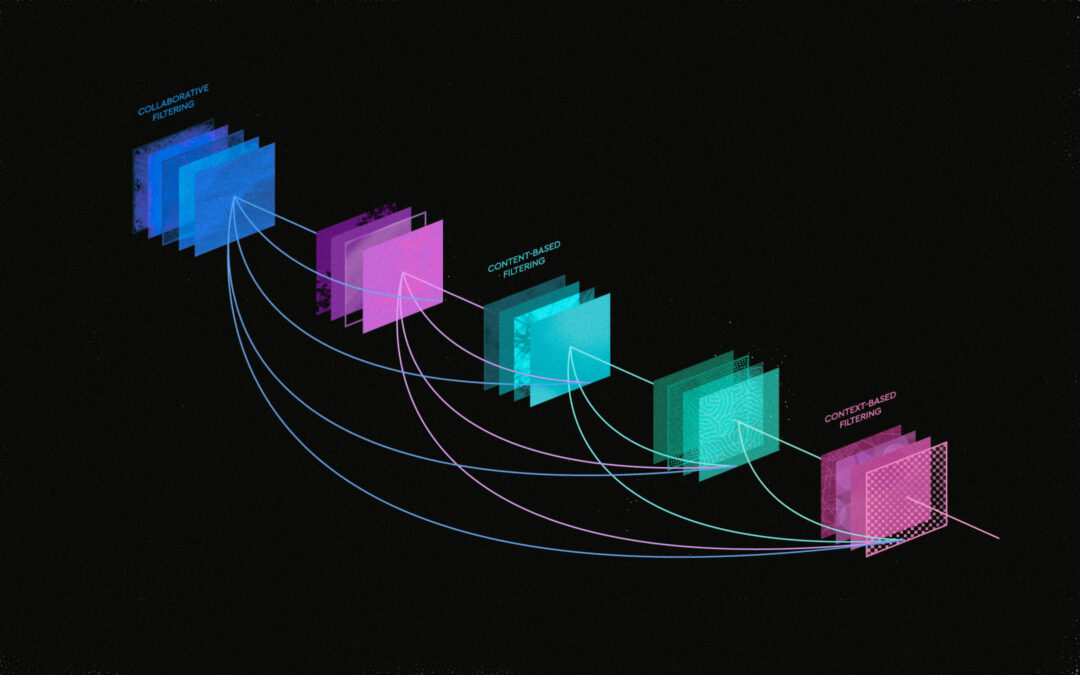

Based on different audio parameters, the system determines the similarity between the items, and lists similar songs based on a reference track. From Cyanite analytics, it can be derived, for example, what the overall mood of the library is and different songs can be added to make the library more comprehensive. Branding decisions can also be made using Cyanite, to ensure all music employed by the brand adheres to one mood or theme.

I want to integrate AI in my service as well – how can I get started?

Please contact us with any questions about our Cyanite AI via sales@cyanite.ai. You can also directly book a web session with Cyanite co-founder Markus here.

If you want to get the first grip on Cyanite’s technology, you can also register for our free web app to analyze music and try similarity searches without any coding needed.