Last updated on November 3rd, 2025 at 05:17 pm

This article is a continuation of the series “How to Turn Music Data into Actionable Insights”. We’re diving deeper into the first layer of the Data Pyramid and exploring the different kinds of metadata in the industry.

Metadata represents a form of knowledge in the music industry. Any information you have about a song is considered metadata: information about performance, sound, ownership, culture, and so on. Metadata is already used at every step of the value chain: music recommendation algorithms, release planning, advance payments, marketing budgeting, royalty payouts, artist collaborations, and more.

Nonetheless, the music industry has an ambivalent relationship with musical metadata. On the one hand, the data is necessary because millions of songs circulate in the industry every day. On the other hand, music is varied and individual (pop music is very different from ambient sounds, for example), so the metadata that describes it can take different forms and meanings, which makes the field quite complex.

This article intends to explore all kinds of metadata used in the industry, from basic descriptions of acoustic properties to company-proprietary data.

The article was created with helpful input from Music Tomorrow:

Music data is a multi-faceted thing. The real challenge for any music business looking to turn data into powerful insights is connecting the dots across all the various types of music data and aggregating it at the right level. This process starts with figuring out what the ideal dataset would look like, and a well-rounded understanding of all the various data sources available on the market is key.

Types of Metadata

There are various classifications of music metadata. The most basic one is Public vs Private metadata:

- Public metadata is easily available and visible to the public.

- Private metadata is kept behind closed doors for legal, security, or economic reasons, such as maintaining competitiveness. Typically, performance-related metadata like sales numbers is private.

Another very basic classification of metadata is Manual vs Automatic annotations:

- Manual metadata is entered into the system by humans, for example as annotations from editors or users.

- Automatic metadata is obtained through automatic systems, for example, AI.
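The two classifications are independent of each other, so any piece of metadata can be tagged along both axes. Below is a minimal Python sketch of that idea. The names `MetadataField`, `Visibility`, and `Source` are hypothetical, and the example values are made up for illustration rather than taken from a real catalogue.

```python
from dataclasses import dataclass
from enum import Enum


class Visibility(Enum):
    PUBLIC = "public"
    PRIVATE = "private"


class Source(Enum):
    MANUAL = "manual"        # entered by editors or users
    AUTOMATIC = "automatic"  # produced by an automatic system, e.g. AI


@dataclass(frozen=True)
class MetadataField:
    """One piece of metadata, tagged along both classification axes."""
    name: str
    value: object
    visibility: Visibility
    source: Source


# Illustrative values only (hypothetical track).
fields = [
    MetadataField("artist", "Example Artist", Visibility.PUBLIC, Source.MANUAL),
    MetadataField("mood", "uplifting", Visibility.PUBLIC, Source.AUTOMATIC),
    MetadataField("units_sold", 12500, Visibility.PRIVATE, Source.MANUAL),
]


def public_field_names(items):
    """Return the names of the fields that may be shown to the public."""
    return [f.name for f in items if f.visibility is Visibility.PUBLIC]
```

Keeping visibility and source as separate attributes means a field such as an automatically detected mood can be public while a manually entered sales figure stays private.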

1. Factual (Objective)

Factual metadata is objective. It includes data such as artist, album, year of publication, and duration of the song. Factual metadata is usually assigned by an editor or administrator and describes information that is historically true and cannot be contested.

Usually, factual metadata doesn’t describe the acoustic features of the song.

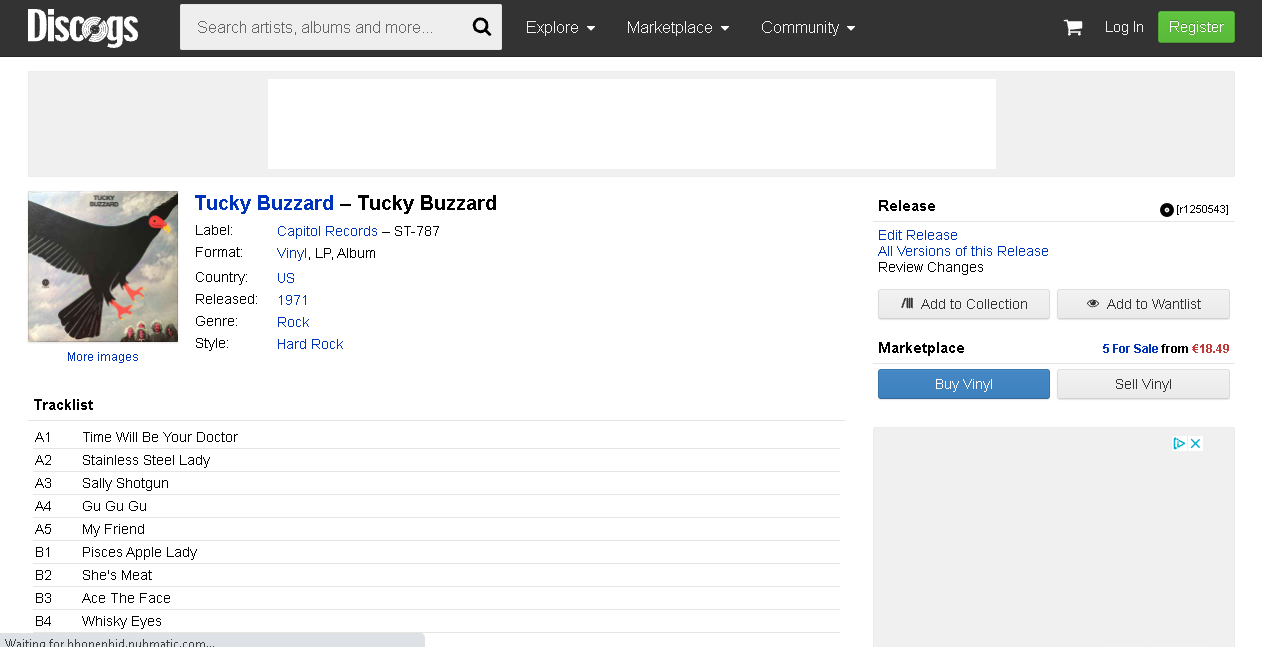

Besides the big streaming services, platforms like Discogs are great sources to find and double-check objective metadata.

Discogs provides a full account of factual metadata

2. Descriptive (Subjective)

Descriptive metadata (often also referred to as creative or subjective metadata) provides information about the acoustic qualities and artistic nature of a song. Examples include mood, energy, genre, and voice.

Descriptive metadata is usually defined subjectively, by a human or a machine, based on previous experience or a dataset. BPM, Key, and Time Signature are exceptions to this rule: they are objective metadata that describe the nature of the song, but we still count them as descriptive metadata.

Major platforms like Spotify and Apple Music have strict requirements for submitted files. Incomplete metadata can result in a file being rejected for further distribution. For music libraries, the main concern is the user search experience, as categorization and organization of songs in the library rely almost entirely on metadata.
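A completeness check of this kind can be sketched in a few lines of Python. The required field names below are an assumption made for illustration; they are not the actual submission requirements of Spotify, Apple Music, or any other platform.

```python
# Hypothetical set of required fields; real platforms publish their own specs.
REQUIRED_FIELDS = ("title", "artist", "album", "release_year", "duration_seconds")


def missing_fields(track: dict) -> list[str]:
    """Return required fields that are absent or empty in a track record."""
    missing = []
    for field in REQUIRED_FIELDS:
        value = track.get(field)
        if value is None or (isinstance(value, str) and not value.strip()):
            missing.append(field)
    return missing


# Made-up track record with one empty and one absent field.
track = {
    "title": "Example Song",
    "artist": "Example Artist",
    "album": "",  # an empty string counts as missing
    "release_year": 2021,
}

problems = missing_fields(track)
if problems:
    print("Incomplete metadata:", ", ".join(problems))
```

Running a check like this before submission surfaces gaps early, and the same list of missing fields can be used by a music library to flag tracks that will be hard to find in search.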

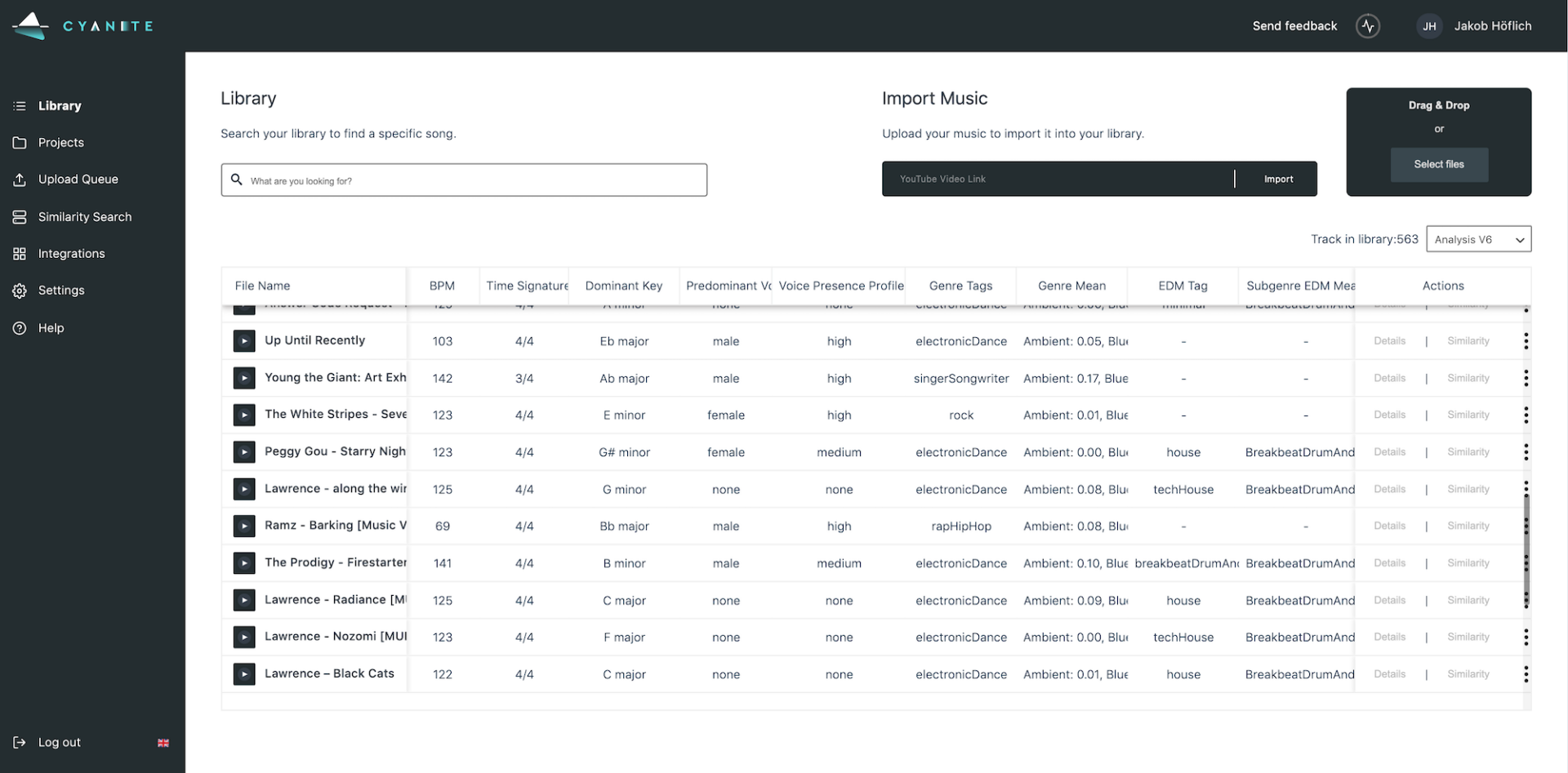

Companies such as Cyanite, Musiio, Musimap, or FeedForward are able to extract descriptive metadata from the audio file.

3. Ownership/Performing Rights Metadata

Ownership metadata defines the people or entities who own the rights to a track. These could be artists, songwriters, labels, producers, and others. All of them have a stake in the royalty split, so ownership metadata ensures that everyone involved gets paid. Allocating royalties can be quite complicated when multiple songwriters are involved or when a song uses samples of other songs, which is why accurate ownership metadata is so important.
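To make the royalty split concrete, here is a minimal Python sketch that divides a payout according to percentage shares stored as ownership metadata. The function name `split_royalties`, the share values, and the rule for handling leftover cents are all assumptions for illustration; real splits follow the contracts and collection society rules that apply to each work.

```python
from decimal import Decimal, ROUND_DOWN


def split_royalties(amount: Decimal, shares: dict[str, Decimal]) -> dict[str, Decimal]:
    """Split an amount among rights holders according to percentage shares."""
    if sum(shares.values()) != Decimal("100"):
        raise ValueError("ownership shares must add up to 100 percent")

    cent = Decimal("0.01")
    payouts = {
        holder: (amount * pct / Decimal("100")).quantize(cent, rounding=ROUND_DOWN)
        for holder, pct in shares.items()
    }
    # Rounding down can leave a few cents unallocated. In this sketch the
    # remainder goes to the holder with the largest share (an assumption).
    remainder = amount - sum(payouts.values())
    largest = max(shares, key=shares.get)
    payouts[largest] += remainder
    return payouts


# Illustrative split for a song with two songwriters and a sampled work.
shares = {
    "songwriter_a": Decimal("50"),
    "songwriter_b": Decimal("30"),
    "sample_owner": Decimal("20"),
}
payouts = split_royalties(Decimal("1000.01"), shares)
```

Using `Decimal` instead of floats avoids binary rounding errors on money, and the upfront check shows why incomplete or inconsistent ownership metadata blocks a payout: if the shares do not add up to 100 percent, the amount cannot be allocated.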

Companies such as Blòkur, Jaxsta, Exactuals, Trqk, and Verifi Media provide access to ownership metadata with the goal of managing and tracking changes to a song’s ownership rights over time and ensuring correct payouts for the rights holders.

4. Performance – Cultural Metadata

Performance or cultural metadata is produced by the environment or culture. Usually, this means users interacting with the song. The interaction is registered in the system and analyzed for patterns, categories, and associations. Such metadata includes likes, ratings, social media plays, streaming performance, chart positions, and so on.

The performance category can be divided into two parts:

- Consumption or Sales data deals with consumption and use of the item/track and usually needs to be acquired from partners. For example, Spotify shares data with distributors, distributors pass it down to labels, and so forth.

- Social or Audience data indicates how well a track or artist performs on a particular platform and who the audience is. It can be accessed through either first-party or third-party tools.

First-party tools are powerful but disconnected: you have to harmonize data from different platforms to get a full picture. They are also limited in scope, meaning that they cover only proprietary data. Third-party tools are more useful. They provide access to data across the market, including performance for artists you have to connect to. In this case, the data is already harmonized, but the level of detail is lower.
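Harmonizing first-party data essentially means mapping each platform’s export onto one shared schema and joining on a common identifier. The Python sketch below assumes two hypothetical exports with made-up column names and uses an ISRC-style recording code as the join key. The codes are placeholders, and real platform exports will look different.

```python
from collections import defaultdict

# Hypothetical exports; column names and ID formatting differ per platform.
platform_a_rows = [
    {"isrc": "XX-AAA-21-00001", "streams": 1200},
    {"isrc": "XX-AAA-21-00002", "streams": 300},
]
platform_b_rows = [
    {"ISRC": "xxaaa2100001", "play_count": 800},
]


def normalize_isrc(raw: str) -> str:
    """Strip hyphens and whitespace and upper-case the code so IDs compare equal."""
    return raw.replace("-", "").strip().upper()


def harmonize(sources):
    """Merge per-platform rows into {isrc: {platform: plays}}.

    `sources` maps a platform name to (rows, id_column, plays_column).
    """
    merged = defaultdict(dict)
    for platform, (rows, id_col, plays_col) in sources.items():
        for row in rows:
            merged[normalize_isrc(row[id_col])][platform] = row[plays_col]
    return dict(merged)


combined = harmonize({
    "platform_a": (platform_a_rows, "isrc", "streams"),
    "platform_b": (platform_b_rows, "ISRC", "play_count"),
})
total_plays = {isrc: sum(per_platform.values()) for isrc, per_platform in combined.items()}
```

Most of the work in practice sits in the normalization step: the same recording often appears with differently formatted identifiers or under different column names, and it only becomes one row once those are reconciled.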

Another way to acquire social data is through tracking solutions (movie syncs, radio, etc.) that produce somewhat original data. These can either be integrated with third-party solutions (radio tracking on Soundcharts/Chartmetric, for example) or operate as standalone tools (radio monitor, WARM, BDS tracker). This is still consumption data, but it is accessed by bypassing the entire data chain.

5. Proprietary Metadata

Proprietary data remains in the hands of the company and rarely gets disclosed. Some of the data used by recommender systems is proprietary. For example, a song’s similarity score is proprietary data that can be based on performance data. This type of data also includes insights from ad campaigns, sales, merch, ticketing, and so on.
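As an illustration of how a similarity score could be derived from performance data, the sketch below computes cosine similarity between songs based on per-listener play counts. This is a generic textbook technique chosen for the example, not the method of any particular company or recommender system, and the play counts are made up.

```python
import math


def cosine_similarity(a: dict[str, float], b: dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(value * b.get(key, 0.0) for key, value in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # no plays at all: treat as not similar
    return dot / (norm_a * norm_b)


# Made-up play counts per listener for three songs.
plays = {
    "song_x": {"listener_1": 3, "listener_2": 4},
    "song_y": {"listener_1": 3, "listener_2": 4},
    "song_z": {"listener_3": 5},
}

same_audience = cosine_similarity(plays["song_x"], plays["song_y"])
no_overlap = cosine_similarity(plays["song_x"], plays["song_z"])
```

Songs played by the same listeners in the same proportions score close to 1, while songs with no shared listeners score 0. Because the inputs are a company’s own consumption data, the resulting scores are proprietary as well.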

Some proprietary data belongs to more than one company: sales, merch, and ticket sales usually involve a number of parties.

Outlook

Today, processes in the music industry are rather one-dimensional when it comes to data. For instance, marketing budgets are often planned merely based on past performance of an artist’s recordings – so are multiples on the acquisitions of their rights.

Let’s look at the financial sector: In order to estimate the value of a company, one has to look at company-internal factors such as inventory, assets, or human resources as well as outside factors such as past performances, political situation, or market trends. Here we look at proprietary data (company assets), semi-proprietary data (performance), and public data (market trends). The art of connecting those to make accurate predictions will be the topic of future research on the Cyanite blog.

Cyanite library provides a range of descriptive metadata

Conclusion

Musical metadata is needed to manage large music libraries. We tried to review all metadata types in the industry, but these types can intersect and produce new kinds of metadata. This metadata can then be used to derive information, build knowledge, and deliver business insights, which constitute the layers of the Data Pyramid – a framework we presented earlier that helps make data-based decisions.

In 2021, every company should see itself as a data company. Future success is inherently dependent on how well you can connect your various data sources.

I want to integrate AI in my service as well – how can I get started?

Please contact us with any questions about our Cyanite AI via sales@cyanite.ai. You can also directly book a web session with Cyanite co-founder Markus here.

If you want to get a first feel for Cyanite’s technology, you can also register for our free web app to analyze music and try similarity searches without any coding.