
What is Music Prompt Search? ChatGPT for music?

Last updated on March 6th, 2025 at 02:14 pm

How Music Prompt Search Works & Why It’s Only Part of the Puzzle

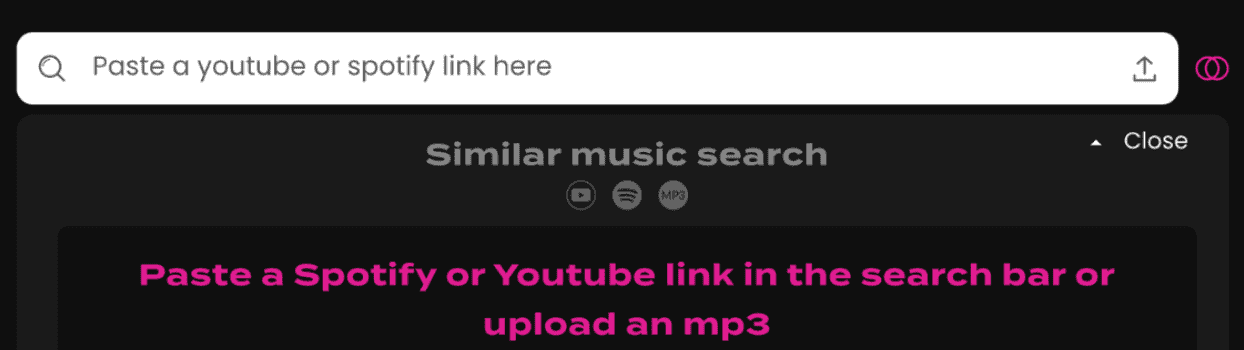

Alongside our Similarity Search, which recommends songs similar to one or more reference tracks, we’ve built an alternative to traditional keyword search. We call it Free Text Search: our prompt-based music search.

Imagine describing a song before you’ve even heard it:

“Dreamy, with soft piano, a subtle build-up, and a bittersweet undertone. Think rainy day reflection.”

This is the kind of prompt that Cyanite can turn into music suggestions – not based on genre or mood tags, but on the actual sound of the music.

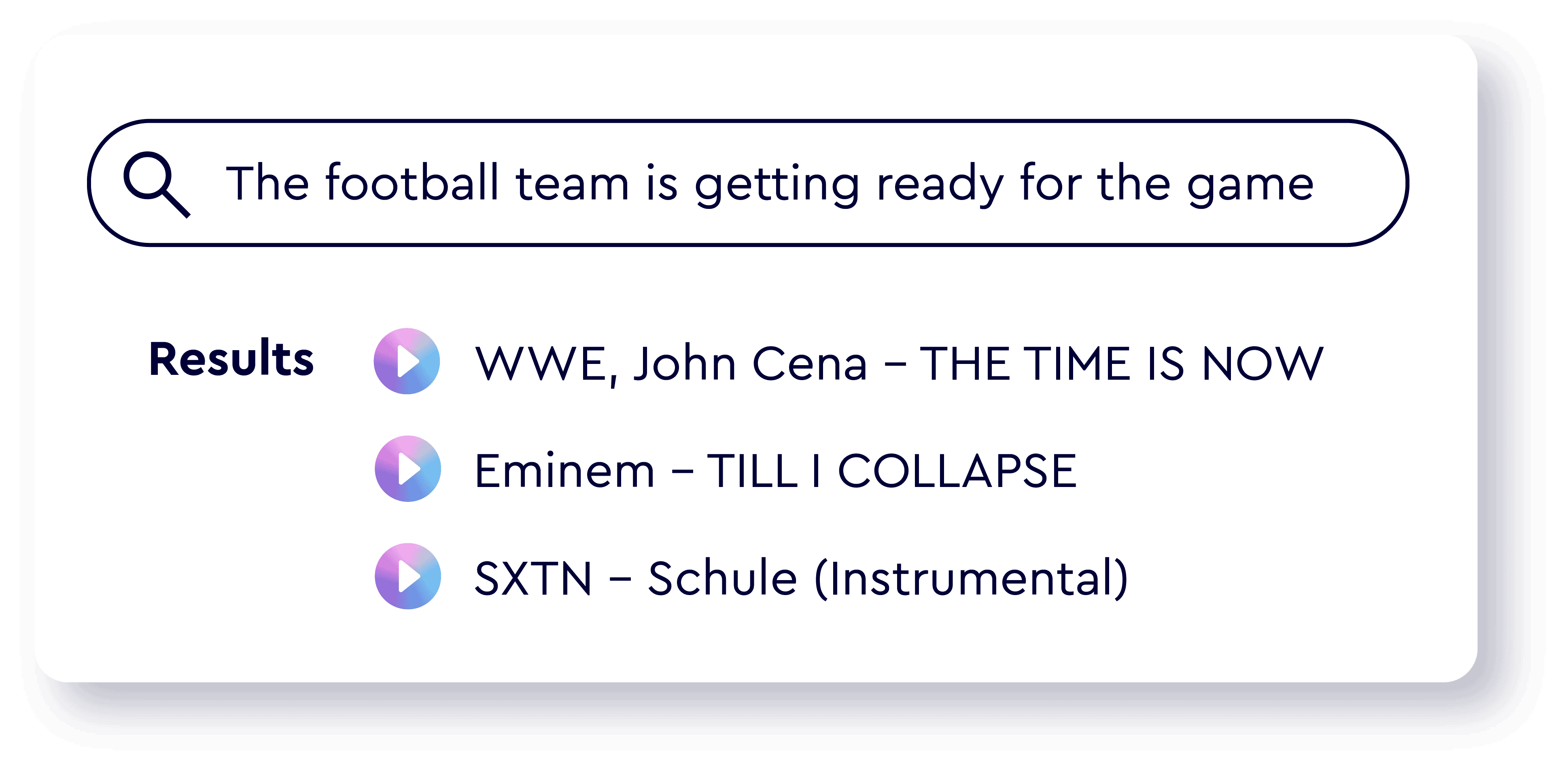

Music Prompt Search Example with Cyanite’s Free Text Search

What Is Music Prompt Search?

Prompt search allows you to enter a natural language description (e.g. “uplifting indie with driving percussion and a nostalgic feel”) and get back music that matches that idea sonically.

We developed this idea in 2021 and, in 2022, were the first to launch a music search based purely on text input. Since then, we’ve kept improving and refining this kind of AI-powered search so that it translates text into sound accurately. That way, you get the result closest to your prompt that your catalog allows.

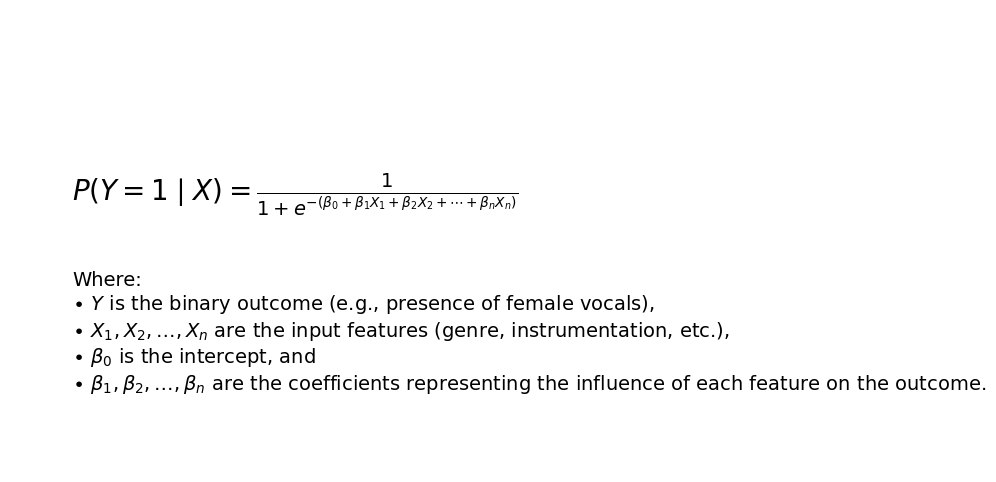

We don’t search for keywords that appear in your prompt. Instead, we map text directly to music: the system learns which text descriptions fit which songs. This is what we call Free Text Search.
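How might text be mapped to music directly? A common approach in the research literature is a shared embedding space: a text encoder and an audio encoder are trained so that a description and a track that fits it land close together, and retrieval ranks tracks by cosine similarity to the prompt. The article does not describe Cyanite’s architecture, so treat the sketch below as an illustration under that assumption only. The three-number vectors are made-up stand-ins for encoder outputs, and `rank_tracks` and the track IDs are hypothetical.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank_tracks(prompt_vec: list[float], track_vecs: dict[str, list[float]], k: int = 3) -> list[str]:
    """Return the k track IDs whose audio embeddings lie closest to the prompt embedding."""
    scored = [(tid, cosine(prompt_vec, vec)) for tid, vec in track_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [tid for tid, _ in scored[:k]]


# Hypothetical toy embeddings. A real system would get these from trained
# text and audio encoders that share one vector space.
catalog = {
    "rainy_piano": [0.9, 0.1, 0.2],
    "club_banger": [0.1, 0.9, 0.3],
    "ambient_strings": [0.7, 0.0, 0.6],
}
# Pretend text embedding of "dreamy, soft piano, bittersweet".
prompt = [0.8, 0.05, 0.3]

print(rank_tracks(prompt, catalog, k=2))
```

Nothing here looks at words or tags: the ranking depends only on where the prompt and the tracks sit in the shared vector space. That is the property the paragraph above describes.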

Built with ChatGPT? Not All Prompts Are Created Equal

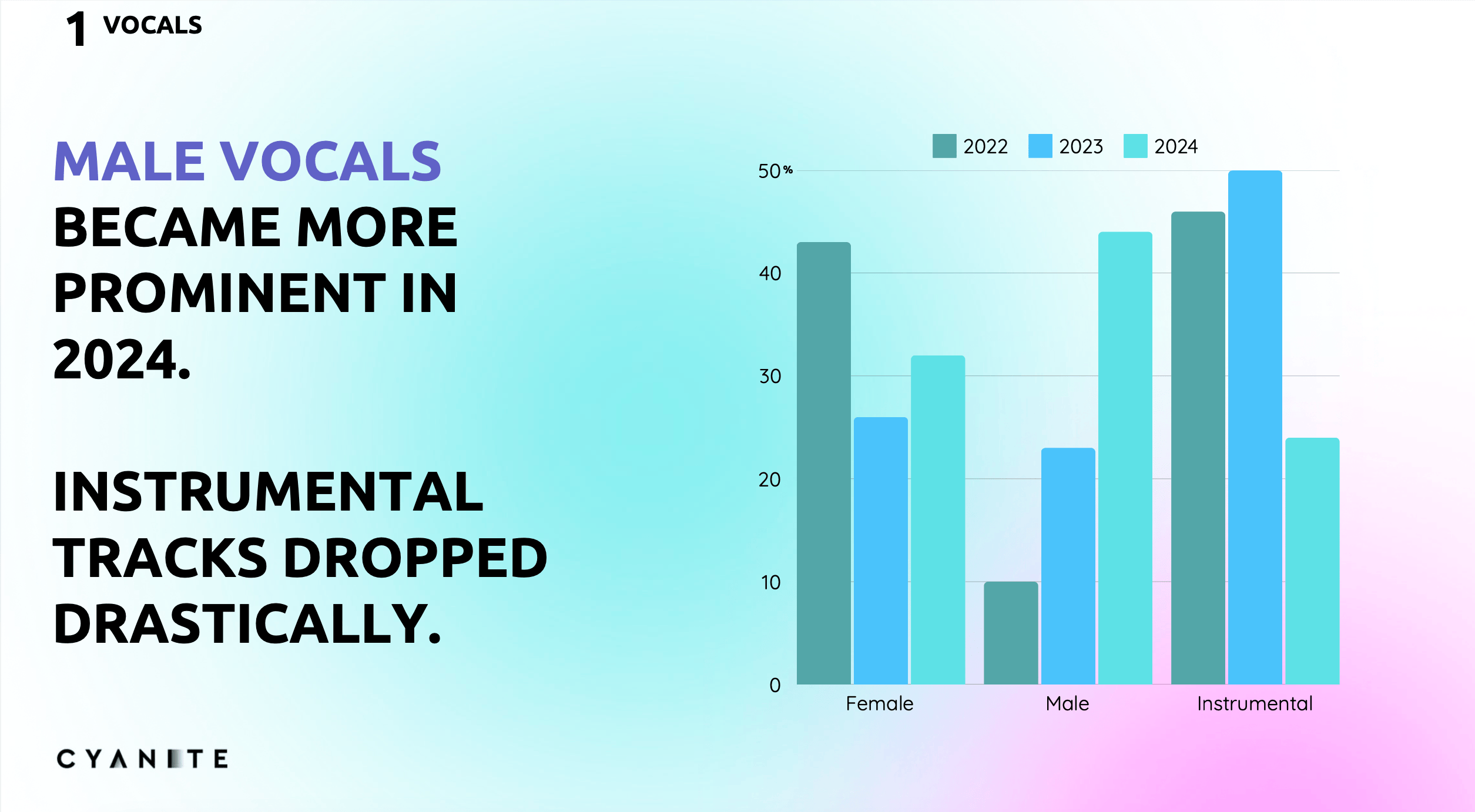

More recently, several companies have entered the field of prompt-based music search, using large language models like ChatGPT as a foundation. These models are strong at interpreting natural language, but they cannot understand music the way we do.

They turn the text input into tags and then search for those tags. In reality, these algorithms therefore work like a traditional keyword search: the language model only translates natural language prompts into keywords.
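For contrast, here is a toy version of the tag-based pipeline just described. The LLM step is replaced by a fixed word-to-tag lookup (`WORD_TO_TAG`, `prompt_to_tags`, and the catalog are all hypothetical). A real language model is far more flexible at this translation step, but its output still ends up as tags that are matched like keywords.

```python
# Hypothetical metadata: each track is described only by its tags.
TRACK_TAGS = {
    "rainy_piano": {"piano", "calm", "melancholic"},
    "club_banger": {"electronic", "energetic", "dance"},
    "ambient_strings": {"strings", "calm", "ambient"},
}

# Stand-in for the LLM step: a fixed word-to-tag lookup (hypothetical).
WORD_TO_TAG = {
    "piano": "piano",
    "soft": "calm",
    "dreamy": "ambient",
    "bittersweet": "melancholic",
}


def prompt_to_tags(prompt: str) -> set[str]:
    """Translate a free-text prompt into catalog tags; words without a tag are dropped."""
    words = {w.strip(".,!?").lower() for w in prompt.split()}
    return {WORD_TO_TAG[w] for w in words if w in WORD_TO_TAG}


def keyword_search(tags: set[str]) -> list[str]:
    """Classic keyword search: rank tracks by tag overlap, ties broken by track ID."""
    scored = [(len(tags & track_tags), tid) for tid, track_tags in TRACK_TAGS.items()]
    scored.sort(key=lambda s: (-s[0], s[1]))
    return [tid for score, tid in scored if score > 0]


prompt = "Dreamy, with soft piano, a subtle build-up, and a bittersweet undertone."
tags = prompt_to_tags(prompt)
print(sorted(tags))
print(keyword_search(tags))
```

With this prompt, "subtle build-up" never reaches the search because no tag captures it. The final order is decided entirely by tag overlap, not by how the tracks actually sound.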

When Prompt Search Shines

Prompt search is a game-changer when:

- You have a specific scene or mood in mind

- You’re working with briefs from film, games, or advertising

- You want to match the energy or emotional arc of a moment

This is ideal for music supervisors, marketers, and creative producers.

Note: Our Free Text Search just got better!

With our latest update, Free Text Search is now:

✅ Multilingual – use prompts in nearly any language

✅ Culturally aware – understand references like “Harry Potter” or “Mario Kart”

✅ Significantly more accurate and intuitive

It’s available free for all API users on V7 and for all web app accounts created after March 15. Older accounts can request access via email.

The Limits of Prompt-Based Music Search

Prompt search is powerful – but it’s not a silver bullet.

There’s still the blank page problem: it’s hard to put your vision of a song into words, and if you don’t know what you’re looking for, prompts can become paralyzing. How you write your prompts also really matters! To make sure your recommendations aren’t limited by your prompting skills, read our guide on how to write the perfect prompt every time.

Where prompt-based search reaches its limits, structured metadata and keyword search shine. Sometimes you need to explore music by filtering, tagging, and iterating.

At Cyanite, we see prompt search and tagging not as opposing systems, but as complementary tools.

In short:


- Prompt Search helps you describe what you hear in your mind.

- Tag-Based Filtering helps you discover what you didn’t know you needed.
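Tag-based exploration can be pictured as faceted filtering. Each selected tag narrows the catalog, and counts of the remaining tags show which refinements are still available, which is how you come across options you didn’t think to ask for. This is a minimal sketch with a hypothetical catalog and hypothetical helper names, not Cyanite’s filtering implementation.

```python
from collections import Counter

# Hypothetical catalog: track ID -> set of tags.
CATALOG = {
    "t1": {"indie", "uplifting", "drums"},
    "t2": {"indie", "nostalgic", "guitar"},
    "t3": {"electronic", "uplifting", "drums"},
    "t4": {"indie", "uplifting", "nostalgic", "drums"},
}


def apply_filters(catalog: dict[str, set[str]], required: set[str]) -> dict[str, set[str]]:
    """Keep only tracks that carry every required tag."""
    return {tid: tags for tid, tags in catalog.items() if required <= tags}


def facet_counts(catalog: dict[str, set[str]], required: set[str]) -> Counter:
    """Count the tags on the remaining tracks, hiding tags that are already selected."""
    counts = Counter(tag for tags in catalog.values() for tag in tags)
    for tag in required:
        counts.pop(tag, None)
    return counts


selected = {"indie"}
step1 = apply_filters(CATALOG, selected)
print(sorted(step1))
print(facet_counts(step1, selected).most_common())  # candidate refinements

selected.add("uplifting")  # the user picks a refinement and iterates
step2 = apply_filters(CATALOG, selected)
print(sorted(step2))
```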

Why We Build Our Own Models

We chose to develop every model in-house, not only for data security and IP protection, but because music deserves dedicated algorithms.

Few things are as complex and deep as the world of music. General-purpose AI doesn’t understand the nuance of tempo shifts, the subtle timbre of analog synths, or the emotional trajectory of a song.

Our models are trained on the sound itself. That means:


- More precise results

- Higher musical integrity

- More confidence when recommending or licensing tracks

If you want to learn more about how our models work, check out this blog article and interview with our CAIO, Roman Gebhardt.

A Smarter Way to Find Music

We’ve spent nearly a decade building toward a vision where music discovery becomes more human, more intuitive, and more creative. Our goal is to help people who work with music make more data-backed decisions when creating music moments, while giving artists of any size an equal chance, as long as they fit your vision. Our AI does not filter or sort results by anything other than musical similarity to the input.

That is, of course, unless you pre-filter the search results. To learn more about pre- and post-filtering and why it matters, check out the first edition of our Newsletter for Businesses, which we launched earlier this year.
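The difference between pre- and post-filtering can be sketched with a toy ranking that, as described above, sorts by nothing but similarity. The scores, the `vocals` field, and the function names are hypothetical; the point is only the order of operations. Pre-filtering restricts the candidates first and then ranks them. Post-filtering ranks the full catalog, keeps the top k, and filters afterwards, so it can return fewer results than requested.

```python
# Hypothetical similarity of each track to a reference (higher = more similar),
# plus one metadata field to filter on.
TRACKS = [
    {"id": "a", "similarity": 0.95, "vocals": True},
    {"id": "b", "similarity": 0.91, "vocals": True},
    {"id": "c", "similarity": 0.88, "vocals": False},
    {"id": "d", "similarity": 0.70, "vocals": False},
    {"id": "e", "similarity": 0.65, "vocals": False},
]


def rank(tracks: list[dict], k: int) -> list[dict]:
    """Sort purely by similarity and keep the top k."""
    return sorted(tracks, key=lambda t: t["similarity"], reverse=True)[:k]


def is_instrumental(track: dict) -> bool:
    """Example filter: keep tracks without vocals."""
    return not track["vocals"]


def pre_filter_search(tracks: list[dict], keep, k: int) -> list[str]:
    """Filter first, then rank: returns up to k matching tracks."""
    return [t["id"] for t in rank([t for t in tracks if keep(t)], k)]


def post_filter_search(tracks: list[dict], keep, k: int) -> list[str]:
    """Rank first, then filter the top k: may return fewer than k tracks."""
    return [t["id"] for t in rank(tracks, k) if keep(t)]


print(pre_filter_search(TRACKS, is_instrumental, k=3))
print(post_filter_search(TRACKS, is_instrumental, k=3))
```

Here the pre-filtered search returns three instrumental tracks (`c`, `d`, `e`), while the post-filtered one returns only `c`, because the two most similar tracks have vocals and are removed after ranking.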

We think prompt search is definitely part of the future of music discovery, but it’s only one piece of the puzzle. And we’re dedicated to making sure that every piece of that puzzle fits and pushes the music industry toward a bright and fair future.

If you want to share your thoughts or feedback on how we can achieve this, talk to us!