Last updated on June 28th, 2023 at 02:25 pm

Music recommendation systems can significantly improve the listening and search experience of a music library or music application. With ever-growing access to digital content, algorithmic recommender systems have become indispensable. In the music industry, there is simply too much music: users cannot effectively navigate tens of millions of songs on their own. Because the need for satisfying music recommendations is so high, the field of music recommendation systems (MRS) is developing at lightning speed. On top of that, the popularity of streaming services such as Spotify and Pandora shows that people like to be guided in their music choices and to discover new tracks with the help of algorithms.

Hitting the musical spot for their users is the goal of every music service. However, there are many approaches and philosophies to music recommendation, with very different implications.

In this article, we unravel the specifics of music recommendation systems. We look at the different approaches to music recommendation and explain how they work. We also discuss approaches to Music Information Retrieval, a field concerned with automatically extracting data from music.

If you want to upgrade a music library or build a music application, keep reading to find out which recommendation system works best for your needs.

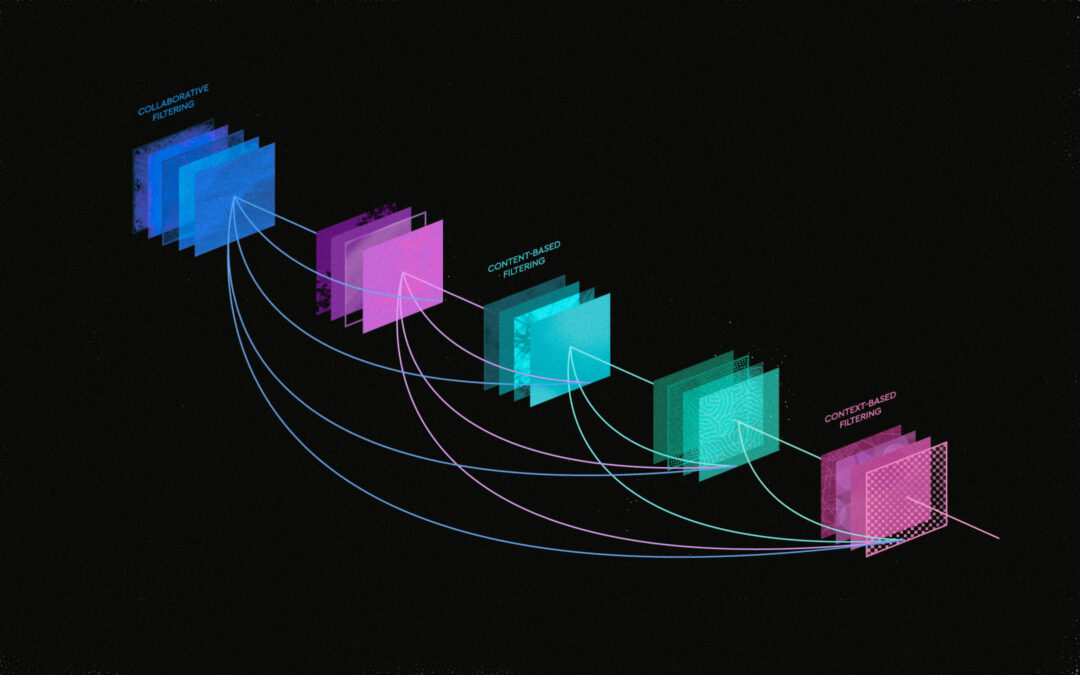

Approaches to Music Recommendation

We focus on three approaches to music recommender systems: Collaborative Filtering, Content-based Filtering, and the Context-aware Approach.

1. Collaborative Filtering

The collaborative filtering approach predicts what users might like based on their similarity to other users. To determine which users are similar, the algorithm collects users’ historical activity, such as their ratings of music tracks, their likes, or how long they listened to a track.

This approach mirrors the days when music was recommended within tight circles of friends with similar tastes. Because only user information is relevant, collaborative filtering doesn’t take into account any information about the music or sound itself. Instead, it analyzes user preferences and behavior and, by matching one user to another, predicts how likely a user is to like a song. For example, if User A and User B liked the same songs in the past, their preferences are likely to match. In the future, User A might then be recommended songs that User B is listening to, based on the similarity established earlier.
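To make the User A / User B example concrete, here is a minimal sketch of user-based collaborative filtering. It assumes a small, hypothetical in-memory rating table (`RATINGS`) and uses cosine similarity between users’ rating vectors as the similarity measure; real systems work with much larger sparse matrices and often use other measures or matrix factorization.

```python
from math import sqrt

# Hypothetical toy data: user -> {song: rating on a 1-5 scale}.
RATINGS = {
    "user_a": {"song_1": 5, "song_2": 4},
    "user_b": {"song_1": 5, "song_2": 5, "song_3": 4},
    "user_c": {"song_4": 5, "song_5": 3},
}


def cosine_similarity(r1: dict, r2: dict) -> float:
    """Cosine similarity of two users' ratings; unrated songs count as 0."""
    dot = sum(r1[s] * r2[s] for s in set(r1) & set(r2))
    norm1 = sqrt(sum(v * v for v in r1.values()))
    norm2 = sqrt(sum(v * v for v in r2.values()))
    if norm1 == 0 or norm2 == 0:
        return 0.0
    return dot / (norm1 * norm2)


def recommend_user_based(target: str, ratings: dict, k: int = 1) -> list:
    """Recommend songs the target hasn't rated, scored by the k most similar users."""
    others = [u for u in ratings if u != target]
    # Rank the other users by similarity to the target and keep the top k.
    neighbours = sorted(
        others,
        key=lambda u: cosine_similarity(ratings[target], ratings[u]),
        reverse=True,
    )[:k]
    scores = {}
    for u in neighbours:
        sim = cosine_similarity(ratings[target], ratings[u])
        for song, rating in ratings[u].items():
            if song not in ratings[target]:
                # Weight each neighbour's rating by how similar that neighbour is.
                scores[song] = scores.get(song, 0.0) + sim * rating
    return sorted(scores, key=scores.get, reverse=True)


print(recommend_user_based("user_a", RATINGS))  # ['song_3']
```

User A and User B agree on the two songs they share, so User B’s unheard `song_3` is recommended, while User C, who shares nothing with User A, contributes nothing.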

The most prominent problem of collaborative filtering is the cold start. When the system doesn’t have enough information yet, it cannot provide accurate recommendations. This applies to new users, whose listening behavior has not been tracked yet, as well as to new songs and artists, for which the system has to wait until users interact with them.

Collaborative filtering comes in several variants: user-based vs item-based filtering, and explicit vs implicit ratings.


Collaborative Filtering Approaches

It is common to divide collaborative filtering into two types – user-based and item-based filtering:

- User-based filtering establishes the similarity between users: User A is similar to User B, so they might like the same music.

- Item-based filtering establishes the similarity between items based on how users interacted with them: Item A can be considered similar to Item B because the same users rated both of them 5 out of 10.
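Item-based filtering can be sketched the same way by comparing songs instead of users. In this minimal sketch, each song is represented by the ratings it received from each user (a hypothetical toy table on a 1-10 scale), and songs that the same users rated alike come out as similar under cosine similarity.

```python
from math import sqrt

# Hypothetical toy data: user -> {song: rating on a 1-10 scale}.
RATINGS = {
    "user_a": {"song_1": 5, "song_2": 5, "song_3": 9},
    "user_b": {"song_1": 5, "song_2": 5},
    "user_c": {"song_3": 8, "song_4": 2},
}


def item_vectors(ratings: dict) -> dict:
    """Transpose user -> {song: rating} into song -> {user: rating}."""
    items = {}
    for user, songs in ratings.items():
        for song, rating in songs.items():
            items.setdefault(song, {})[user] = rating
    return items


def cosine(v1: dict, v2: dict) -> float:
    """Cosine similarity of two sparse vectors stored as dicts."""
    dot = sum(v1[k] * v2[k] for k in set(v1) & set(v2))
    n1 = sqrt(sum(x * x for x in v1.values()))
    n2 = sqrt(sum(x * x for x in v2.values()))
    return dot / (n1 * n2) if n1 and n2 else 0.0


def most_similar_items(song: str, ratings: dict, n: int = 2) -> list:
    """Return the n songs whose rating patterns are closest to `song`."""
    items = item_vectors(ratings)
    others = [s for s in items if s != song]
    return sorted(others, key=lambda s: cosine(items[song], items[s]), reverse=True)[:n]


print(most_similar_items("song_1", RATINGS))  # ['song_2', 'song_3']
```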

Another distinction used in collaborative filtering is between explicit and implicit ratings:

- Explicit ratings are feedback that users give deliberately, such as likes or shares. However, not every item gets rated, and users often interact with an item without rating it. In that case, implicit ratings can be used.

- Implicit ratings are inferred from user activity. If a user never rated a song but listened to it 20 times, the system assumes that the user likes it.
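One way to combine the two kinds of feedback is to prefer an explicit rating when one exists and otherwise infer a preference from play counts. The sketch below does exactly that; the `LIKE_THRESHOLD` of 20 plays (taken from the example above) and the linear confidence value are hypothetical choices for illustration, not a standard.

```python
from typing import Optional, Tuple

# Hypothetical threshold from the example: 20 plays without a rating => "likes it".
LIKE_THRESHOLD = 20


def effective_rating(explicit_like: Optional[bool], play_count: int) -> Tuple[int, float]:
    """Return (preference, confidence) for one user-song pair.

    preference: 1 = assumed to like the song, 0 = not (yet) assumed to.
    confidence: how much we trust that preference, from 0.0 to 1.0.
    """
    if explicit_like is not None:
        # Explicit feedback exists, so it wins and is fully trusted.
        return (1 if explicit_like else 0), 1.0
    # No explicit rating: infer the preference from listening activity.
    preference = 1 if play_count >= LIKE_THRESHOLD else 0
    # Confidence grows linearly with plays and saturates at the threshold.
    confidence = min(play_count / LIKE_THRESHOLD, 1.0)
    return preference, confidence


print(effective_rating(None, 20))  # (1, 1.0)
print(effective_rating(None, 5))   # (0, 0.25)
```

Keeping a separate confidence value lets a recommender treat a song played five times as weak evidence rather than discarding it entirely.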

2. Content-based Filtering

Content-based filtering bases its recommendations on metadata attached to the items, such as descriptions or keywords (tags). Metadata characterizes and describes an item. When a user likes an item, the system infers that this user is likely to also like other items whose metadata is similar to that item’s.
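A minimal content-based sketch, assuming each song carries a set of descriptive tags: the other songs are ranked by the Jaccard similarity (shared tags divided by all tags) between their tags and those of a song the user liked. The catalog and tag names are hypothetical.

```python
# Hypothetical catalog: song -> set of descriptive tags.
CATALOG = {
    "song_1": {"rock", "energetic", "guitar"},
    "song_2": {"rock", "energetic", "drums"},
    "song_3": {"ambient", "calm", "piano"},
    "song_4": {"rock", "calm", "piano"},
}


def jaccard(a: set, b: set) -> float:
    """Shared tags divided by all tags (0.0 = nothing in common, 1.0 = identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def recommend_by_tags(liked: str, catalog: dict, n: int = 2) -> list:
    """Rank the other songs by how similar their tags are to the liked song's tags."""
    candidates = [s for s in catalog if s != liked]
    return sorted(candidates, key=lambda s: jaccard(catalog[liked], catalog[s]), reverse=True)[:n]


print(recommend_by_tags("song_1", CATALOG))  # ['song_2', 'song_4']
```

Note that no user data is involved, so a brand-new song can be recommended as soon as it is tagged, which is why content-based filtering does not suffer from the cold start for new items.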

There are three common ways to assign metadata to content items: a qualitative, a quantitative, and an automated approach.

First, in the qualitative approach, library editors characterize the content professionally.

Second, in the quantitative or crowdsourced approach, a community of people assigns metadata to the content manually. The more people participate, the more accurate and the less subjectively biased the metadata becomes.

Third, in the automated approach, algorithmic systems characterize the content automatically.
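For the crowdsourced approach, one simple way to turn many individual annotations into a single set of tags is an agreement threshold: keep a tag only if a minimum share of annotators assigned it. The sketch below assumes a 50% threshold, which is an arbitrary, hypothetical default.

```python
from collections import Counter


def aggregate_tags(annotations: list, min_agreement: float = 0.5) -> set:
    """Merge crowdsourced tag sets, keeping tags assigned by >= min_agreement of annotators.

    annotations: one set of tags per annotator, all for the same track.
    """
    if not annotations:
        return set()
    # Count how many annotators used each tag (sets prevent double counting).
    counts = Counter(tag for tags in annotations for tag in tags)
    return {tag for tag, c in counts.items() if c / len(annotations) >= min_agreement}


votes = [{"happy", "upbeat"}, {"happy"}, {"happy", "sad"}, {"upbeat"}]
print(sorted(aggregate_tags(votes)))  # ['happy', 'upbeat']
```

The single "sad" vote is dropped as an outlier; the more annotators there are, the less any one person’s subjective choice affects the result.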

Metadata

Musical metadata is information attached to the audio file. It can be objectively factual or descriptive (based on subjective perception). In the music industry, descriptive metadata is also often referred to as creative metadata.

For example, artist, album, and year of publication are factual metadata. Descriptive metadata describes the actual content of a piece of music, e.g., its mood, energy, and genre. Understanding the types of metadata and organizing the library’s taxonomy consistently is very important, because the content-based recommender uses this metadata to pick the music. If the metadata is wrong, the recommender might pull up the wrong track. You can read more about how to properly structure a music catalog in our free taxonomy paper. For professional musicians submitting music, this guide on editing music metadata can be helpful.
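A consistent taxonomy can be enforced in code by separating factual from descriptive fields and checking descriptive values against a controlled vocabulary. The `TrackMetadata` class, the vocabularies, and the 0-1 energy scale below are hypothetical examples, not Cyanite’s taxonomy.

```python
from dataclasses import dataclass, field

# Hypothetical controlled vocabularies for descriptive (creative) metadata.
ALLOWED_GENRES = {"rock", "pop", "jazz", "electronic"}
ALLOWED_MOODS = {"happy", "sad", "calm", "energetic"}


@dataclass
class TrackMetadata:
    # Factual metadata
    artist: str
    album: str
    year: int
    # Descriptive (creative) metadata
    genres: set = field(default_factory=set)
    moods: set = field(default_factory=set)
    energy: float = 0.0  # assumed scale: 0.0 (calm) to 1.0 (very energetic)

    def validation_errors(self) -> list:
        """List values that break the taxonomy, so they can be fixed before indexing."""
        errors = [f"unknown genre: {g}" for g in sorted(self.genres - ALLOWED_GENRES)]
        errors += [f"unknown mood: {m}" for m in sorted(self.moods - ALLOWED_MOODS)]
        if not 0.0 <= self.energy <= 1.0:
            errors.append(f"energy out of range: {self.energy}")
        return errors


track = TrackMetadata("Some Artist", "Some Album", 2020, {"rok"}, {"happy"}, 1.5)
print(track.validation_errors())  # ['unknown genre: rok', 'energy out of range: 1.5']
```

Catching a typo such as "rok" at ingestion time prevents exactly the case described above, where wrong metadata makes the recommender pull up the wrong track.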


Content-based recommender systems can use both factual and descriptive metadata or focus on only one type. They receive a lot of attention because they allow music to be evaluated objectively and can increase access to “long-tail” music, i.e., less popular tracks outside the mainstream. They can also enhance the search experience and inspire many new ways of discovering and interacting with music.

The field concerned with extracting descriptive metadata from music is called Music Information Retrieval (MIR). More on that later in the article.

3. Context-aware Recommendation Approach

Context has recently become popular in recommender systems, and context-aware recommendation is still a relatively new and developing field. Context includes the user’s situation, activity, and circumstances, which content-based and collaborative filtering systems don’t take into account but which might influence music choice. Recent research by the Technical University of Berlin shows that 86% of music choices are influenced by the listener’s context.

Context can be environment-related or user-related.

- Environment-related Context

In the past, recommender systems were developed that linked the user’s geographical location to music. For example, when visiting Venice, you could listen to a Vivaldi concert; when walking the streets of New York, you could blast Billy Joel’s “New York State of Mind”. Emotion-indicating tags and knowledge about musicians were used to recommend music that fits a geographical place.

- User-related Context

Whether you are walking or running may depend on the time of day and your plans, and whether you are sad or happy may depend on what happened in your personal life. These circumstances make up the user-related context. Being alone or in company may also significantly influence music choice. For example, when working out, you might want to listen to more energetic music than your usual listening habits and musical preferences would suggest. A good music recommendation system takes this into account.
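As a minimal sketch of user-related context, assume each candidate song already has a preference score from collaborative or content-based filtering and an energy value between 0 and 1. The function below re-ranks the candidates by blending that score with how well the song’s energy fits a target for the current activity; the activity names, target values, and 50/50 weighting are all hypothetical.

```python
# Hypothetical target energy (0.0-1.0) for a few user activities.
ACTIVITY_TARGET_ENERGY = {"workout": 0.9, "working": 0.5, "relaxing": 0.2}


def rerank_for_context(candidates: list, activity: str, context_weight: float = 0.5) -> list:
    """Re-rank (song, base_score, energy) tuples for the user's current activity.

    base_score: preference score (0.0-1.0) from collaborative or content-based filtering.
    energy: the song's descriptive energy value (0.0-1.0).
    """
    target = ACTIVITY_TARGET_ENERGY.get(activity)
    if target is None:
        # Unknown context: keep the context-free ranking.
        ranked = sorted(candidates, key=lambda c: c[1], reverse=True)
    else:
        def score(c):
            _, base, energy = c
            context_fit = 1.0 - abs(energy - target)  # 1.0 = perfect energy match
            return (1 - context_weight) * base + context_weight * context_fit

        ranked = sorted(candidates, key=score, reverse=True)
    return [song for song, _, _ in ranked]


songs = [("ballad", 0.9, 0.1), ("anthem", 0.6, 0.95)]
print(rerank_for_context(songs, "workout"))   # ['anthem', 'ballad']
print(rerank_for_context(songs, "relaxing"))  # ['ballad', 'anthem']
```

The user normally prefers the ballad, but during a workout the more energetic anthem moves to the top, matching the example above.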

Music Information Retrieval

The research field concerned with automatically extracting creative metadata from audio is called Music Information Retrieval (MIR). MIR is an interdisciplinary field combining digital signal processing, machine learning, and artificial intelligence with musicology. In music analysis, its focus is broad, ranging from BPM or key detection, through the analysis of higher-level information such as automatic genre or mood classification, to state-of-the-art approaches like automatic full-text song captioning. It also covers research on the similarity between pieces of music audio and, in line with that, search algorithms for music and automatic music generation.
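BPM detection is one of the lower-level MIR tasks mentioned above. A classic textbook approach is to autocorrelate an onset-strength envelope and pick the lag, within a plausible tempo range, at which the signal best matches a shifted copy of itself. The sketch assumes the onset envelope has already been computed; it illustrates the idea only and is not how any particular product, including Cyanite, detects tempo.

```python
def estimate_bpm(onset_envelope: list, frame_rate: float,
                 min_bpm: float = 60.0, max_bpm: float = 200.0) -> float:
    """Estimate tempo from an onset-strength envelope via autocorrelation.

    onset_envelope: onset strength per analysis frame (computed beforehand).
    frame_rate: envelope frames per second.
    """
    n = len(onset_envelope)
    mean = sum(onset_envelope) / n
    x = [v - mean for v in onset_envelope]  # remove the DC offset
    # Convert the allowed tempo range into a range of lags (in frames).
    min_lag = max(1, int(frame_rate * 60.0 / max_bpm))
    max_lag = min(n - 1, int(frame_rate * 60.0 / min_bpm))
    if min_lag > max_lag:
        raise ValueError("envelope too short for the requested tempo range")
    best_lag, best_corr = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        # Similarity between the envelope and a copy shifted by `lag` frames.
        corr = sum(x[i] * x[i + lag] for i in range(n - lag))
        if corr > best_corr:
            best_lag, best_corr = lag, corr
    return 60.0 * frame_rate / best_lag


# Synthetic test signal: one onset every 0.5 s at 100 frames per second (120 BPM).
envelope = [1.0 if i % 50 == 0 else 0.0 for i in range(1000)]
print(estimate_bpm(envelope, frame_rate=100))  # 120.0
```

Autocorrelation-based estimates are prone to octave errors (for example, confusing 60 and 120 BPM, since both lags line up with the beats), which real systems handle with additional heuristics; libraries such as librosa offer more robust tempo estimation.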

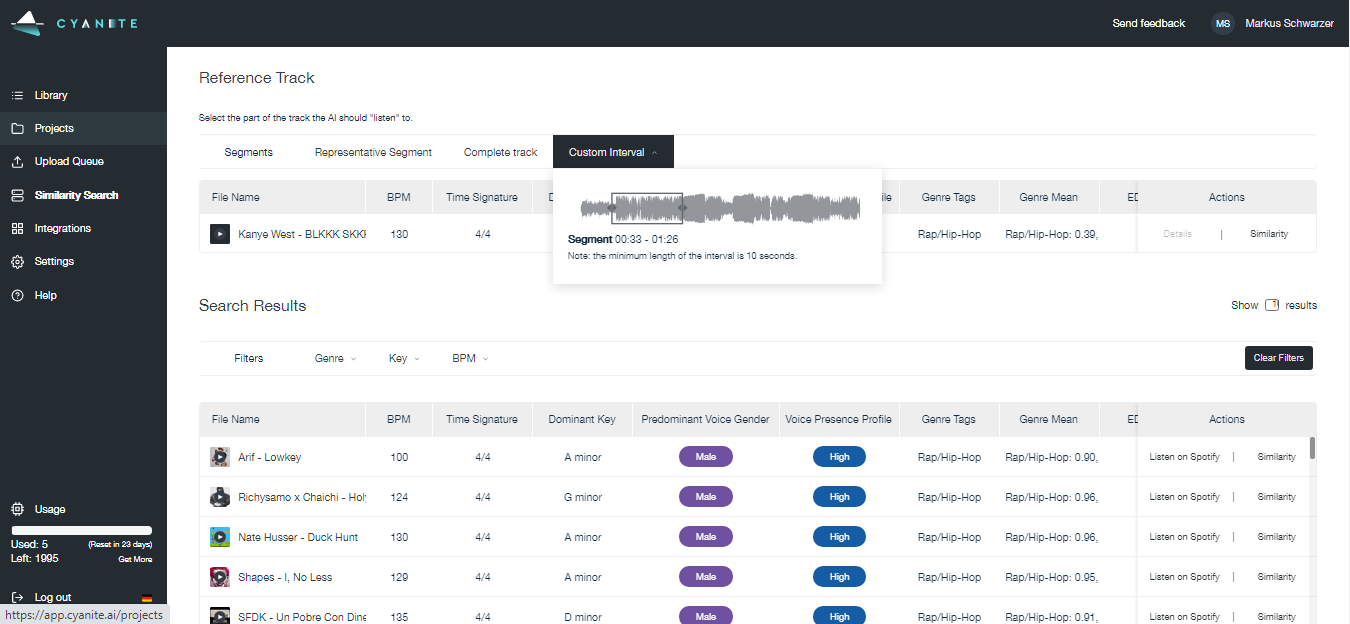

At Cyanite, we use a combination of Music Information Retrieval methods. For example, we train various artificial neural network architectures on an existing dataset to predict the genre, mood, and other features of a song. More on that in this article on how to analyze music with neural networks. Our Similarity Search takes a reference track and returns a list of matching songs, drawing on the audio, metadata, and other relevant information from the audio files. Similarity Search can also be used to determine and manage the overall character of a library.
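Similarity search is commonly implemented as nearest-neighbor search over feature vectors (embeddings). The sketch below ranks tracks by cosine similarity to a reference track’s vector. The embeddings are made-up three-dimensional toy values; the code illustrates the general technique only and is not Cyanite’s Similarity Search implementation or API.

```python
from math import sqrt

# Hypothetical embeddings (made-up toy values); a real system would derive
# much longer vectors from the audio, e.g. with a neural network.
EMBEDDINGS = {
    "reference": [0.9, 0.1, 0.3],
    "track_a": [0.8, 0.2, 0.35],
    "track_b": [0.1, 0.9, 0.0],
    "track_c": [0.7, 0.0, 0.5],
}


def cosine(u: list, v: list) -> float:
    """Cosine similarity of two dense vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (sqrt(sum(a * a for a in u)) * sqrt(sum(b * b for b in v)))


def similarity_search(reference_id: str, embeddings: dict, top_k: int = 2) -> list:
    """Return the top_k tracks closest to the reference track (brute-force search)."""
    ref = embeddings[reference_id]
    scored = [(tid, cosine(ref, vec)) for tid, vec in embeddings.items() if tid != reference_id]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [tid for tid, _ in scored[:top_k]]


print(similarity_search("reference", EMBEDDINGS))  # ['track_a', 'track_c']
```

Brute-force comparison is fine for a small library; for large catalogs, approximate nearest-neighbor indexes are the usual choice.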

Cyanite AI has found its best applications in music libraries aimed at music professionals, DJs, artists, and brands, which represent the business segment of the industry.


Conclusion

The choice of a music recommendation approach depends heavily on your needs and the data you have available. An overarching trend is a hybrid approach that combines features of collaborative filtering, content-based filtering, and context-aware recommendation. However, all of these fields are constantly developing, and ongoing innovation makes each approach distinct. What works for one music library might not apply to another.
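A simple way to build a hybrid is a weighted blend of the scores each approach produces for a song. In this sketch, the weights are hypothetical, and a missing score (for example, no collaborative filtering data for a brand-new song, i.e., the cold start) simply drops out, with its weight redistributed over the remaining signals.

```python
def hybrid_score(cf, content, context, weights=(0.5, 0.3, 0.2)) -> float:
    """Weighted blend of one song's scores (0.0-1.0) from three recommenders.

    Pass None for a missing score, e.g. no collaborative filtering data for a
    new song; its weight is then spread over the remaining scores.
    """
    pairs = [(s, w) for s, w in zip((cf, content, context), weights) if s is not None]
    total_weight = sum(w for _, w in pairs)
    if total_weight == 0:
        return 0.0  # no signal at all
    return sum(s * w for s, w in pairs) / total_weight


print(hybrid_score(0.9, 0.4, 0.7))   # all three signals available
print(hybrid_score(None, 0.8, 0.5))  # cold-start song: about 0.68
```

A linear blend is only one option; hybrids can also switch between approaches or feed the output of one model into another, and the weights would normally be tuned on real listening data.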

Common challenges in the field are access to sufficiently large datasets and understanding how different musical factors influence people’s perception of music. More on that soon on the Cyanite blog!

I want to integrate AI in my service as well – how can I get started?

Please contact us with any questions about our Cyanite AI via sales@cyanite.ai. You can also directly book a web session with Cyanite co-founder Markus here.

If you want to get a first feel for Cyanite’s technology, you can also register for our free web app to analyze music and try similarity searches without any coding.

If you are interested in going deeper into music recommender systems, we highly recommend the following reads:

Current challenges and visions in music recommender systems research