Our CEO Markus Schwarzer has published a guest post on UK-based music industry medium Hypebot.

In this guest post, our CEO Markus elaborates on how AI can be used to resurface, reuse, and monetize long-forgotten music, addressing concerns about its impact on the music industry. By leveraging AI-driven curation and tagging capabilities, music catalog owners can extract greater value from their collections, enabling faster search, diverse curation, and the discovery of hidden music, while still protecting artists and intellectual property rights.

You can read the full guest post below or head over to Hypebot via this link.

by Markus Schwarzer, CEO of Cyanite

AI-induced anxiety is ever-growing.

Whether it’s the fear that machines will evolve capabilities beyond their coders’ control, or the more surreal case of a chatbot urging a journalist to leave his wife, paranoia that artificial intelligence is getting too big for its boots is building. One oft-cited concern, voiced in an open letter published by the Future of Life Institute and signed by AI experts and researchers calling for a pause in AI development, is whether, alongside mundane donkeywork, we risk automating more creative human endeavors.

It’s a question being raised in recording studios and music label boardrooms. Will AI begin replacing flesh and blood artists, generating music at the touch of a button?

While some may discount these anxieties as irrational and accuse AI skeptics of being dinosaurs who are failing to embrace the modern world, the current developments must be taken seriously.

AI poses a potential threat to the livelihood of artists, and in the absence of new copyright laws that specifically address the technology, the music industry will need to find ways to protect its artists.

We all remember when AI-generated tracks imitating the voices of The Weeknd and Drake hit streaming services and went viral. Their presence on streaming services was short-lived, but it’s a very real example of how AI can potentially destabilise the livelihood of artists. Universal Music Group quickly put out a statement asking the music industry “which side of history all stakeholders in the music ecosystem want to be on: the side of artists, fans and human creative expression, or on the side of deep fakes, fraud and denying artists their due compensation.”


However, there are ways that AI can deliver real value to the industry – and specifically to the owners of large music catalogues. Catalogue owners often struggle with how to extract the maximum value out of the human-created music they’ve already got.

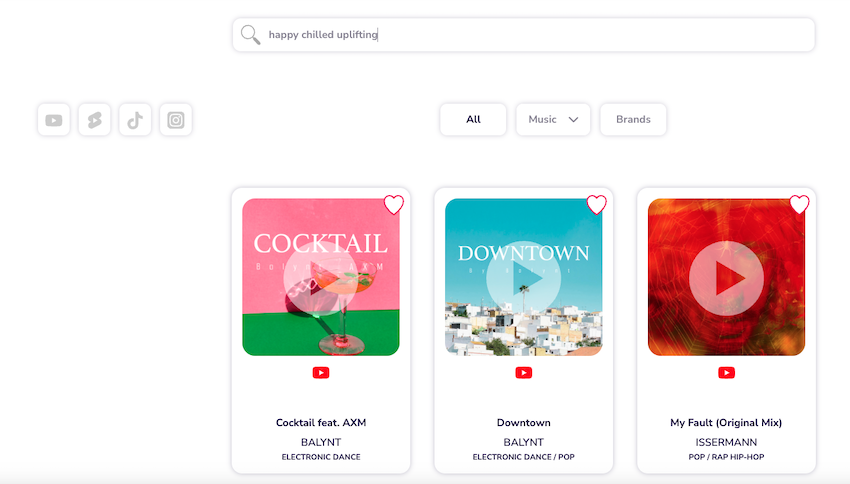

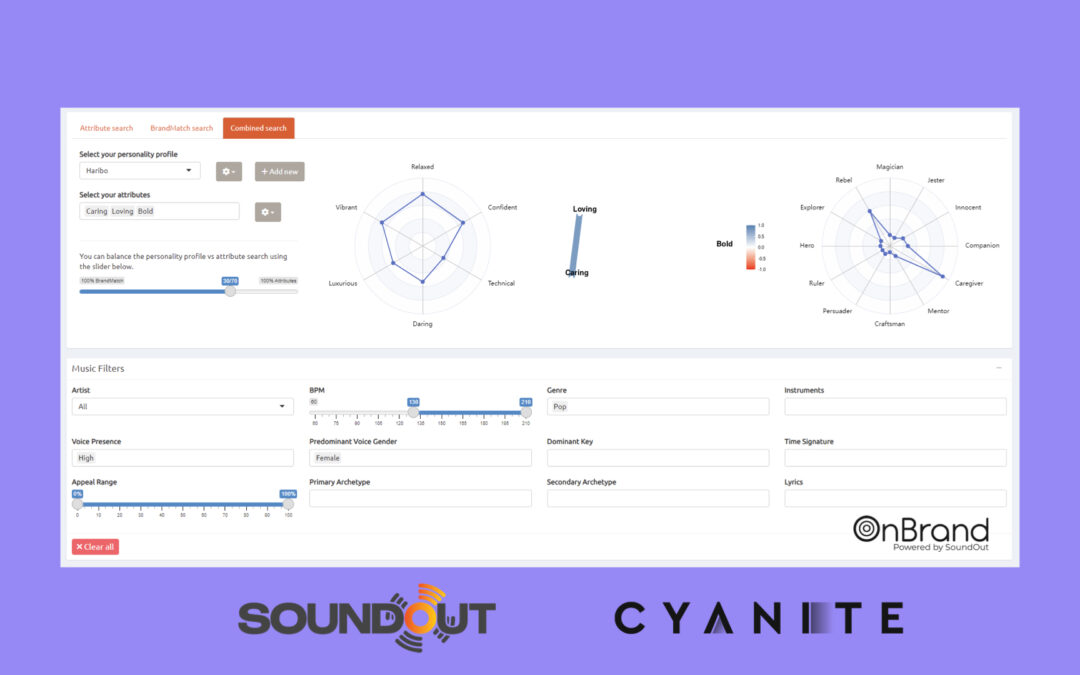

But we can learn from generative AI. Popularised by systems such as Midjourney, ChatGPT and Riffusion, prompt-based experiences are quickly becoming part of everyone’s habits. But instead of falling back on bleak replicas of human-created images, texts or music, AI engines can give music catalogue owners the power to build a comparable search experience, with the advantage of surfacing well-crafted, great-sounding songs with a real human and a real story behind them.

There are vast archives of music of all genres lying dormant, and thousands of forgotten tracks within existing collections, that could be generating revenue through licensing deals for film, TV, advertising, trailers, social media clips and video games, through licences for sampling, or even as a USP for investors looking to purchase unique collections. It’s not a coincidence that litigation over plagiarism is skyrocketing. With hundreds of millions of songs around, there is a growing likelihood that the perfect song for any use case already exists and just needs to be found rather than mass-generated by AI.

With this in mind, the real value of AI to music custodians lies in its search and curation capabilities, which enable them to find new and diverse ways for the music in their catalogues to work harder for them.

How AI music curation and AI tagging work

To realize the power of artificial intelligence to extract value from music catalogues, you need to understand how AI-driven curation works.

Simply put, AI can do most things a human archivist can do, but much, much faster: processing vast volumes of content, and tagging, retagging, searching, cross-referencing and generating recommendations in near real-time. It can surface the perfect track (the one you’d forgotten, didn’t know you had, or would never have considered for the task at hand) in seconds.

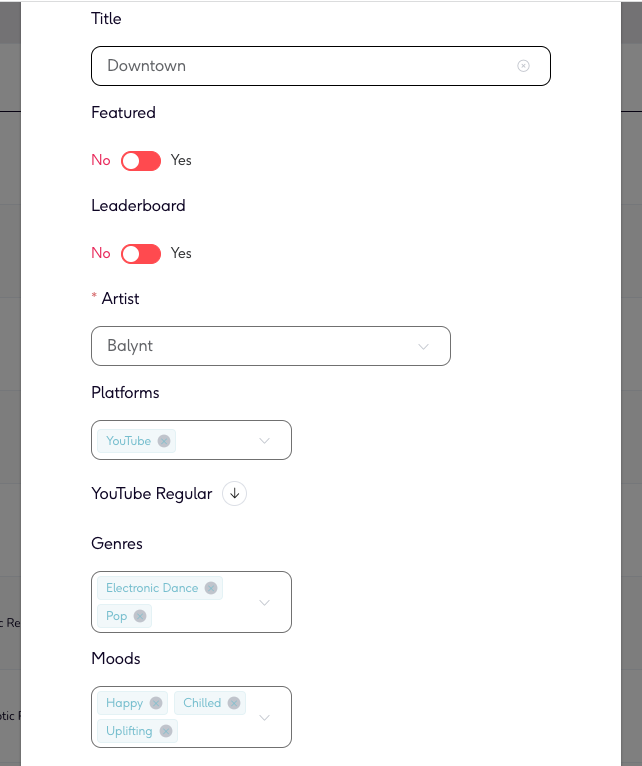

This is because AI is really good at auto-tagging, a job few humans relish. It can categorise entire music libraries by likely search terms, tagging each recording by artist and title, and also by genre, mood, tempo and language. As well as taking on a time-consuming task, AI removes the subjectivity of a human tagger, while still being able to identify the sentiment in the music and make complex links between similar tracks. AI tagging is not only consistent and objective (it has no preference for indie over industrial house), it also offers the flexibility to retag as often as needed.
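To make the idea of tag-based search concrete, here is a minimal, hypothetical sketch in Python. It assumes each track already carries tags produced by an auto-tagging model (genre, moods, tempo in BPM, language); the `Track` record, the `search_by_tags` function and the sample catalogue are illustrative inventions, not the interface of any real product. Once tags exist, searching becomes a simple filter over them.

```python
from dataclasses import dataclass, field


@dataclass
class Track:
    # Hypothetical catalogue record; in practice the tags would be
    # written by an auto-tagging model rather than typed by hand.
    artist: str
    title: str
    genre: str
    moods: set = field(default_factory=set)
    bpm: float = 0.0
    language: str = "instrumental"


def search_by_tags(catalogue, genre=None, mood=None, bpm_range=None, language=None):
    """Return the tracks whose tags match every filter that was supplied."""
    results = []
    for track in catalogue:
        if genre is not None and track.genre != genre:
            continue
        if mood is not None and mood not in track.moods:
            continue
        if bpm_range is not None and not (bpm_range[0] <= track.bpm <= bpm_range[1]):
            continue
        if language is not None and track.language != language:
            continue
        results.append(track)
    return results


# Illustrative sample data only.
catalogue = [
    Track("Artist A", "Night Drive", "synthwave", {"dark", "driving"}, 110.0),
    Track("Artist B", "Sunday Porch", "folk", {"warm", "calm"}, 84.0, "en"),
    Track("Artist C", "Neon Rain", "synthwave", {"melancholic", "dark"}, 96.0),
]

matches = search_by_tags(catalogue, genre="synthwave", mood="dark", bpm_range=(90, 115))
print([t.title for t in matches])  # ['Night Drive', 'Neon Rain']
```

Because the filters only read the tags, retagging a library with an improved model changes the search results without touching the search logic.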

The result is that, no matter how dusty and impenetrable a back catalogue, all its content becomes accessible for search and discovery. AI has massively improved both identification and recommendation for music catalogues. It can surface a single song using semantic search, which identifies the meaning of the lyrics. Or it can pick out the particular musical characteristics that make a track in your library sound similar to another composition (one that you don’t own the rights to, for example). This allows AI to use reference songs to search through catalogues for comparable tracks.
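One common way to implement reference-based search is to describe every track as a numeric feature vector and rank the catalogue by cosine similarity to the reference song's vector. The sketch below assumes that approach; it is a generic illustration, not a description of how any particular engine works, and the four feature dimensions, track names and values are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an all-zero vector carries no information to compare
    return dot / (norm_a * norm_b)


def find_similar(reference, catalogue, top_k=2):
    """Rank catalogue tracks by similarity to the reference vector."""
    scored = [(title, cosine_similarity(reference, vec)) for title, vec in catalogue.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical 4-dimensional features: energy, tempo, brightness, acousticness.
catalogue = {
    "Night Drive": [0.8, 0.7, 0.6, 0.1],
    "Sunday Porch": [0.2, 0.3, 0.4, 0.9],
    "Neon Rain": [0.7, 0.5, 0.5, 0.2],
}

# Features extracted from a reference song the catalogue owner doesn't hold rights to.
reference = [0.75, 0.6, 0.55, 0.15]

for title, score in find_similar(reference, catalogue):
    print(f"{title}: {score:.3f}")
```

Real systems typically learn much higher-dimensional vectors from the audio itself and use an approximate nearest-neighbour index instead of scoring every track, but the ranking principle is the same.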

The power of AI music catalog search

The ability of AI to slice and dice back catalogues in these ways is of considerable value to companies that produce and licence audio for TV, film, radio and multimedia projects. Being able to search their archives intelligently and at high speed means they can deliver exactly the right recording for any given movie scene or gaming sequence.

Highly customisable playlists culled from a much larger catalogue are another benefit of AI-assisted search. While its primary function is to allow streaming services such as Spotify to deliver ‘you’ll like this’ playlists to users, for catalogue owners it means extracting infinitely refinable sub-sets of music which can demonstrate the archive’s range and offer a sonic smorgasbord to potential clients.


Another major value-add is the extraction of ‘hidden’ music. The ability of AI to make connections based on sentiment and even lyrical hooks and musical licks, as well as tempo, instruments and era, allows it to match the right music to any project with a speed and precision that only the most dedicated catalogue curator could match. With its capacity to search vast volumes of content, AI opens the entirety of a given library to every search and surfaces obscure recordings. Rather than just making money from their most popular tracks, therefore, the owners of music archives can make their whole collection work for them.

The tools to do all of this already exist. Our own solution is a powerful AI engine that tags and searches an entire catalogue in minutes with depth and accuracy. Meanwhile, AudioRanger is an audio recognition AI which identifies the ownership metadata of commercially released songs in music libraries. And PlusMusic is an AI that makes musical pieces adaptive for in-game experiences: as the situation in the game changes, the same song adapts to it.

Generative AI – time for careful reflection

The debate on the role of generative AI in the music industry won’t be settled anytime soon, and nor should it be. We should reflect carefully on the incorporation of any technology that might reshape our industry. We should ask questions such as: how do we protect artists? How do we use the promise of generative AI to enhance human art? What legal and ethical challenges does this technology pose? All of these issues must be addressed for the industry to reap the benefits of generative AI.

Adam Taylor, President and CEO of the American production music company APM Music, shared with me that he believes it is vital to safeguard intellectual property rights, including copyright, as generative AI technologies grow across the world. As he puts it: “While we are great believers in the power of technology and use it throughout our enterprise, we believe that all technology should be used in responsible ways that are human-centric. Just as it has been throughout human history, we believe that our collective futures are intrinsically tied to and dependent on retaining the centrality of human-centered art and creativity.”

The debate around the role of generative AI models will continue to play out as we look for ways to embrace new technologies and protect artists, and naturally there are those like Adam who will wish to adopt a cautious approach. But while many are reluctant to wholeheartedly embrace generative AI models, many more are willing to embrace analysis and search AI to protect their catalogues and make them more efficient and searchable.

Ultimately, it’s down to the industry to take control of this issue, find a workable level of comfort with AI capabilities, and build AI-enhanced music environments that will vastly improve the searchability – and therefore usefulness – of existing, human-generated music.

If you want more of Markus’s views on the music industry, you can connect with him on LinkedIn here.