PR: APM Music Partners with Cyanite to Enhance Music Tagging

PRESS RELEASE

APM Music Partners with Cyanite to Enhance Music Tagging

Mannheim/Berlin/Los Angeles, September 9, 2021 – APM Music, the largest production music library in North America, and Cyanite, a technology company developing a suite of AI-powered music search products, announced today a strategic partnership that will provide users with improved tagging and metadata to enhance their search queries.

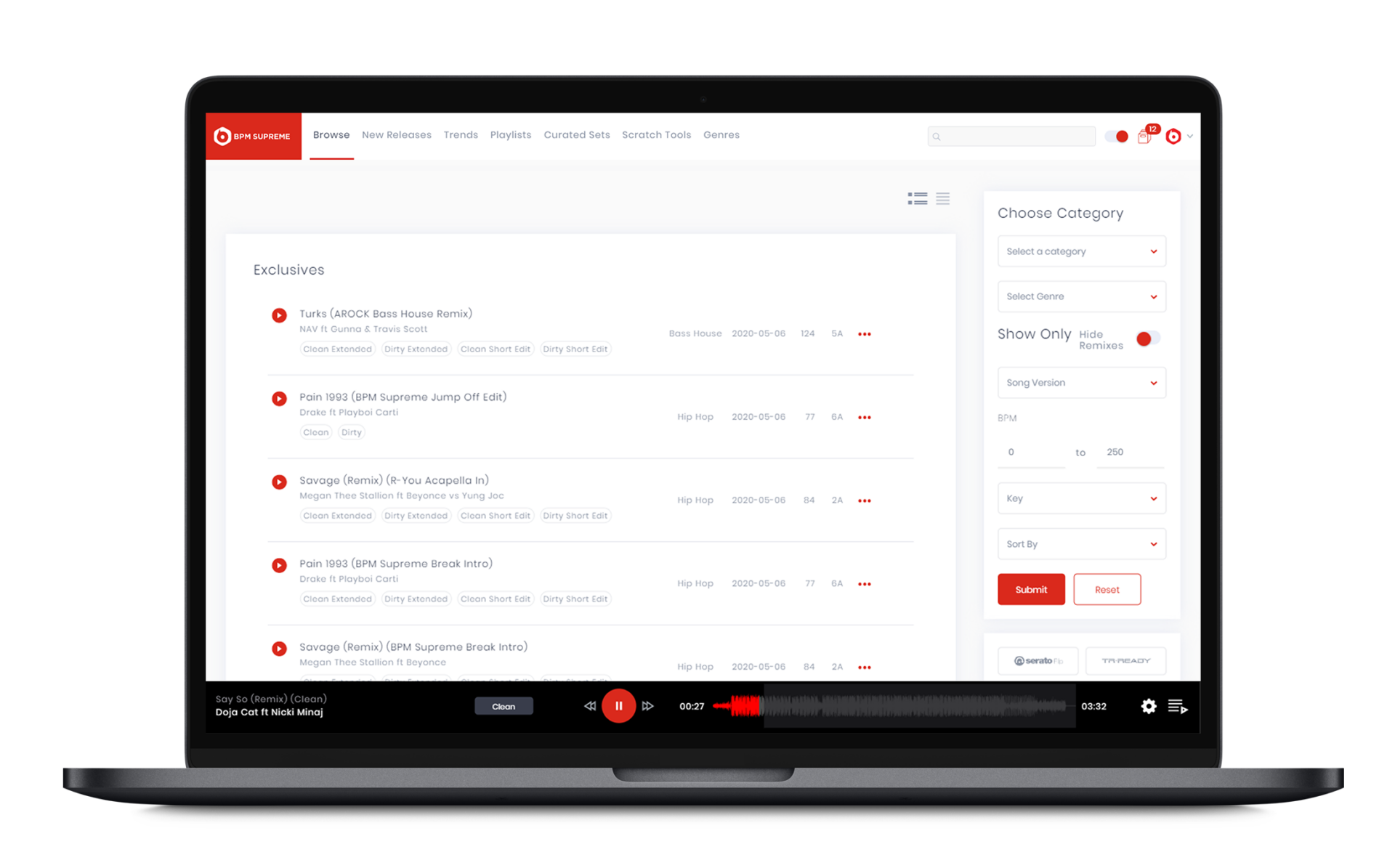

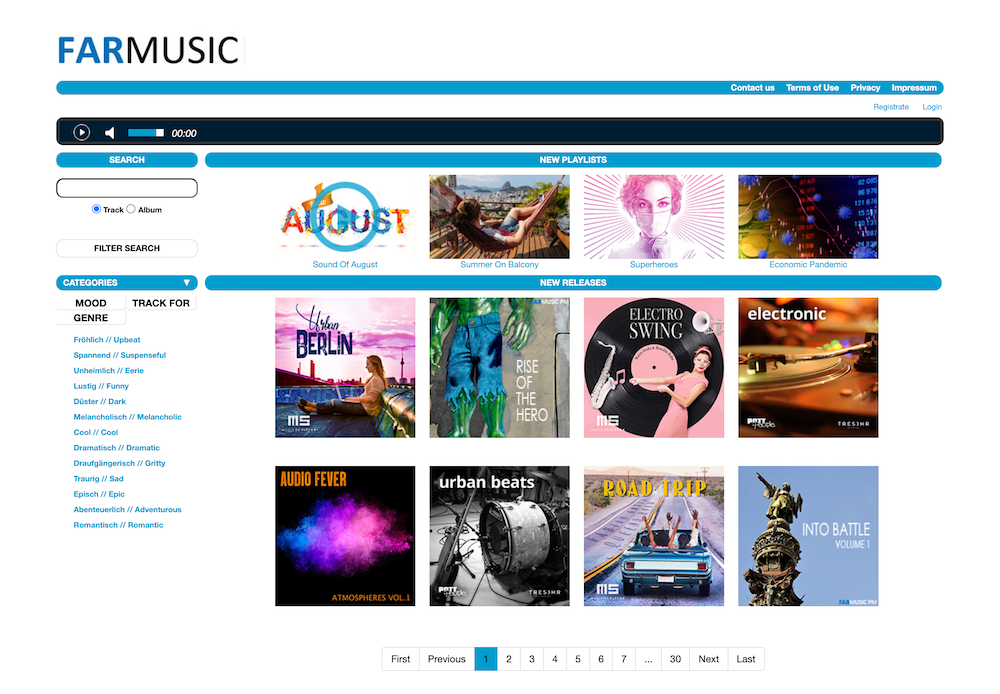

A superior music discovery experience begins with content that is comprehensively, consistently, and accurately tagged. With an ever-growing music library such as the one APM Music has been providing the marketplace for nearly four decades, maintaining high-quality tagging and precise metadata at a large scale is a primary concern. Incorporating Cyanite’s AI will allow APM Music to introduce human-assisted auto-tagging to the music submission and review process, thus increasing the quality and consistency of tagging.

APM Music’s President/CEO Adam Taylor comments: “For APM Music, accurate and reliable music tagging has always been of the utmost importance and we are aligned with Cyanite on this constant strive for quality. Markus and the team have proven that they are able to quickly react to our feedback and improve their algorithms at a rapid speed. We are excited to integrate artificial intelligence into APM and create the best possible support for our team.”

Cyanite’s artificial intelligence listens to and categorizes songs, helping to deliver the right music content, no matter the use case. Integrating this technology will benefit the end user by ensuring search queries continue to yield accurate and tailored results as APM’s music library expands in depth and breadth.

Markus Schwarzer, CEO of Cyanite: “We look forward to working with such a prestigious and renowned partner as APM Music. Everyone on their team is a unique expert in their field. Being the chosen AI partner for the important and extensive transition into this new age of music distribution fills our entire team with pride.”

The addition of Cyanite’s technology extends APM’s commitment to continually improving the quality and performance of its search engine, delivering a discovery experience that matches the richness of its catalog.

Anyone wishing to try Cyanite’s technology can register for the Web App free of charge and upload music, or integrate Cyanite into an existing database system via its API.

Full press material, including the German press release, can be found via this link.

Background to APM Music:

APM Music is located in Hollywood, California, and is the premier go-to source for production music. Founded in 1983, APM Music is the largest production music library in North America. To find out more, please visit www.apmmusic.com.

Background to Cyanite:

Cyanite believes that state-of-the-art technology should not be exclusive to big tech companies. The start-up is one of Europe’s leading independent innovators in the field of music AI and supports some of the most renowned and innovative players in the music and audio industry. Among the music companies using Cyanite are Mediengruppe RTL, the record pool BPM Supreme, the radio station SWR, the music publishers NEUBAU Music and Schubert Music, and the sound branding agencies Universal Music Solutions, TAMBR, and amp sound branding.

Press Inquiries

Jakob Höflich

Co-Founder

+49 172 447 0771

jakob@cyanite.ai

Headquarters Mannheim

elceedee UG (haftungsbeschränkt)

Badenweiler Straße 4

68239 Mannheim

Berlin Office

Cyanite

Gneisenaustraße 44/45

10961 Berlin