How to Build Trust with the Music Industry Gatekeepers – 3 Working Ways

Let’s face it: your success as an artist is significantly influenced by the amount of love you get from the gatekeepers of the music industry. But who are the gatekeepers in the music industry? Music gatekeepers are the people and entities that control access to your audience: bloggers, playlist curators, magazines, social media influencers, A&Rs, radio DJs, and so on. They hold the keys to the doors, or “gates,” that lead to the audience and to sales. They can also become a bottleneck on your way to music fans.

Usually, a track reaches the audience through a complicated chain that runs from the artist to a record label, radio stations, the press, retail stores, and event booking agents. So gatekeeping in music is a regular and widely accepted practice. In recent years, some of these gatekeepers have been replaced by streaming platforms and their music curators. These “new” gatekeepers combine human and algorithmic editorial practices to build playlists and influence music listeners. One source estimates that there are around 400 music curators worldwide working for Spotify, Apple Music, Deezer, and Google Play Music. On top of that, there are user-created playlists and playlists run by music blogs on all of these platforms.

The key to their heart is:

- Understanding how they got there

- Helping them stay relevant to their audience.

Gatekeepers in music are not that different from you. It took them hard work and time to build trust with their audience, and that trust is why they have so much influence. People stick to a blog, playlist, or radio station because they know the gatekeeper will deliver what they want; the listeners know what to expect. For music industry gatekeepers, this means they have to deliver fresh content with a good level of continuity, both in timing and in style.

So in reality, music industry gatekeepers depend on YOU and your music! This is important to understand. Yet you are not the only artist around. This means they constantly have to choose between several songs or artists, and you need to make it easy for them to decide in your favor.

YOU DO THIS BY EARNING THEIR TRUST.

- Show them that you mean it.

- Show them they are not alone in supporting you.

- Show them that their audience will like the track.

A brand image that revolves around your music also signals a serious level of professionalism. Don’t ignore your branding as an artist: it helps create a consistent identity that the audience gravitates toward. This article by Spinnup sums up the topic very well.

Some gatekeepers will only take your song if they see that you’re actively promoting it yourself. You can do that by advertising on Facebook, Instagram, or Twitter. For a clever hack on social media advertising, see the guide How to Create Custom Audiences for Pre-Release Music Campaigns in Facebook, Instagram, and Google.

1. Listen carefully to all their music, radio shows, playlists, mixes, etc., and find common characteristics.

2. Make use of music analysis tools to support your pitching with objective data.

Analysis tools offer a neutral perspective on both your music and the gatekeeper’s offerings and act as an objective advocate. A solution like Cyanite displays the emotional profile and musical style of your songs. If you analyze the playlist you want to be placed on and its profile matches your song’s, the curator is more likely to consider your song.
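To make the idea of a profile “match” a little more concrete, here is a minimal Python sketch. It assumes that an emotional profile can be expressed as scores between 0 and 1 for a handful of mood labels, that a playlist’s profile is simply the average of its tracks’ profiles, and that cosine similarity is a reasonable way to compare two profiles. The mood labels, the scores, and the 0.8 threshold are hypothetical illustrations; this is not how Cyanite computes or compares its profiles.

```python
import math
from statistics import mean

# Hypothetical mood labels with scores in [0, 1]. These are illustrative
# assumptions, not Cyanite's actual taxonomy or output format.
MOODS = ["calm", "chilled", "melancholic", "uplifting", "energetic", "dark"]


def to_vector(profile: dict[str, float]) -> list[float]:
    """Turn a {mood: score} dict into a fixed-order vector; missing moods count as 0."""
    return [profile.get(mood, 0.0) for mood in MOODS]


def playlist_profile(tracks: list[dict[str, float]]) -> dict[str, float]:
    """Average the mood profiles of all tracks in a playlist (simple assumption)."""
    return {mood: mean(t.get(mood, 0.0) for t in tracks) for mood in MOODS}


def cosine_similarity(a: dict[str, float], b: dict[str, float]) -> float:
    """1.0 means identical mood proportions, 0.0 means no shared moods."""
    va, vb = to_vector(a), to_vector(b)
    dot = sum(x * y for x, y in zip(va, vb))
    norm = math.sqrt(sum(x * x for x in va)) * math.sqrt(sum(y * y for y in vb))
    return dot / norm if norm else 0.0


def is_good_fit(track: dict[str, float],
                playlist_tracks: list[dict[str, float]],
                threshold: float = 0.8) -> bool:
    """Hypothetical rule of thumb: only pitch if the similarity clears the threshold."""
    return cosine_similarity(track, playlist_profile(playlist_tracks)) >= threshold


if __name__ == "__main__":
    # Made-up example data, not taken from the screenshots in this article.
    my_track = {"calm": 0.7, "chilled": 0.8, "melancholic": 0.5, "energetic": 0.3}
    playlist = [
        {"calm": 0.6, "chilled": 0.9, "melancholic": 0.4},
        {"calm": 0.8, "chilled": 0.7, "melancholic": 0.6, "energetic": 0.2},
    ]
    score = cosine_similarity(my_track, playlist_profile(playlist))
    print(f"emotional fit: {score:.2f}, pitch: {is_good_fit(my_track, playlist)}")
```

Cosine similarity compares the shape of two profiles rather than their absolute values, so a track and a playlist with the same mix of moods score high even if one is more intense overall. Any real threshold would have to come from experience with actual curators, not from this sketch.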

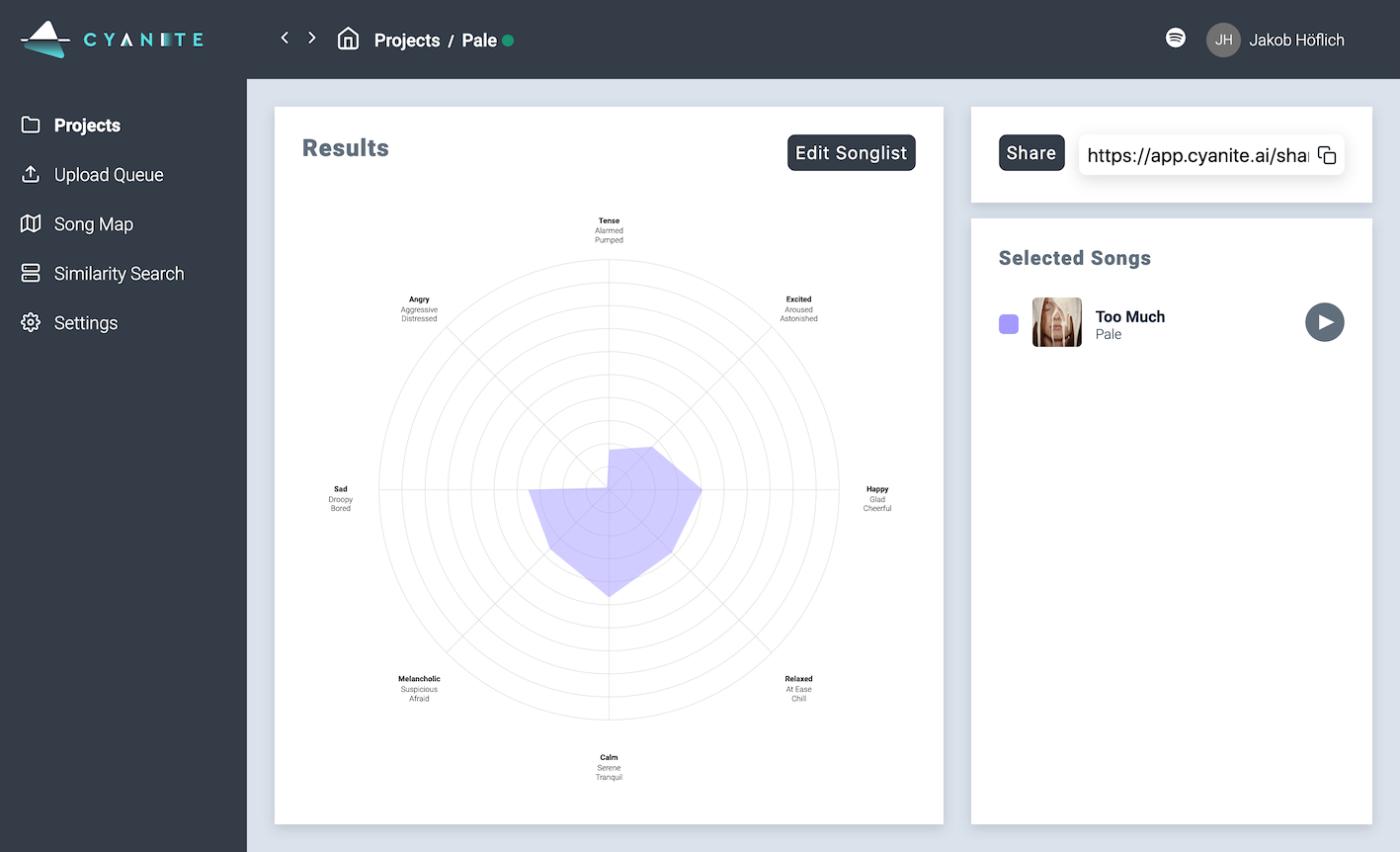

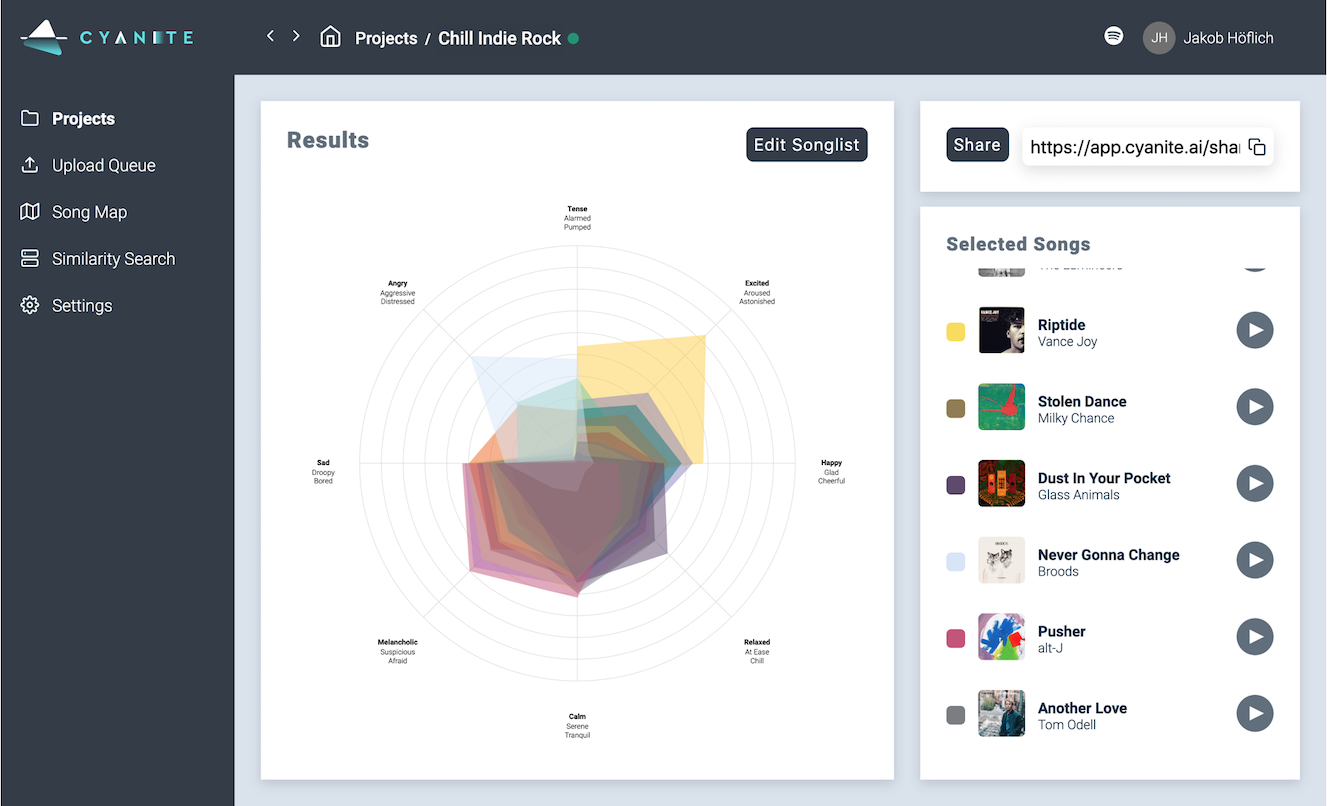

Let’s explore an example. The two screenshots taken from Cyanite show the emotional profile of the song Too Much by the British band Pale and the emotional profile of the independently curated playlist Chill Indie Rock. Imagine you are pitching the song to the curator of the playlist: you can add the “emotional fit” as another piece of your story.

However, if it doesn’t match, find a playlist that does. We created a more detailed guide on how to pitch to playlists on music streaming services; read it before you start pitching Spotify curators.

Screenshot 1: Track Mood Analysis of Pale – Too Much


Screenshot 2: Playlist Chill Indie Rock


Networking in the music industry means being active on social media: commenting on posts and sending supportive tweets to blog owners. A mutual connection via email or LinkedIn is ideal, as it increases trust, but it is hard to come by. By supporting the gatekeeper and being an active fan, you can make sure your pitch is not a completely cold approach.

While it is important to network with the gatekeepers through social media channels and email, it is more important to understand the angle of the outlet and how well your music fits into it. To see how you can identify the right match, see the article How to Write Press Releases and Music Pitches with Cyanite.

Gatekeepers have hundreds of people contacting them every day but only a limited amount of time to sift through the endless stream of music. Most of them cover a very distinct kind of music and artist. ‘Musical style’ is only a small part of it: they also consider the stage of your career, your looks, your affiliation with other artists, your political views and engagement, and your lifestyle.

If you are not a near-perfect match, don’t bother writing to them; that time is better spent creating new music and/or building up your social media game and brand image around your music. With the gatekeepers that do match your music, make sure you use a warm approach, show them that you are serious about your music, and use the analytics and AI tools out there to enrich your story with objective data that helps the gatekeepers make a positive decision.

Markus is the Co-Founder and CEO of CYANITE. Before that, he co-founded the boutique label Serve & Volley Rec. and worked at the music promotion agency Shoot Music.

I want to use Cyanite to reach the music gatekeepers – how can I get started?

Please contact us with any questions about our Cyanite AI via sales@cyanite.ai. You can also directly book a web session with Cyanite co-founder Markus here.

If you want to get a first feel for Cyanite’s technology, you can also register for our free web app to analyze music and try similarity searches without any coding needed.