Explore New Cyanite Features – June 2021

Introducing Cyanite’s new features

We are constantly updating and adding new features to Cyanite based on your feedback. In June we released features that will make you even more efficient at analyzing and discovering music.

Our new features include an updated library view, more opportunities for deep analysis with genre and mood values, musical era tags, and improved Similarity Search with Custom Interval scanning and up to 100 search results.

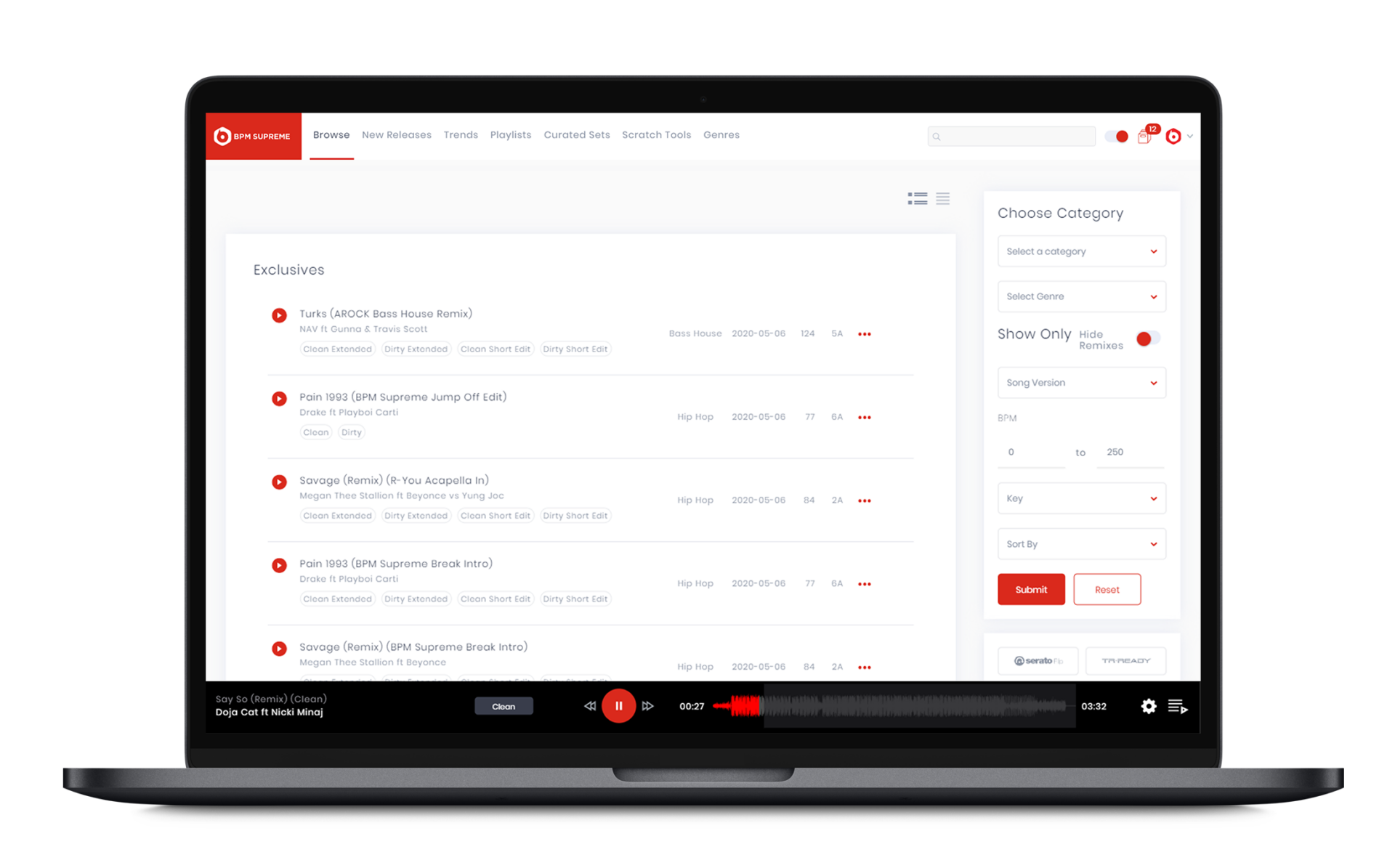

New Library View

The new library view is a feast for your eyes with the following new features:

- You can now enjoy the library view with colored tabs highlighting results in Energy Level, Emotional Profile, and other columns. The color scheme helps you understand the song’s character right away.

- Find the exact numerical values for each genre and mood and get a granular analysis of the song. You’ve never experienced such a detailed music analysis before.

- Additionally, have a look at the musical era column. The AI can now estimate which decade a song sounds like it comes from.

Similarity Search

The Similarity Search lets you search your own database using a reference track from Spotify, YouTube, or your own library, and filter the results by genre, BPM, and key.

- We have now improved the performance of similarity search so you can get more relevant and precise results.

- Use the Custom Interval to choose the exact part of your reference song that you want to find similar tracks for.

- You can now display up to 100 songs in the search results.

Augmented Keywords

If there are keywords you don’t see in Cyanite but would like to use, we have additional keywords for you. Augmented keywords are now available and can be unlocked upon request. Explore genres, brand values, moods, and ‘music fors’ such as “urban”, “mellow”, “party”, “sports”, “contemplative”, and “confident”.

I want to try out Cyanite’s AI platform – how can I get started?

If you want a first impression of how Cyanite works, you can register for our free web app to analyze music and try out similarity searches, with no coding needed.

Contact us with any questions about our frontend and API services via mail@cyanite.ai. You can also directly book a web session with Cyanite co-founder Markus here.

For more Cyanite content on AI and music, check these out:

The 4 Applications of AI in the Music Industry

Translating Sonic Languages: Different Perspectives on Music Analysis