How AI Empowers Employees in the Music Industry

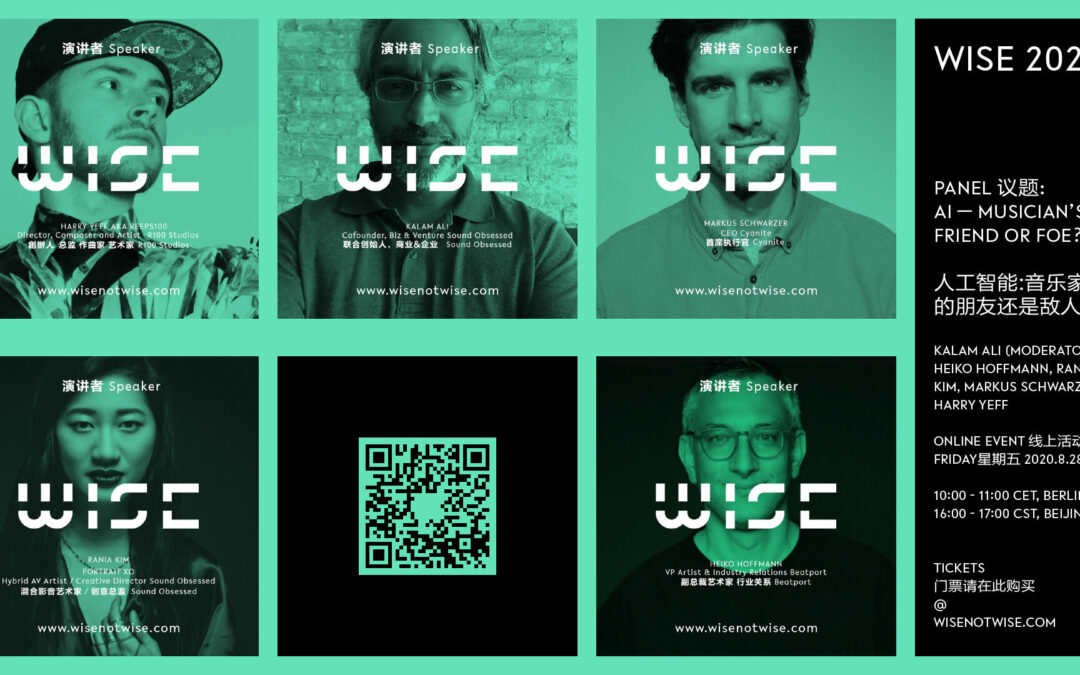

According to a McKinsey report, 70% of companies are expected to adopt some kind of AI technology by 2030, and the music industry is no exception. Yet when it comes to AI, skepticism often overshadows its potential. AI is widely seen as a job killer, a force that will outperform humans in creativity and value creation, and this view causes anxiety across all industries. This article offers a different perspective on AI in music and shows how AI can help solve typical business problems, such as keeping employees motivated and making their work more meaningful.

The music industry stands out in its AI adoption journey because it faces very tangible problems that AI can solve. Around 300,000 tracks are uploaded to the internet every day, and the number of artists on Spotify could reach 50 million by 2025. At this scale, the output exceeds human capacity to manage it, so sometimes there is no alternative to using AI. Despite these benefits, the negative impact of AI is a common subject in the media; see the article here.

Annie Spratt © Unsplash

For a more balanced view on the topic, let’s explore how AI can actually help people working in the music industry when the goal is not to maximize profits and productivity but to empower the workforce.

How AI can improve the employee experience

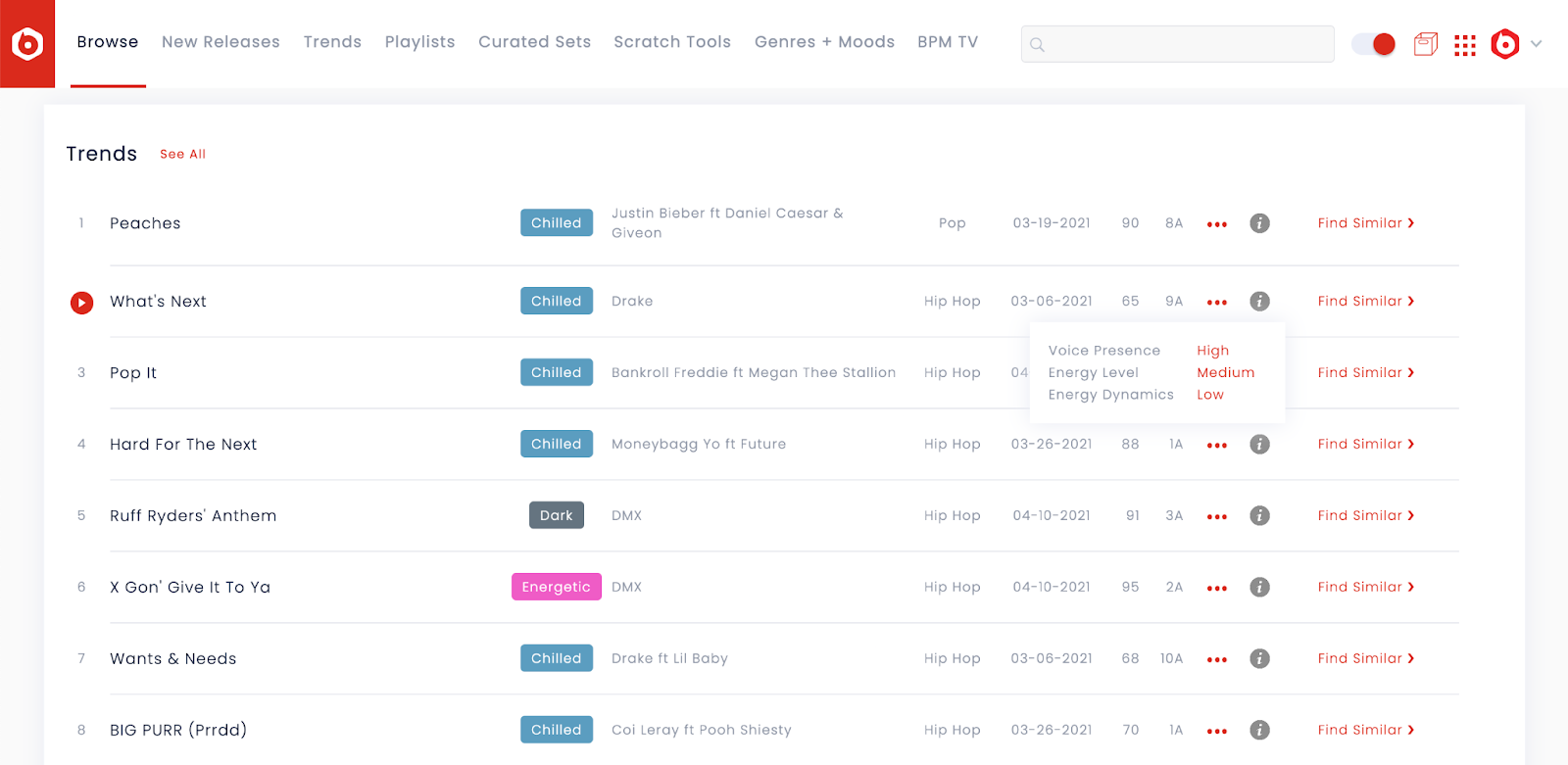

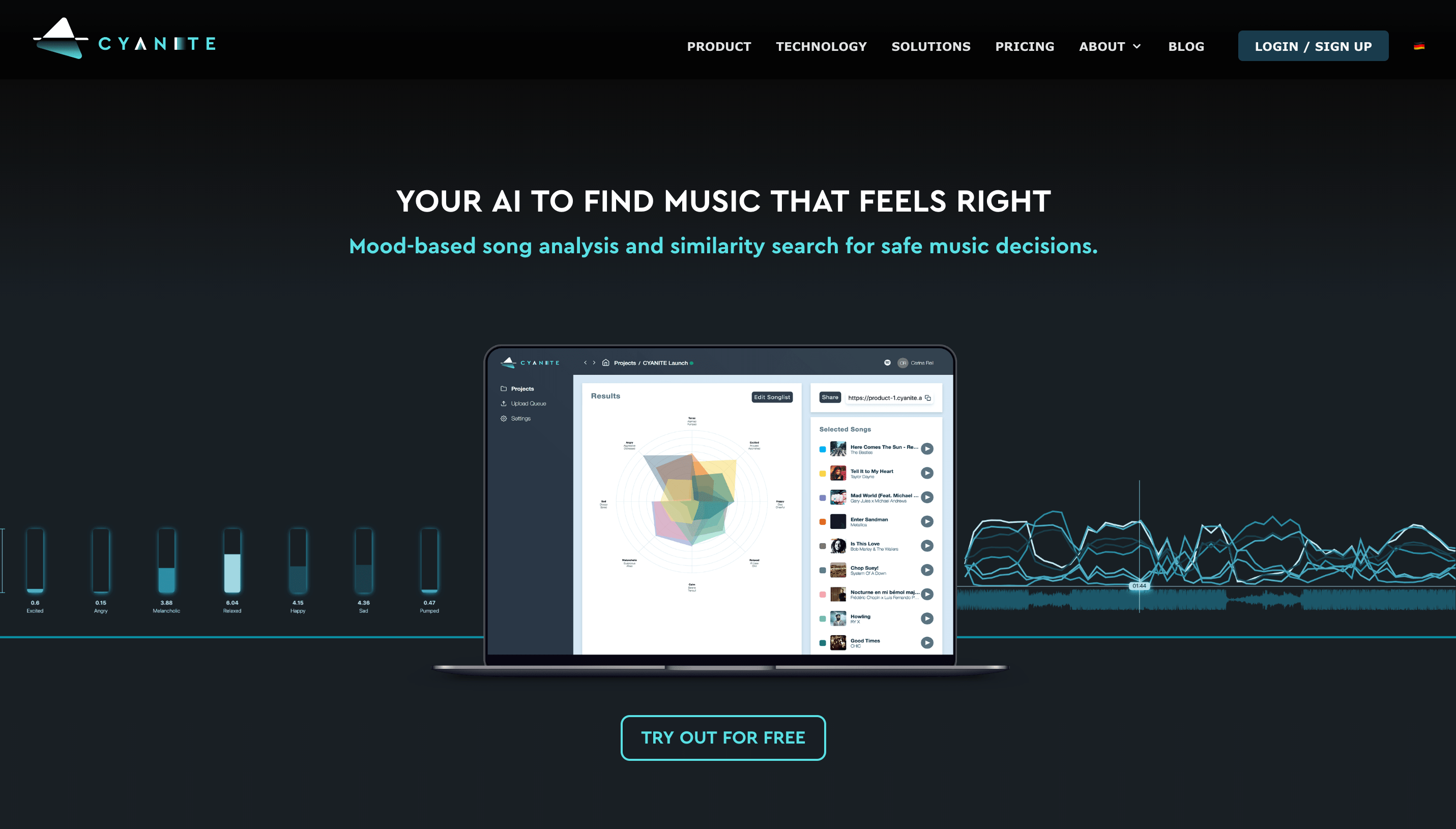

Speed up music tagging and search

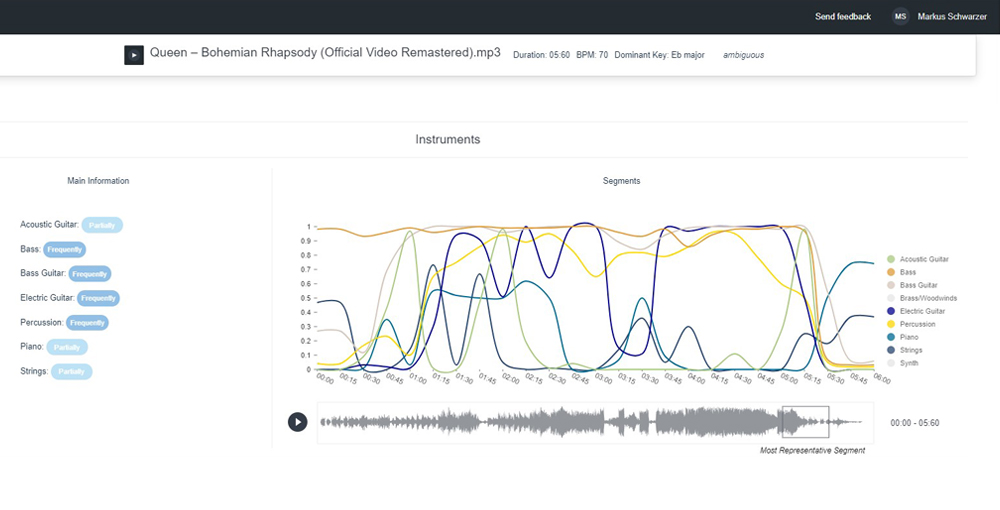

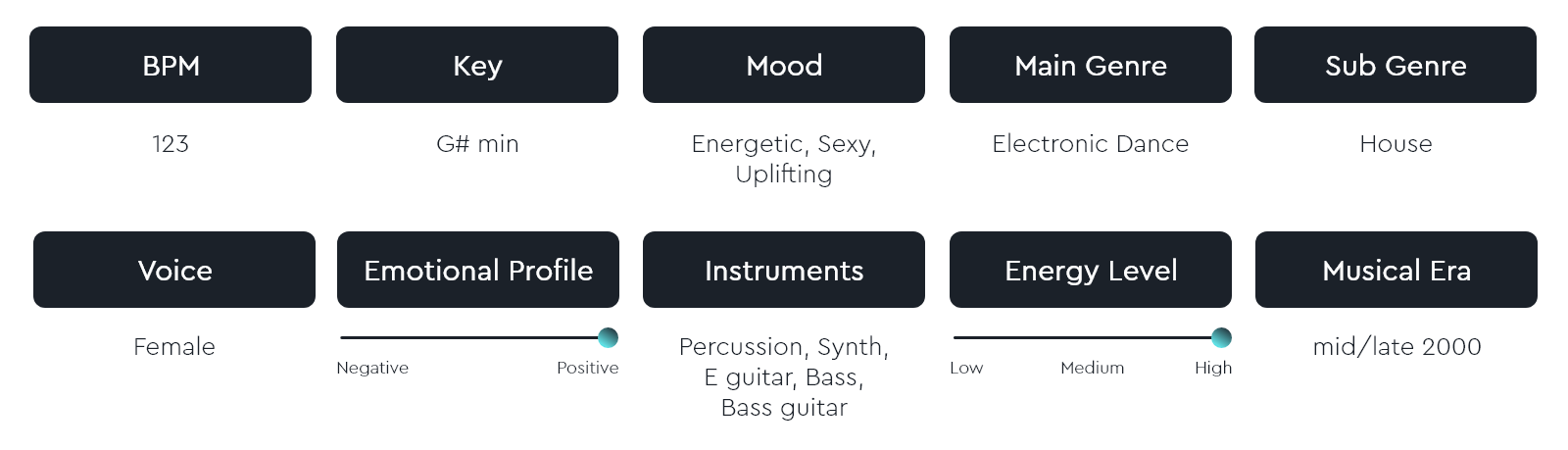

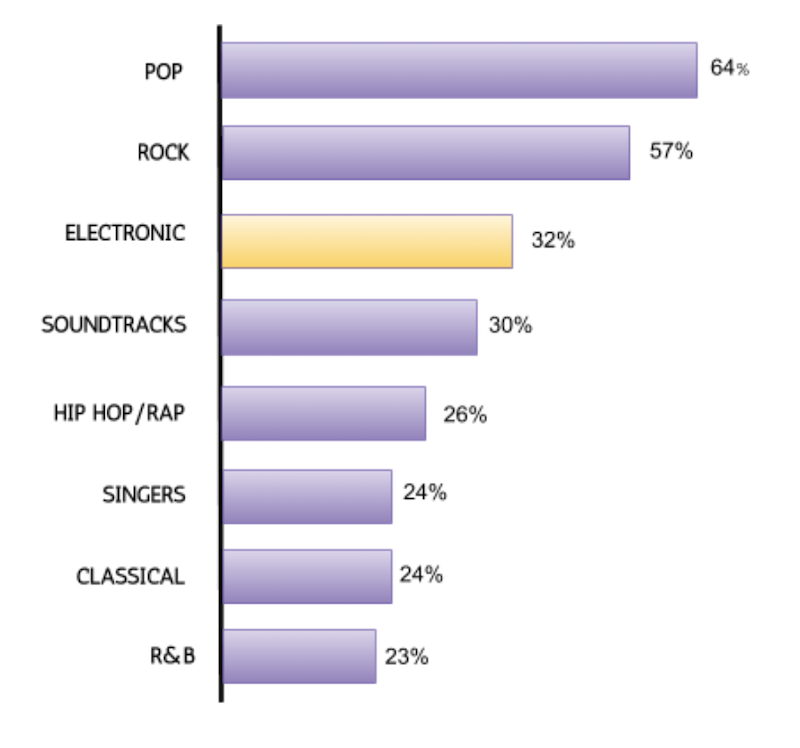

Music companies constantly have to deal with music search, and the foundation of good music search is a well-managed, consistently tagged music library. Such a library usually relies on a lot of metadata. Some of it consists of “hard factors” such as release date, recording artist, or title. But “soft factors” such as “genre”, “mood”, “energy level”, and other descriptive attributes are becoming more and more important.

Unsurprisingly, assigning tags for these soft factors is a tedious and subjective task. AI helps by automatically analyzing a track’s audio and categorizing the song. It can easily distinguish rock from pop, detect emotions such as “sad” or “uplifting”, identify the instruments in a track, and tag thousands of songs in a very short time.

That said, music-tagging AI is not error-free: it is not perfect and still needs human supervision.

Automatic tagging speeds up the work, which gives companies the opportunity to add songs to their catalog quickly and pitch them to customers.

Clean up a huge amount of data

As with AI, errors are always possible when a person manages a catalog. When different team members have managed a catalog over the years, the number of errors can become overwhelming.

AI helps reduce human errors in existing catalogs and prevent them in the future. For existing catalogs, AI can analyze the data, discover oddly tagged songs, and then eliminate the discrepancies. AI will also make some mistakes, but it will make them consistently, which makes them easier to find and correct. You can see how it works step by step in this case study here.
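To make the idea of discovering oddly tagged songs more concrete, here is a minimal Python sketch of one possible approach: compare each human-assigned genre with the genre suggested by an auto-tagging model and flag confident disagreements for a person to review. The `Track` fields, the `flag_suspicious` helper, the 0.8 confidence threshold, and the sample catalog are all hypothetical; the sketch is not meant to describe Cyanite’s actual pipeline.

```python
from dataclasses import dataclass


@dataclass
class Track:
    title: str
    human_genre: str      # tag assigned by staff over the years
    predicted_genre: str  # tag from a hypothetical auto-tagging model
    confidence: float     # model confidence in [0, 1]


def flag_suspicious(tracks, min_confidence=0.8):
    """Return tracks where a confident model disagrees with the human tag."""
    return [
        t for t in tracks
        if t.human_genre.lower() != t.predicted_genre.lower()
        and t.confidence >= min_confidence
    ]


# Hypothetical sample data for illustration only.
catalog = [
    Track("Song A", "Rock", "rock", 0.93),
    Track("Song B", "Pop", "electronic", 0.88),
    Track("Song C", "Jazz", "classical", 0.55),
]

for t in flag_suspicious(catalog):
    print(f"Review {t.title}: tagged {t.human_genre}, model suggests {t.predicted_genre}")
```

With these values, only Song B is flagged: Song A agrees with the model, and the model is not confident enough about Song C. Keeping a human in the loop for flagged songs reflects the point above that AI still needs supervision.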

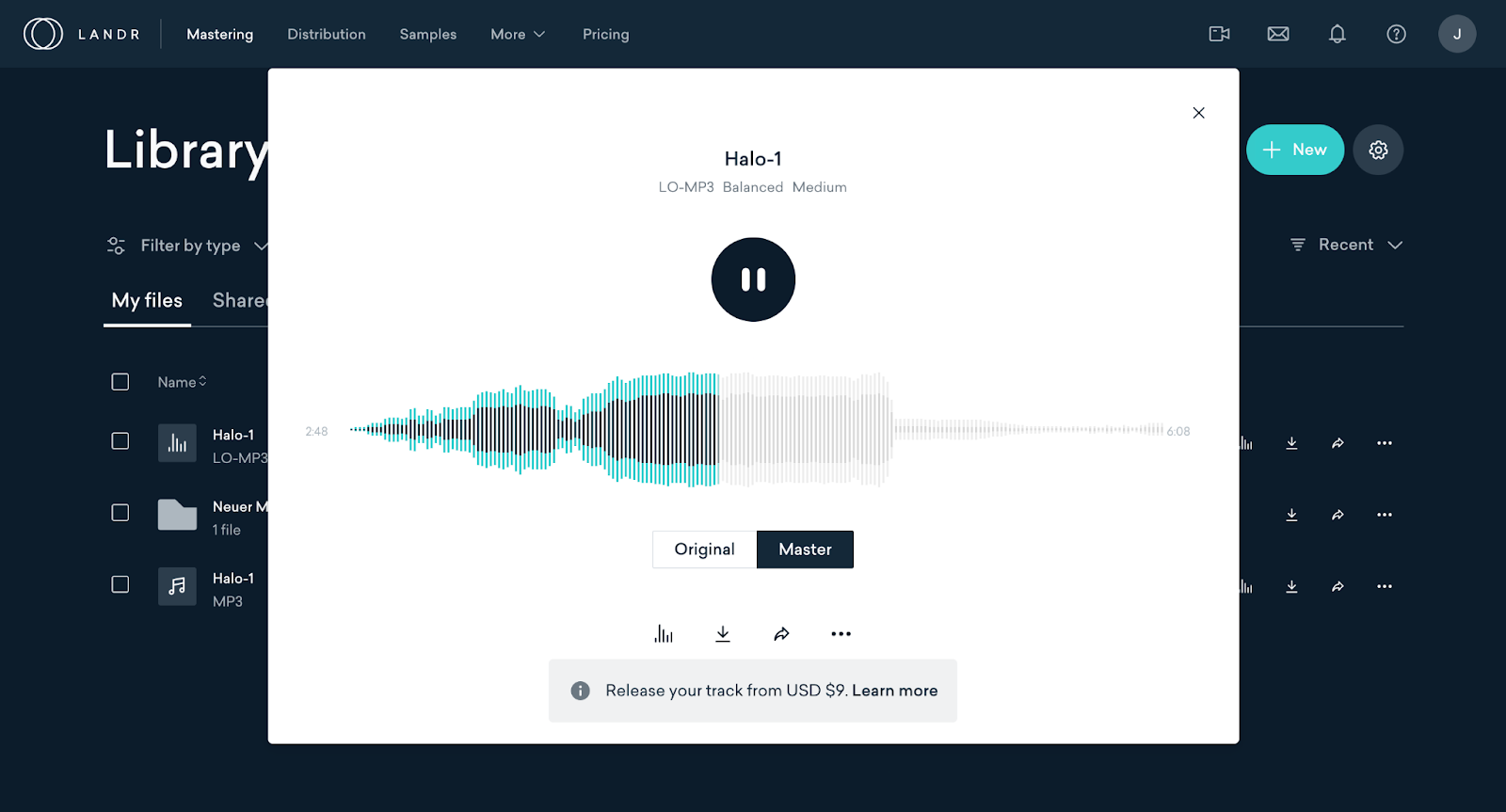

Enrich tedious music discussions with AI-generated data

AI is great for visualizing data and making complex information digestible, and that applies to music, too! Some visualizations group songs by emotion, giving you a comprehensive overview of the library. For single tracks, AI can analyze emotions across the whole track or within a custom segment. Another way to visualize a catalog is to group songs by similarity, so that the most similar artists are clustered together.

Ever tried to convince a data-driven CMO that a particular song has a melancholic touch and doesn’t fit the campaign well? Next time, try backing up your expertise with some data!

Instruments analysis in Cyanite app

In any case, AI can complement marketing and sales efforts by giving companies tools to visualize catalog and song data and then use that data to sell. On a song-by-song basis, a visualization provides a snapshot of the song that is easy to understand. Beyond that, data visualization underlines a company’s innovative, data-centered positioning and adds a bit of spice to its sales efforts.

At Cyanite, we have even created several music analysis stories using visualization.

Reduce human bias and make data-based decisions

AI in music can help ensure that decisions are based on data rather than emotions. For example, when choosing tracks for a brand video, it is important that the music adheres to the brand guidelines. Too often, though, tracks are chosen simply because someone liked them.

To avoid human bias, a check-in with AI can be built into the business process for more consistent and better branding. In the case of a branded video, for example, AI can suggest songs that match the brand profile, whether it is “sexy”, “chill”, or “confident”.

Together, these capabilities of AI can significantly improve the quality of the end result for customers and free employees from tedious, boring tasks.

How AI can boost motivation

Spend more time on creative and meaningful tasks

One benefit of AI is that employees can focus on creative solutions rather than repetitive tasks. This leaves more room for individual qualities such as empathy, communication, and problem-solving, which, as studies confirm, are valuable in customer acquisition and service. If you work in sync licensing and are answering a sync brief, letting AI help you find right-fitting songs in the catalog leaves you more time to craft a creative story about why a song is a great fit beyond its pure sound. In the end, AI has the potential to raise the level of customer service while contributing to higher employee satisfaction.

LinkedIn Sales Solutions © Unsplash

Speed up learning and training new employees

In one of our case studies, we showed the process of cleaning a music library. Some of the assets created during that project can be reused by the company to teach and train new employees. For example, a visualization of song categories can serve as a guide for new staff in charge of tagging new songs. See the case study for more details.

Starting to work with a catalog of 10,000 songs is also a high barrier to entry: it usually takes months to understand a catalog in depth. With a Similarity Search, like the one from Cyanite or those from other services such as Musiio, AIMS, or MusiMap, a catalog search can start intuitively and easily with a reference track. This gives new employees guidance and creates more room for meaningful human work.

Overall, such AI tools are built for ease of use: they are intuitive, need little time to set up, and return results instantly. A better UX helps not only employees but also customers, if they have access to the catalog. To see for yourself, you can try the Cyanite web app here.
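The idea behind searching a catalog with a reference track can be sketched in a few lines of Python: represent each song as a feature vector and rank the other songs by cosine similarity to the reference. The three feature dimensions, the `similar_tracks` helper, and the sample values below are hypothetical; production similarity search engines typically derive much richer representations from the audio itself, and this sketch does not describe any particular product.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def similar_tracks(reference, catalog, k=2):
    """Return the k tracks most similar to the reference, best match first."""
    ref_vec = catalog[reference]
    scored = [
        (title, cosine(ref_vec, vec))
        for title, vec in catalog.items()
        if title != reference
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical feature vectors, e.g. (energy, valence, acousticness).
catalog = {
    "Reference Track": [0.9, 0.7, 0.1],
    "Track 1": [0.8, 0.6, 0.2],
    "Track 2": [0.1, 0.2, 0.9],
    "Track 3": [0.7, 0.8, 0.1],
}

for title, score in similar_tracks("Reference Track", catalog):
    print(f"{title}: {score:.2f}")
```

With these sample values, Track 1 and Track 3 rank above the acoustic-leaning Track 2. Cosine similarity is a common choice here because it compares the direction of the vectors rather than their overall magnitude.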

Ensure a consistent and collaborative approach to work processes and policies

In general, AI applies one consistent tagging scheme automatically, which means less human oversight is needed to keep things running. Clean metadata means that at any point in time the catalog can be repurposed, offered to a third party, or integrated into another system. And integration will become more and more important in the future: could you directly serve a new music-tech startup that wants to offer your catalog on its new licensing platform? How well are you equipped to seize such business opportunities?

When a catalog combines several music libraries and needs a unified approach, AI can scan it for keywords that are identical in meaning and eliminate the redundancies. When a catalog is being integrated into a larger audio library, the AI can draw parallels between the two tagging systems and then automatically retag every song in the style of the new catalog with little to no information loss. Overall, clean metadata and the ability to repurpose catalogs allow music companies to experiment with their offers and be more agile and innovative.
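Eliminating redundant keywords ultimately comes down to mapping every tag to one canonical form. The Python sketch below assumes the synonym groups are already known: in the scenario described above an AI model would propose them, while here they are a hand-written, hypothetical `CANONICAL` dictionary. The `unify_tags` helper is also hypothetical and simply applies that mapping to one song’s tags.

```python
# Hypothetical synonym groups; in practice an AI model might propose these.
CANONICAL = {
    "upbeat": "uplifting",
    "uplifting": "uplifting",
    "happy": "uplifting",
    "melancholic": "sad",
    "sad": "sad",
    "chill": "calm",
    "relaxed": "calm",
}


def unify_tags(tags):
    """Map each tag to its canonical form and drop duplicates, keeping order.

    Tags without a known synonym are kept, lowercased, so no information is dropped.
    """
    unified = []
    for tag in tags:
        canonical = CANONICAL.get(tag.lower(), tag.lower())
        if canonical not in unified:
            unified.append(canonical)
    return unified


print(unify_tags(["Upbeat", "happy", "Chill", "relaxed"]))  # ['uplifting', 'calm']
```

The same lookup idea could translate tags into another catalog’s vocabulary during an integration; a real migration would also need a strategy for tags that have no clear counterpart in the target scheme.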

Summary

There are many benefits of AI for music companies, but there are also quite a few risks. When looking at AI in the music industry, it is important to understand that AI isn’t replacing jobs; it is a tool to work with that helps employees improve. Of course, AI tools differ. In the case of Cyanite, the AI handles boring, repetitive tasks such as music analysis, tagging, and search, while giving people the opportunity to work on something more meaningful and inspiring.

However, introducing AI, whether in music or in any other industry, can carry a variety of risks. That is why we advocate using AI to empower human work. It is important to stay critical, question new technology, and help its creators make the right decisions.

For more Cyanite content on AI and music, check these out:

The 4 Applications of AI in the Music Industry

Translating Sonic Languages: Different Perspectives on Music Analysis