AI Music Recommendation Fairness: Gender Balance

Eylül

Data Scientist at Cyanite

Part 2 of 2. For a more general overview of AI music recommendation fairness, and of gender bias in particular, check out part 1.

Diving Deeper: The Statistics of Fair Music Discovery

While the first part of this article gave an overview of gender fairness in music recommendation systems, this part covers the statistical methods and models that we use at Cyanite to evaluate and ensure AI music recommendation fairness, particularly in gender representation. It assumes familiarity with concepts like logistic regression, propensity scores, and algorithmic bias, so let’s dive right into the technical details.

Evaluating Fairness Using Propensity Score Estimation

To ensure our music discovery algorithms offer fair representation across different genders, we employ propensity score estimation. This technique allows us to estimate the likelihood (or propensity) that a given track will have certain attributes, such as the genre, instrumentation, or presence of male or female vocals. Essentially, we want to understand how different features of a song may bias the recommendation system and adjust for that bias accordingly to enhance AI music recommendation fairness.

Baseline Model Performance

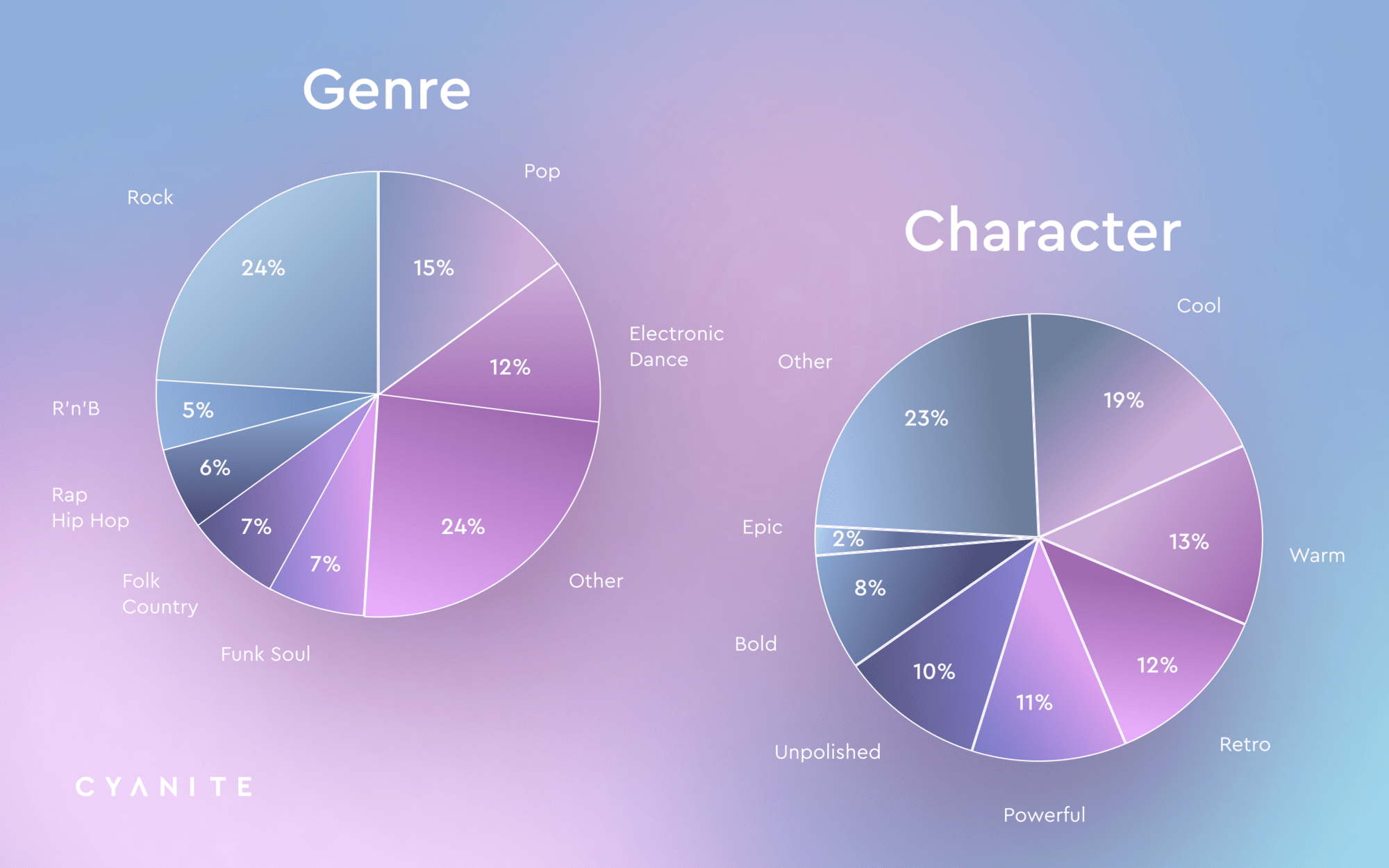

Before diving into our improved music discovery algorithms, it’s essential to establish a baseline for comparison. We created a basic logistic regression model that utilizes only genre and instrumentation to predict the probability of a track featuring female vocals.
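A baseline of this kind can be sketched in a few lines. The example below is a minimal illustration, not Cyanite's actual model: the one-hot feature columns, the toy tracks, and the helper names (`fit_logistic`, `predict_proba`) are all assumptions made for the sketch, and it uses plain gradient descent instead of a library solver.

```python
import math

def sigmoid(z):
    """Logistic function mapping a real-valued score to (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic(X, y, lr=0.5, epochs=2000):
    """Fit weights and bias by batch gradient descent on the log loss."""
    n, n_features = len(X), len(X[0])
    w, b = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j, xj in enumerate(xi):
                grad_w[j] += err * xj
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def predict_proba(w, b, x):
    """Predicted probability that a track with features x has female vocals."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)

# Hypothetical toy data; columns: [is_pop, is_rock, has_guitar, has_flute]
X = [
    [1, 0, 0, 1],
    [1, 0, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 1, 0, 0],
]
y = [1, 1, 0, 0, 0, 1, 1]  # 1 = track features female vocals

w, b = fit_logistic(X, y)
p_pop_flute = predict_proba(w, b, [1, 0, 0, 1])
p_rock_guitar = predict_proba(w, b, [0, 1, 1, 0])
```

On this toy data, the fitted model assigns a higher probability of female vocals to the pop track with flute than to the rock track with guitar, which is exactly the kind of feature-driven pattern the baseline is meant to expose.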

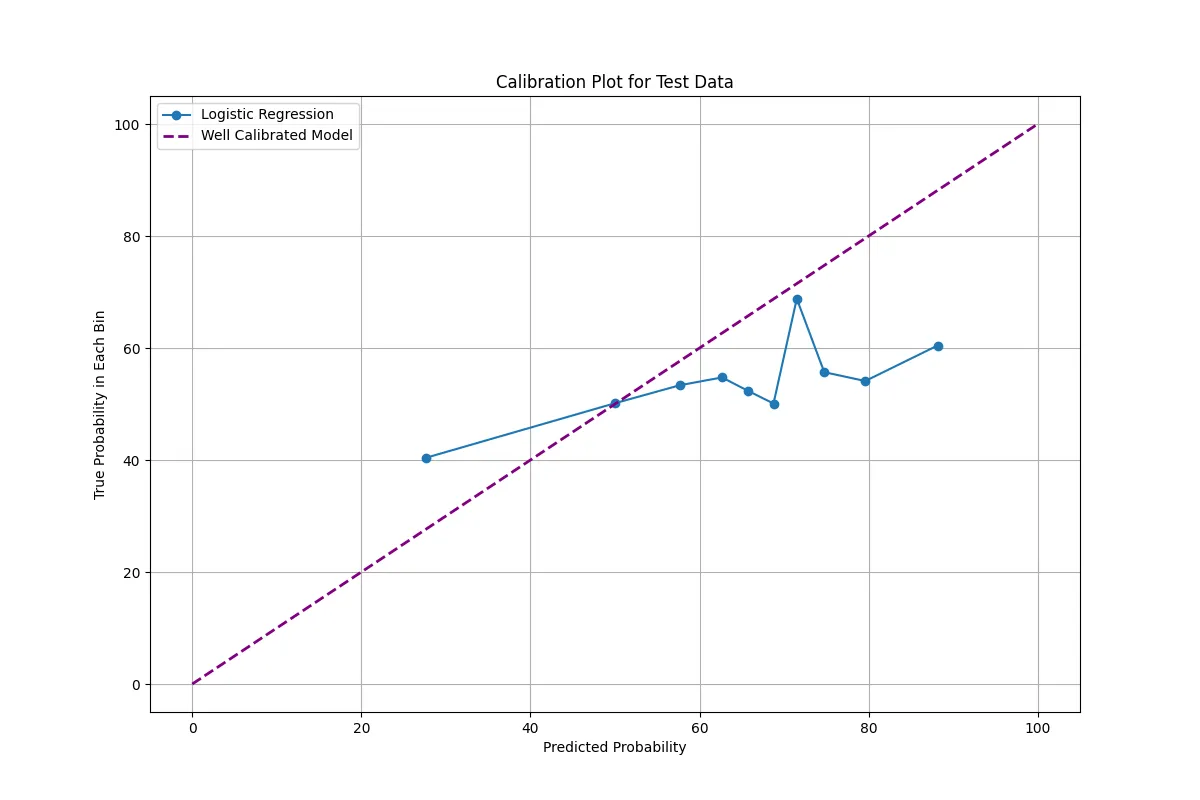

A model is considered well-calibrated when its predicted probabilities (represented by the blue line) closely align with the actual outcomes (depicted by the purple dashed line in the graph below).

Picture 1: Our analysis shows that the logistic regression model used for baseline analysis tends to underestimate the likelihood of female vocal presence within a track at higher probability values. This is evident from the model’s performance, which falls below the diagonal line in reliability diagrams. The fluctuations and non-linearity observed suggest the limitations of relying solely on genres and instrumentation to predict artist representation accurately.
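The data behind a reliability diagram can be computed by grouping predictions into probability ranges and comparing the mean predicted probability with the observed share of positives in each range. The following is a minimal sketch under assumed inputs; the function name `reliability_curve` and the sample values are hypothetical.

```python
def reliability_curve(y_true, y_prob, n_bins=5):
    """Return (mean predicted probability, observed positive rate, count)
    for every non-empty, equal-width probability bin."""
    bins = [[] for _ in range(n_bins)]
    for label, prob in zip(y_true, y_prob):
        idx = min(int(prob * n_bins), n_bins - 1)  # prob == 1.0 goes into the last bin
        bins[idx].append((label, prob))
    curve = []
    for members in bins:
        if not members:
            continue
        mean_pred = sum(prob for _, prob in members) / len(members)
        observed = sum(label for label, _ in members) / len(members)
        curve.append((mean_pred, observed, len(members)))
    return curve

# Hypothetical predictions and labels (1 = female vocals present)
y_prob = [0.10, 0.15, 0.30, 0.35, 0.70, 0.75, 0.85, 0.90]
y_true = [0, 0, 0, 1, 1, 1, 1, 1]

curve = reliability_curve(y_true, y_prob, n_bins=5)
```

For a well-calibrated model, the first two values of each tuple are close to each other; a systematic gap between them at high probabilities is the kind of miscalibration described above.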

Propensity Score Calculation

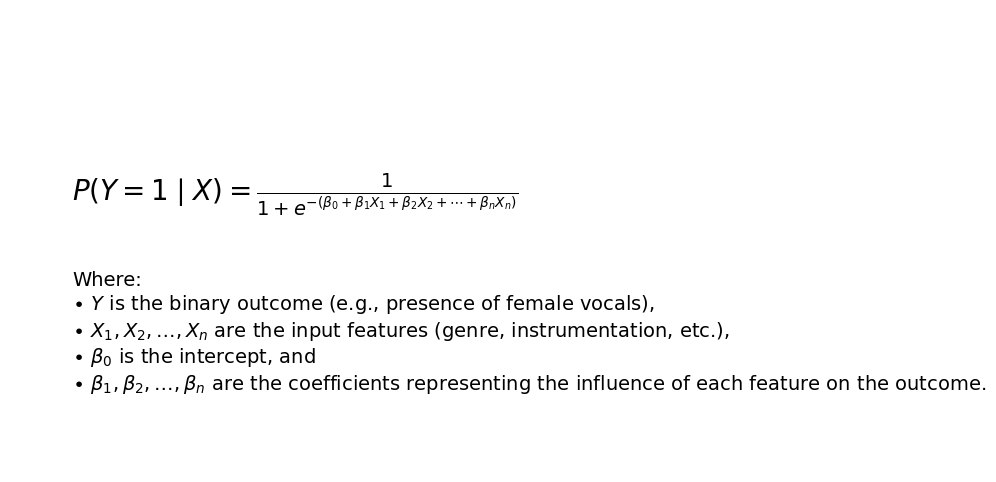

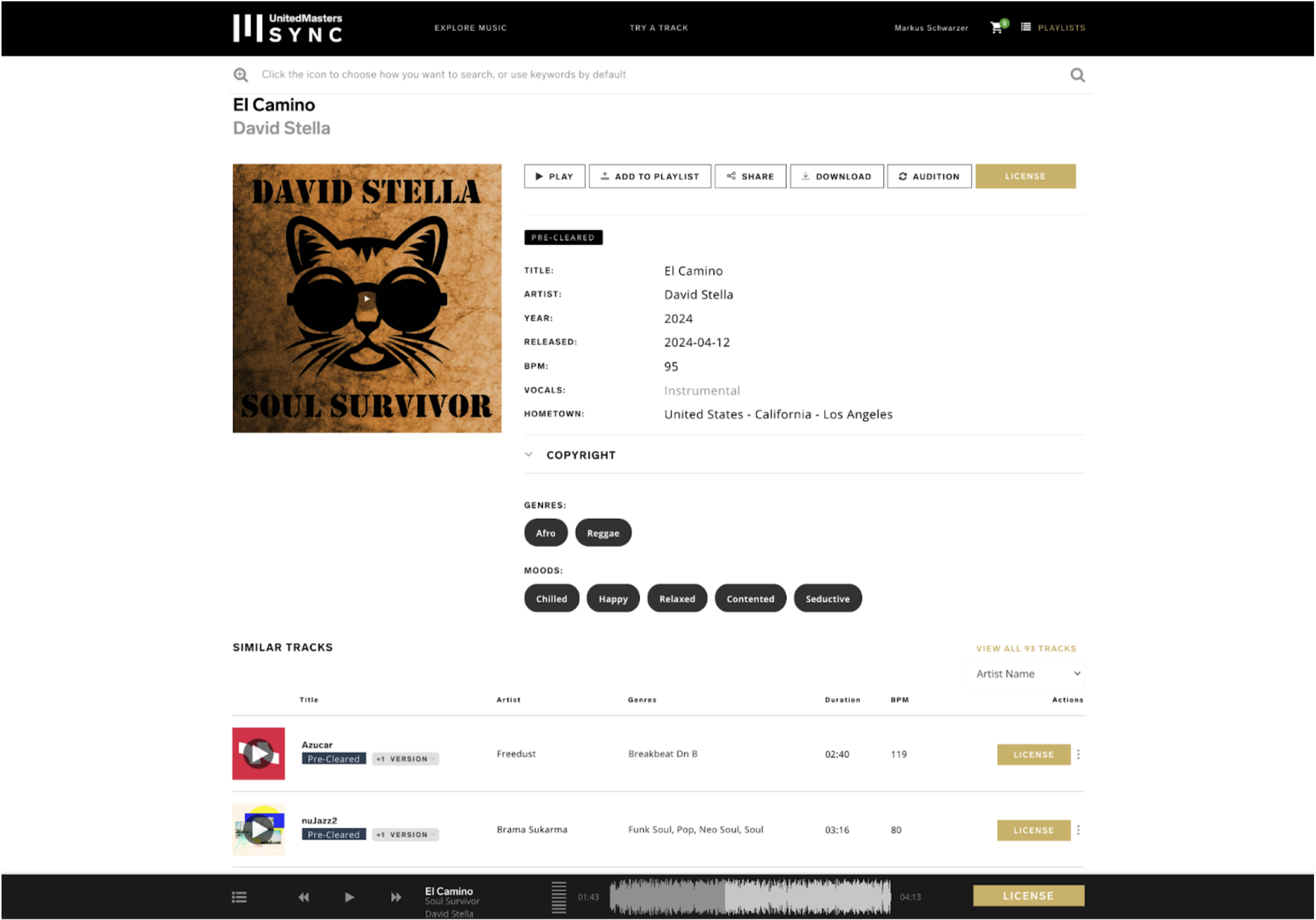

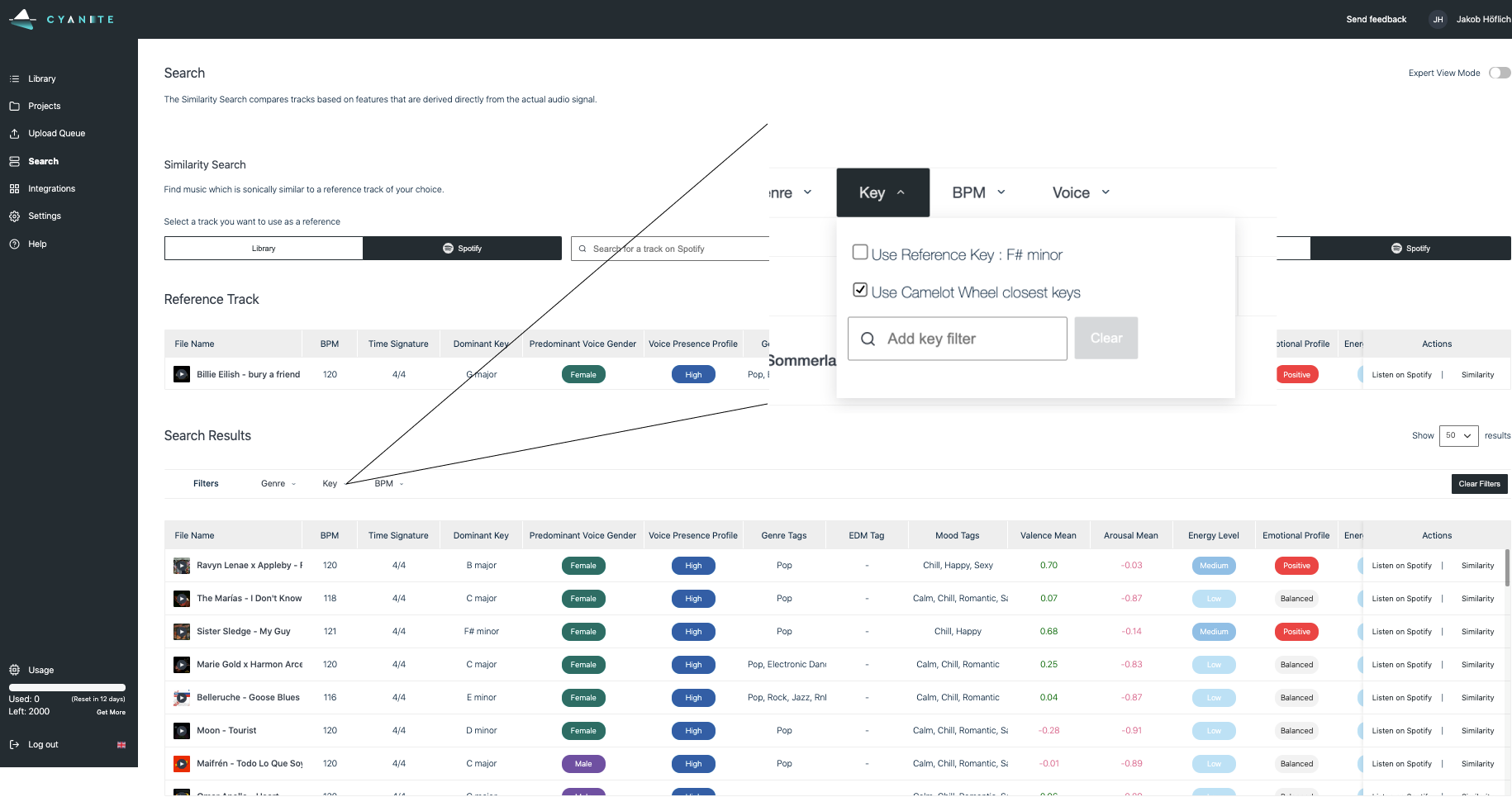

In Cyanite’s Similarity Search – one of our music discovery algorithms – we model the likelihood of female vocals in a track as a function of genre and instrumentation using logistic regression. This gives us a probability score for each track, which we refer to as the propensity score. Here’s a basic formula we use for the logistic regression model:

Picture 2: The output is a probability (between 0 and 1) representing the likelihood that a track will feature female vocals based on its attributes.
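For reference, logistic regression in its standard textbook form can be written as follows. The symbols are illustrative notation chosen here and may differ from the notation in the figure: $x_i$ stands for the encoded genre and instrumentation features of a track, and $\beta_0, \beta_i$ for the learned intercept and coefficients.

```latex
P(\text{female vocals} = 1 \mid x)
  = \sigma\!\left(\beta_0 + \sum_{i} \beta_i x_i\right)
  = \frac{1}{1 + e^{-\left(\beta_0 + \sum_{i} \beta_i x_i\right)}}
```

The sigmoid $\sigma$ squashes the linear combination of features into the interval between 0 and 1, which is why the output can be read as a probability.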

Binning Propensity Scores for Fairness Evaluation

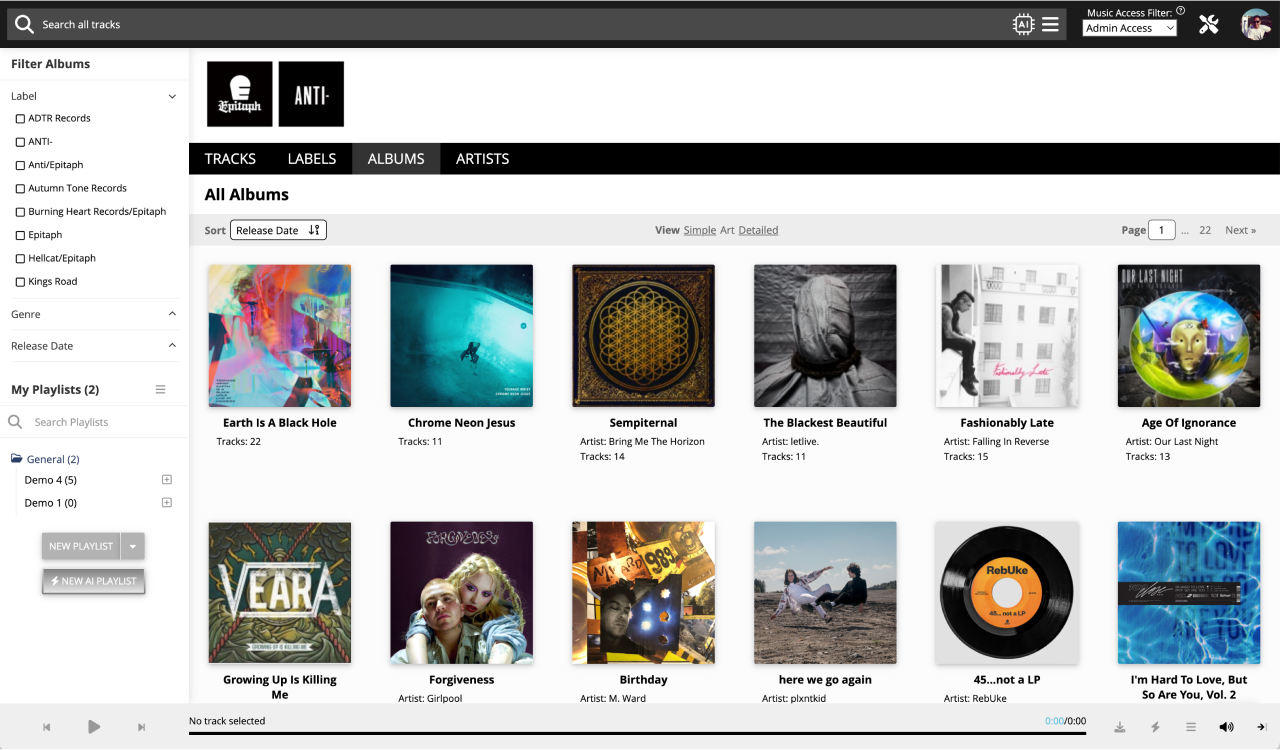

To assess the fairness of our models, we look at how input features such as genre and instrumentation correlate with the gender of the vocals: for each propensity score, we analyze the ratio of female artists in the model outcome. Because propensity scores are continuous, we apply binning to turn them into discrete ranges, which lets us see the trend and the average female vocal presence for each range.

We then calculate the percentage of tracks within each bin that have female vocals as the outcome of our models. This allows us to visualize the actual gender representation across different probability levels and helps us evaluate how well our music discovery algorithms promote gender balance.

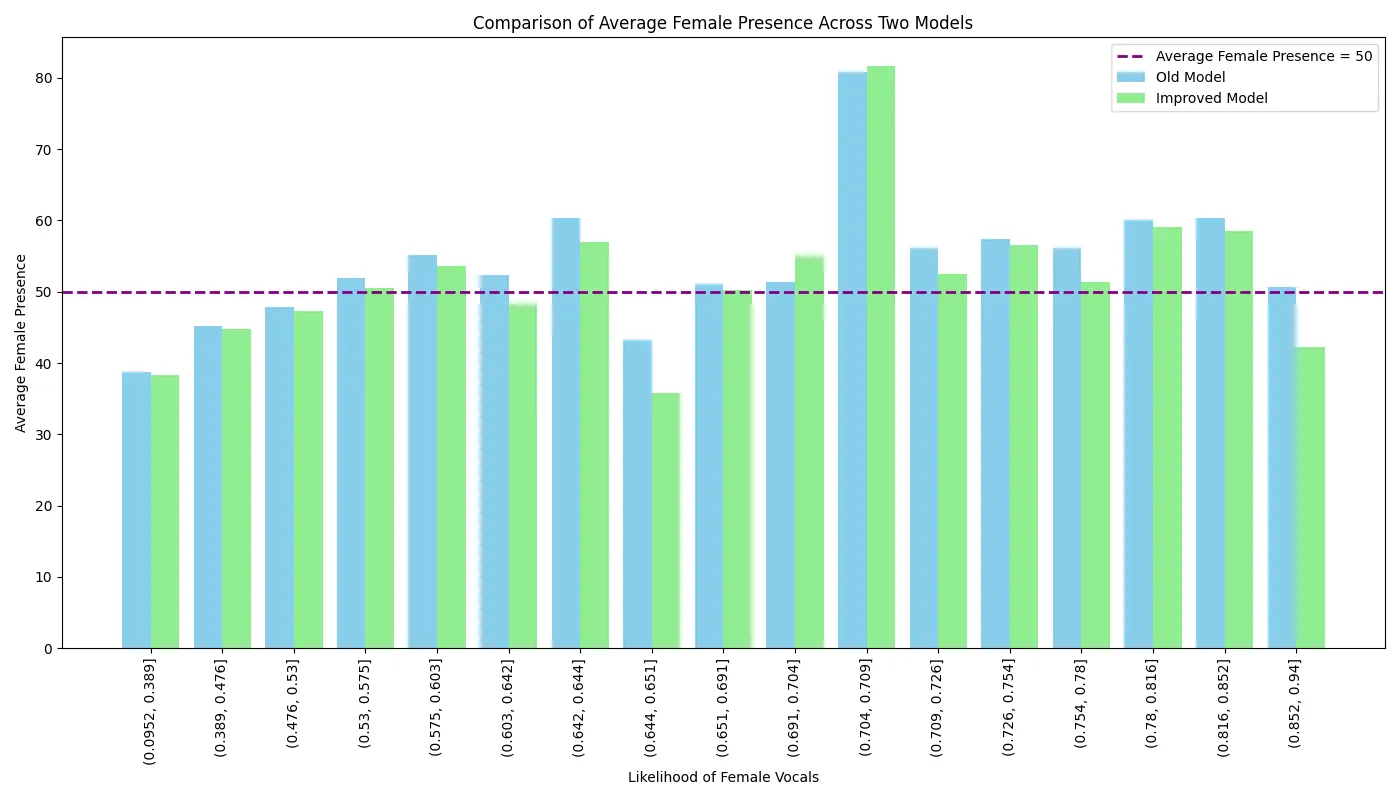

Picture 3: We aim for gender parity in each bin, meaning the percentage of tracks with female vocals should be approximately 50%. The closer we are to that horizontal purple dashed line, the better our algorithm performs in terms of gender fairness.
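The binning step can be sketched as follows. This is a simplified illustration with equal-width bins; the function name `female_ratio_per_bin` and the sample scores are assumptions, not our production code.

```python
def female_ratio_per_bin(propensities, is_female, n_bins=4):
    """Group tracks by propensity score into equal-width bins and return
    (bin_low, bin_high, share of female-vocal tracks) for each non-empty bin."""
    counts = [0] * n_bins
    females = [0] * n_bins
    for score, flag in zip(propensities, is_female):
        idx = min(int(score * n_bins), n_bins - 1)  # score == 1.0 goes into the last bin
        counts[idx] += 1
        females[idx] += flag
    return [
        (i / n_bins, (i + 1) / n_bins, females[i] / counts[i])
        for i in range(n_bins)
        if counts[i] > 0
    ]

# Hypothetical propensity scores and model outcomes (1 = recommended track has female vocals)
propensities = [0.05, 0.20, 0.30, 0.40, 0.60, 0.70, 0.80, 0.95]
is_female = [1, 0, 0, 1, 1, 0, 1, 1]

bins = female_ratio_per_bin(propensities, is_female, n_bins=4)
ratios = [ratio for _, _, ratio in bins]
```

Plotting `ratios` against the bin ranges gives a chart like the one above, where each value can be compared against the 50% parity line.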

Comparative Analysis: Cyanite 1.0 vs Cyanite 2.0

By comparing the results of Cyanite 1.0 and Cyanite 2.0 against our baseline logistic regression model, we can quantify how much fairer our updated algorithm is.

- Cyanite 1.0 showed an average female presence of 54%, indicating a slight bias towards female vocals.

- Cyanite 2.0, however, achieved 51% female presence across all bins, signaling a more balanced and fair representation of male and female artists.

This difference is crucial in ensuring that no gender is disproportionately represented, especially in genres or with instruments traditionally associated with one gender over the other (e.g., guitar for males, flute for females). Our results underscore the improvements in AI music recommendation fairness.
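The distance from parity implied by these averages can be worked out directly. The sketch below uses the two reported averages; the per-bin helper and its example values are hypothetical and only illustrate why checking each bin is stricter than checking the overall average.

```python
PARITY = 0.50  # target share of tracks with female vocals

def parity_gap(female_share):
    """Absolute distance from 50% parity, as a fraction."""
    return abs(female_share - PARITY)

def mean_parity_gap(bin_shares):
    """Average per-bin distance from parity. Opposite deviations in
    different bins cannot cancel out here, unlike in an overall average."""
    return sum(parity_gap(share) for share in bin_shares) / len(bin_shares)

gap_v1 = parity_gap(0.54)  # Cyanite 1.0 average reported above
gap_v2 = parity_gap(0.51)  # Cyanite 2.0 average reported above

# Hypothetical per-bin shares, for illustration only (not measured values)
example_bins = [0.40, 0.60, 0.50, 0.50]
example_gap = mean_parity_gap(example_bins)
```

With the reported averages, the gap shrinks from 4 percentage points to 1. The hypothetical bins average out to exactly 50% overall, yet their mean per-bin gap is 5 percentage points, which is why we evaluate parity within each bin.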

How Propensity Scores Help Balance the Gender Gap

Propensity score estimation is a powerful tool that allows us to address biases in the data samples used to train our music discovery algorithms. Specifically, propensity scores help ensure that features like genre and instrumentation do not disproportionately affect the representation of male or female artists in music recommendations.

The method works by estimating the likelihood of a track having certain features (such as instrumentation, genre, or other covariates) and then checking whether those features directly influence our Similarity Search by putting our algorithms to the test. In this way, we investigate the spurious correlations that are directly related to gender bias in our dataset, which stems partly from societal biases.

Our goal is a scenario in which genders are represented equally in all kinds of music. Understanding these correlations allows us to fine-tune the model’s behavior to ensure more equitable outcomes and further improve our algorithms.

Conclusion: Gender Balance

In conclusion, our comparative analysis of artist gender representation in music discovery algorithms highlights the importance of music recommendation fairness in machine learning models.

Cyanite 2.0 demonstrates a more balanced representation, as evidenced by a near-equal presence of female and male vocals across various propensity score ranges.

If you’re interested in using Cyanite’s AI to find similar songs or learn more about our technology, feel free to reach out via mail@cyanite.ai.

You can also try our free web app to analyze music and experiment with similarity searches without needing any coding skills.