How to Use AI Music Search for Your Music Catalog

The burgeoning field of artificial intelligence has produced more tools than we can count, many of them aiming to revolutionize the music industry. Within this landscape, AI-based music tagging and search stand out as proven technologies with quantifiable benefits for music companies worldwide.

This article aims to demystify the potential of AI music search and recommendation systems, offering insights to help music catalog owners and distributors determine the relevance of this technology for their businesses. Whether navigating a vast catalog or seeking innovative solutions for content discovery, this guide is tailored to your needs.

If you are also interested in auto-tagging music, please check out our article "The Power of Automatic Music Tagging with AI."

Assessing Your Needs

Understanding the suitability of AI music search begins with assessing your specific requirements. Consider the following prompts:

- Has your catalog experienced significant growth from various sources?

- Do a variety of different users access and search through your catalog?

- Is sync a key aspect of your company?

- Do you find yourself repeatedly using the same tracks while underutilizing others?

If you answer affirmatively to at least two of these questions, further exploration of AI music search is advisable.

Exploring Options

Three primary AI-driven search options dominate this landscape:

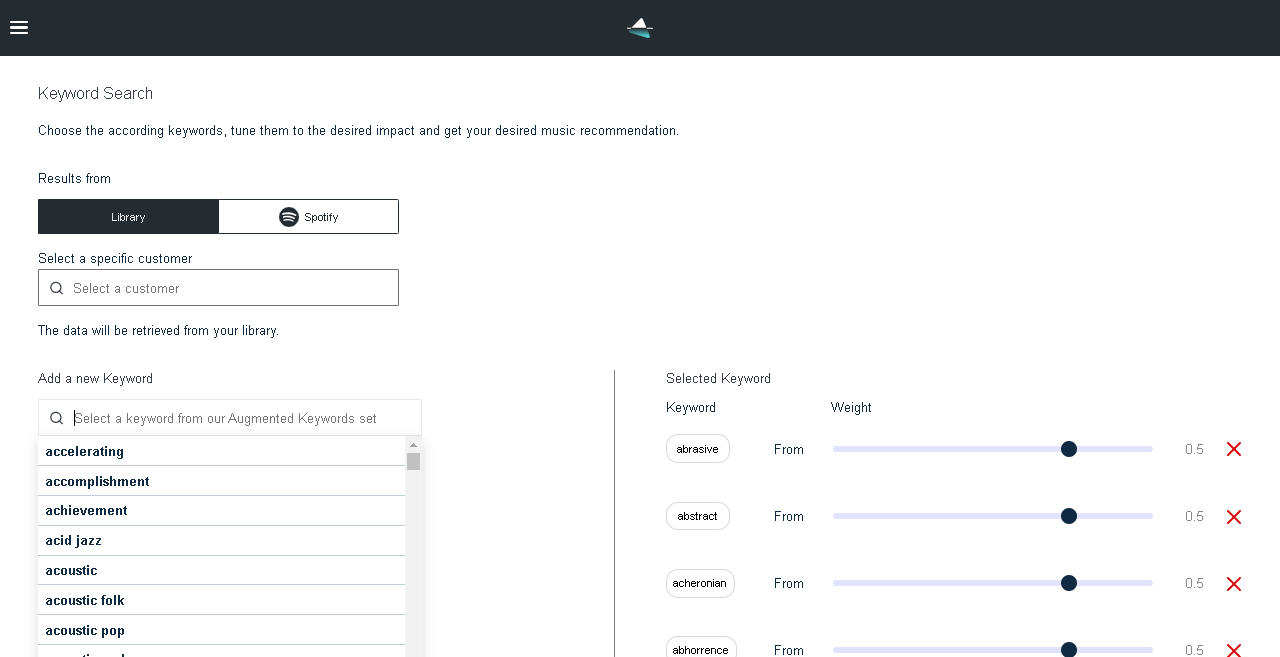

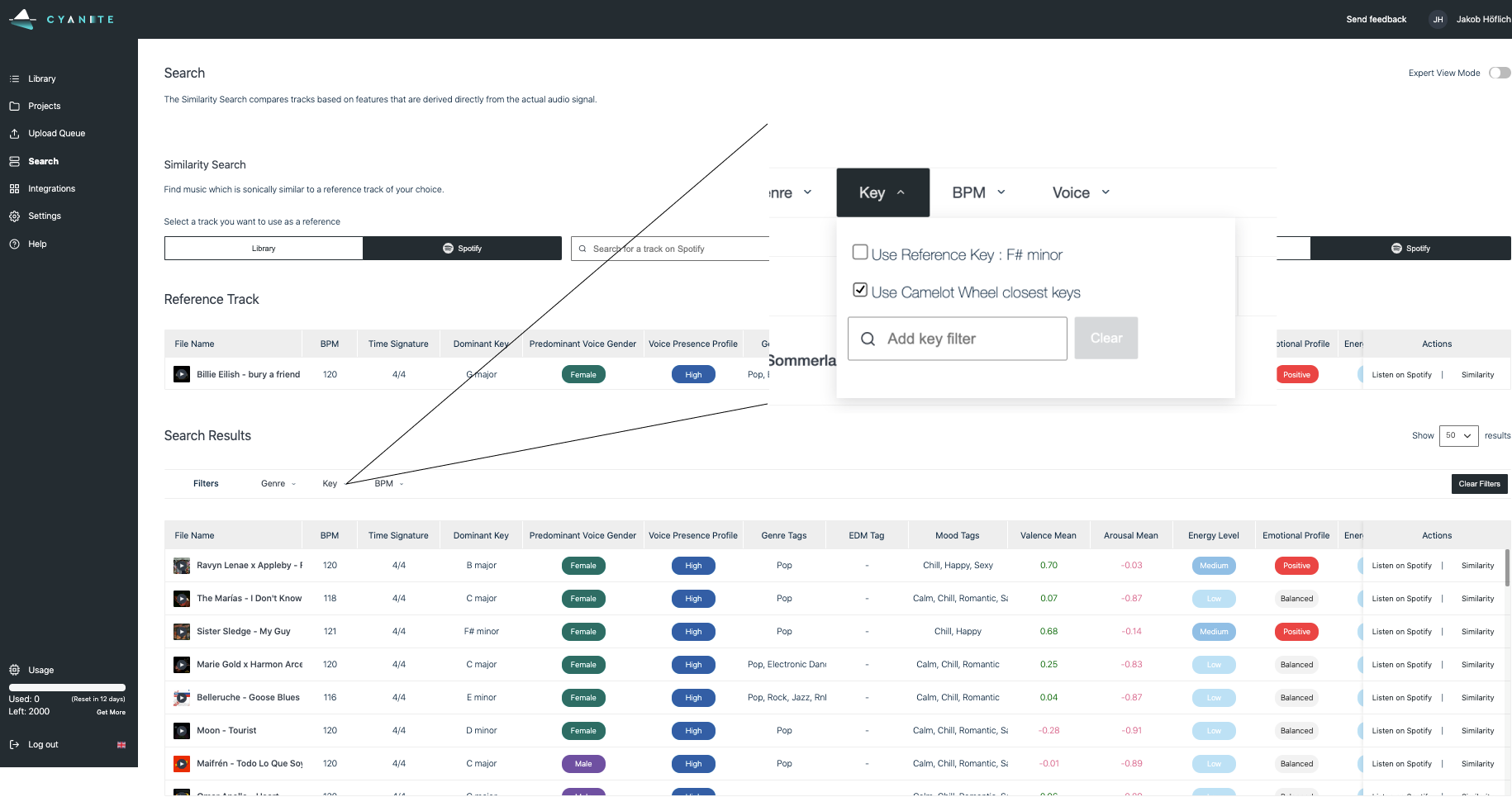

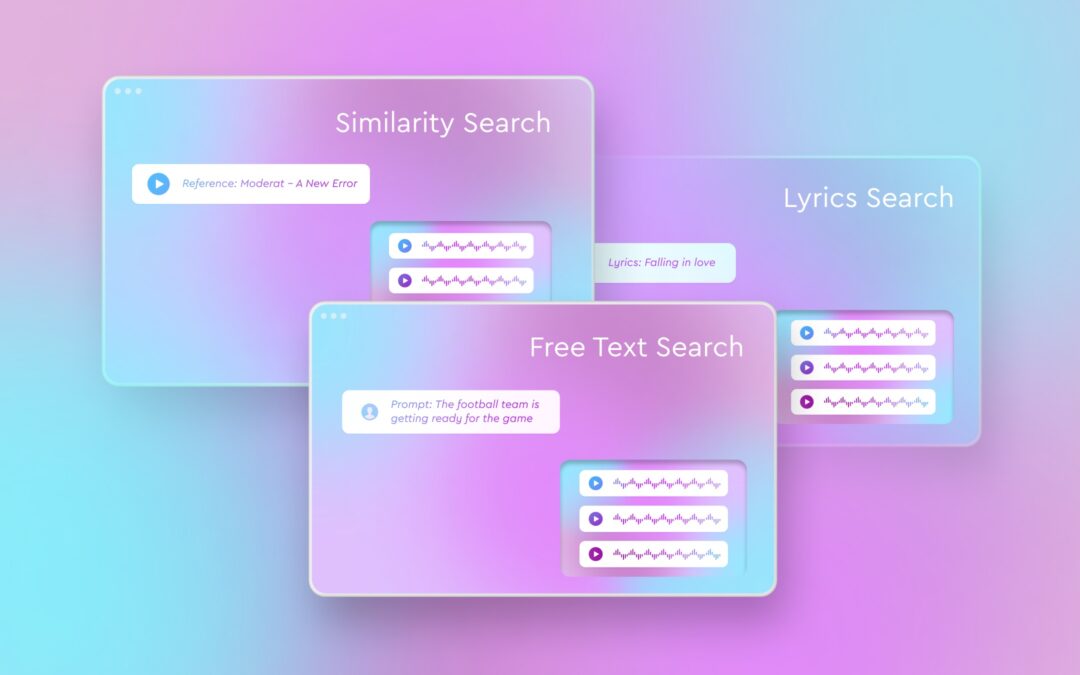

Similarity Search: Ideal for discovering tracks similar to a reference song, this feature aids sync and licensing teams worldwide. Whether you are finding songs in your catalog that resemble worldwide hits or expanding the song selection for a sync pitch, this tool enhances any music search.
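To make the idea of "tracks similar to a reference song" more concrete, here is a minimal sketch of one common way similarity search can work: each track is represented by a numeric feature vector, and the catalog is ranked by cosine similarity to the reference. This is an illustration under stated assumptions, not a description of Cyanite's implementation. The three-dimensional vectors, the track names, and the `find_similar` helper are all hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine similarity of two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction to compare
    return dot / (norm_a * norm_b)


def find_similar(reference, catalog, top_k=3):
    """Rank catalog tracks by similarity to the reference vector."""
    scored = [
        (track_id, cosine_similarity(reference, vector))
        for track_id, vector in catalog.items()
    ]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]


# Hypothetical feature vectors; a real system would derive far
# higher-dimensional vectors from the audio itself.
catalog = {
    "track_a": [0.9, 0.1, 0.3],
    "track_b": [0.1, 0.8, 0.5],
    "track_c": [0.85, 0.15, 0.35],
}
reference_hit = [0.88, 0.12, 0.32]

results = find_similar(reference_hit, catalog, top_k=2)
for track_id, score in results:
    print(f"{track_id}: {score:.3f}")
```

In this toy catalog, the two tracks whose vectors point in nearly the same direction as the reference rank ahead of the one that does not, which is the behavior a sync team relies on when searching from a reference song.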

Free Text Search (Searching by natural language): Utilizing text prompts, this search method facilitates music discovery for visual projects and instances where translating thoughts into keywords proves challenging. Its versatility extends from B2B applications, common in music licensing, to B2C scenarios, as demonstrated by Cyanite’s collaboration with streaming platforms like Sonu.

Lyrics Search: Enhance your search experience by delving into the lyrical content of songs. This feature adds a contextual dimension to sound-based searches, allowing users to find songs based on specific words or themes within their lyrics. Whether you’re searching for songs containing a particular word like “love” or exploring themes such as “falling in love,” this functionality offers a nuanced approach to music discovery.
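As a rough illustration of searching lyrics for a word or phrase, the sketch below matches a query against whole words in each track's lyrics. It only covers literal matches such as "love" or "falling in love"; finding songs by theme would need a semantic approach that this example does not attempt. The `search_lyrics` helper and the sample lyrics are hypothetical and are not taken from any Cyanite API.

```python
import re


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def search_lyrics(query, lyrics_by_track):
    """Return the IDs of tracks whose lyrics contain the query
    as a whole word or as a consecutive sequence of words."""
    query_tokens = tokenize(query)
    if not query_tokens:
        return []
    n = len(query_tokens)
    matches = []
    for track_id, lyrics in lyrics_by_track.items():
        tokens = tokenize(lyrics)
        # Slide a window of the query's length over the lyric tokens.
        if any(tokens[i:i + n] == query_tokens
               for i in range(len(tokens) - n + 1)):
            matches.append(track_id)
    return matches


# Hypothetical sample lyrics, for illustration only.
lyrics_by_track = {
    "song_1": "I keep falling in love with you",
    "song_2": "Love is all you need",
    "song_3": "Driving through the night",
}

word_hits = search_lyrics("love", lyrics_by_track)
phrase_hits = search_lyrics("falling in love", lyrics_by_track)
print(word_hits, phrase_hits)
```

Matching on whole tokens rather than substrings keeps a query like "love" from also returning lyrics that only contain "glove".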

If you want to test these AI music search options, you can easily create a free account for Cyanite's web app and try them out.

Building vs. Buying

Choosing between building an in-house AI solution or licensing from an external provider hinges on several factors:

- Do you possess an internal data science and development team?

- Do you have a meticulously tagged library of at least 500,000 audio tracks?

- Can you allocate a significant budget for AI development (mid-six-figure sum) and ongoing updates?

- Are you prepared to invest in monthly maintenance costs for a proprietary AI system (monthly five-figure sum)?

Building your own AI may be viable if you answer at least two of these questions positively. Alternatively, partnering with an AI provider like Cyanite presents a compelling option for those lacking the resources or expertise for independent development.
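If it helps to run this checklist across several teams or business units, the "at least two yes answers" rule can be written down as a small helper. This is only a sketch of the rule of thumb above; the question keys and the `should_consider_building` function are hypothetical names chosen for this example.

```python
def should_consider_building(answers, threshold=2):
    """Return True if enough checklist questions were answered 'yes'."""
    yes_count = sum(1 for answered_yes in answers.values() if answered_yes)
    return yes_count >= threshold


# Hypothetical answers for one company, keyed by the four questions above.
answers = {
    "has_internal_data_science_team": True,
    "has_tagged_library_of_500k_tracks": False,
    "can_fund_mid_six_figure_development": False,
    "can_fund_five_figure_monthly_maintenance": True,
}

build_is_viable = should_consider_building(answers)
print("Consider building in-house" if build_is_viable
      else "Consider licensing from a provider")
```

The threshold is a parameter so the same helper can be reused with a stricter cut-off if your organization wants more certainty before committing to an in-house build.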

You can also book a free, individual consultation with our experts.

Long Story Short

AI music search offers a transformative solution for navigating the complexities of music catalogs, empowering businesses with enhanced efficiency and discovery capabilities. Whether leveraging similarity search or text-based prompts, the adoption of AI-driven technologies promises to redefine content discovery in the music industry.

Explore the possibilities of AI music search with Cyanite, and unlock the full potential of your music catalog today.

Your Cyanite Team.