How to Create Mood- and Contextual Playlists With Dynamic Keyword Search

In the last article on the blog, we covered how Cyanite’s Similarity Search can be used in music catalogs. In this article, we explore another way to search for songs, Dynamic Keyword Search, and how to leverage it to create mood- and context-based playlists.

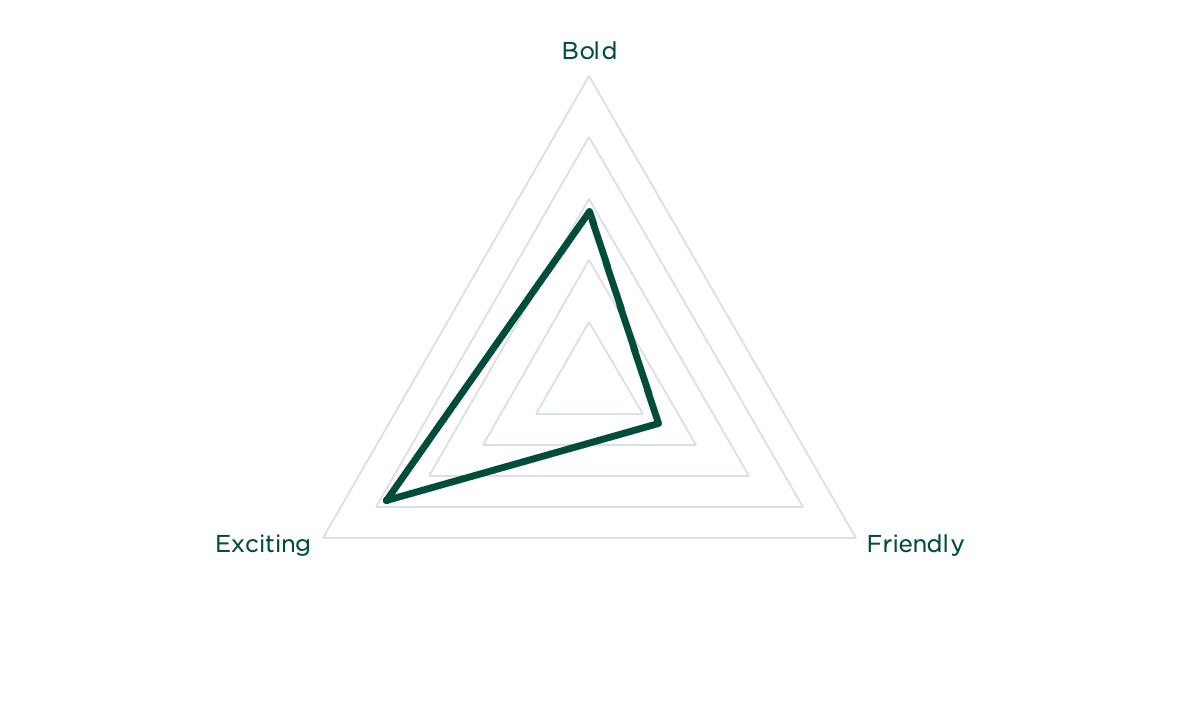

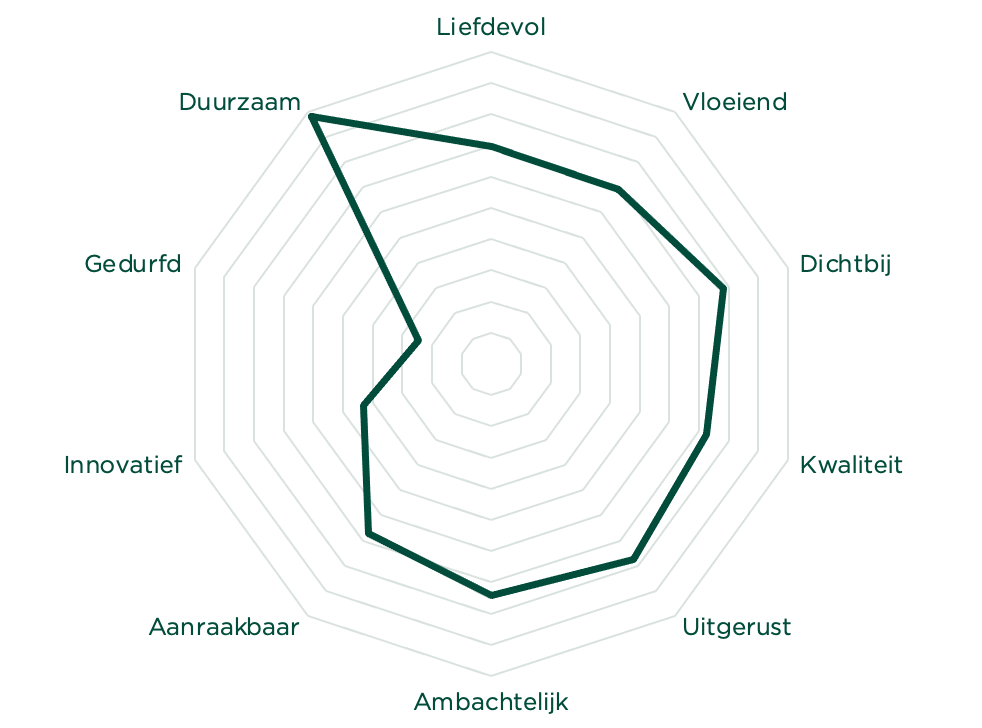

Rather than relying on a reference track, Dynamic Keyword Search allows you to select and combine keywords from a list of 1,500 and adjust the impact of each keyword on the search. This is especially helpful for creating playlists where songs match in mood, activity, or other characteristics.

But before we explain how this feature works, let’s explore how playlists are created. What makes a perfect playlist? Why are playlists so essential when utilizing a music catalog? And how can the Dynamic Keyword Search help with that?

There are three techniques for playlist creation:

- Manual creation (individually picking songs)

- Automatic generation and recommendation

- Assisted playlist creation.

Historically, manual creation is the oldest and most basic approach. It involves picking songs individually for each playlist. It might be the simplest technique, but the amount of time and effort that goes into it can be overwhelming. Imagine you are working with a catalog of 100,000 tracks and have to create an “Energetic Workout” and a “Beach Party” playlist.

Automatic generation uses various algorithms to create playlists with no human intervention. One of the most famous ones is, for example, “Discover Weekly” by Spotify.

Assisted playlist creation uses music technology to guide and support manual playlist creation.

In the research by Dias, Goncalves, and Fonseca, manual playlist creation was found to be the most effective in terms of control, engagement, and trust. This means that people trust handmade playlists. Manual creation also provides the most control over the outcome and engages editors in the creation process.

Automatic creation was found to be the most effective in adapting to the listeners’ needs. There is no manual control involved, so automatic tools can adapt and change playlists in no time.

Assisted techniques were found to be the most effective in terms of engagement and trust while still being quick to use. They also performed well on the song selection criteria. According to the study, song selection is the most critical factor in the playlist creation process. However, the question of what makes a song right for a particular playlist is still open. Apart from that, assisted techniques proved to be optimal in control and serendipity, and they can adapt to listening preferences rather easily.

To jump ahead: Dynamic Keyword Search is exactly such an assisted technique for playlist creation.

Why are search tools for playlist creation important in a catalog?

Playlists have been known to be the ultimate tool for promoting music. We already covered the ways artists can get on Spotify and other people’s playlists in other articles on the blog. But creating playlists can also be beneficial for catalog owners and catalog users, be it professional musicians or labels. Here is why:

- You can realize new and passive modes to exploit and monetize your catalog. If you make it easier for your users and/or customers to explore your catalog, you directly increase its value.

- Playlists are used as a promotional tool to showcase the works of an artist or the inspirations behind the artist. This article recommends creating two playlists: a vibe playlist and a catalog playlist for brand engagement and streams.

- Playlists help organize music by theme or context.

- With playlist creation features, users save time finding songs that fit.

- Playlists can be indexed separately in search results. This helps music get discovered.

So playlist creation tools in a catalog are pretty important. Similarity Search is one of these tools. Another one, which we focus on in this article, is Dynamic Keyword Search.

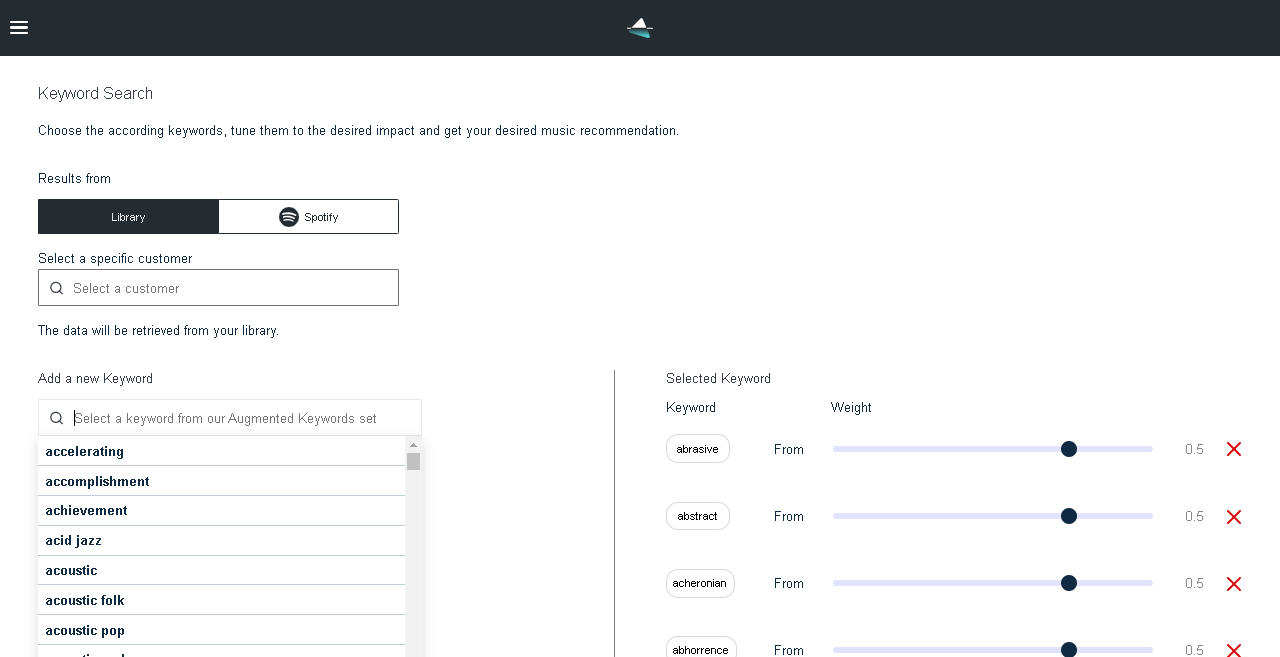

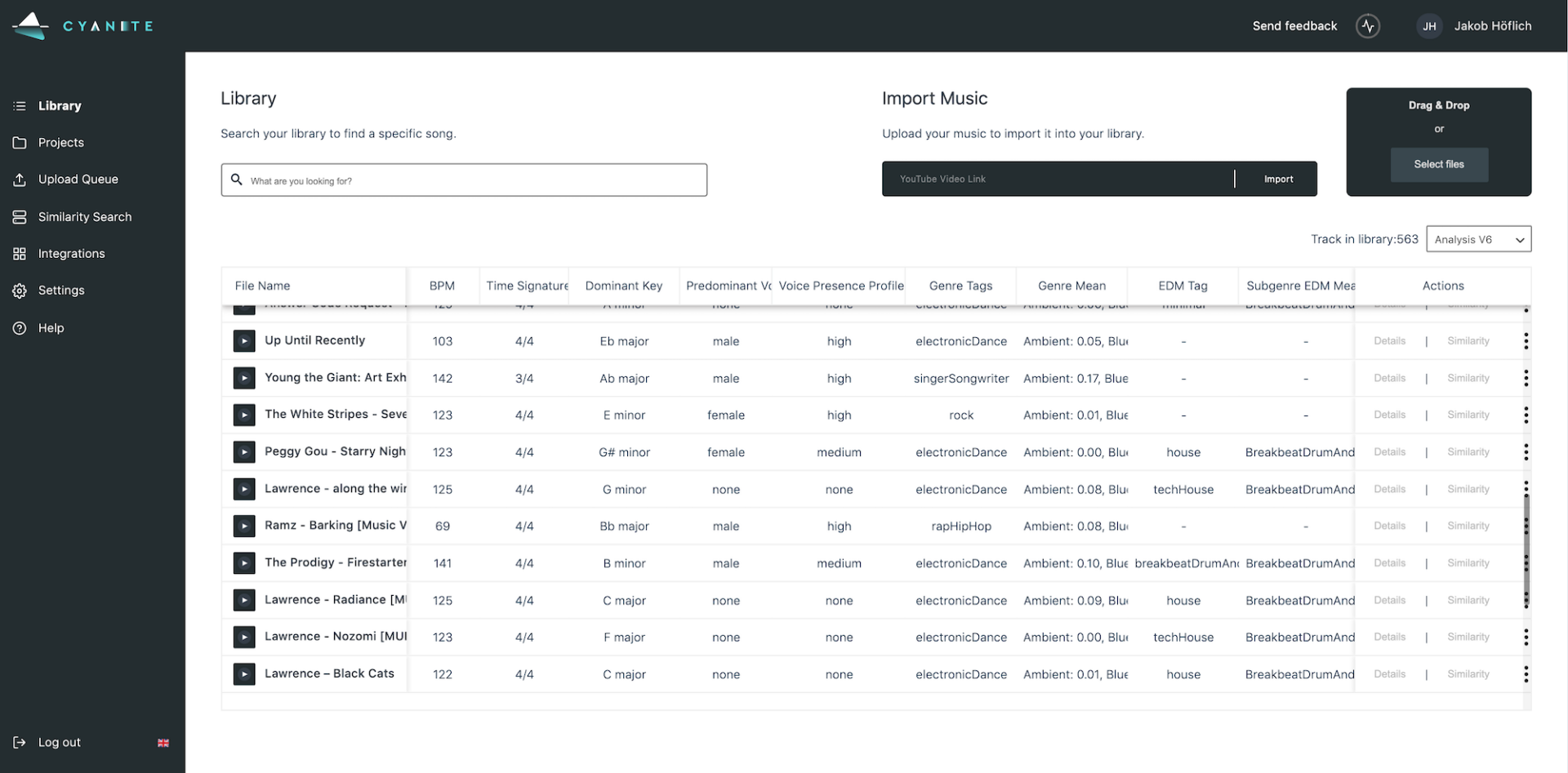

Cyanite’s Dynamic Keyword Search allows for searching tracks based on multiple keywords simultaneously, where each keyword can be weighted for its impact on the search. This leads to more relevant search results with less time and effort spent on searching.

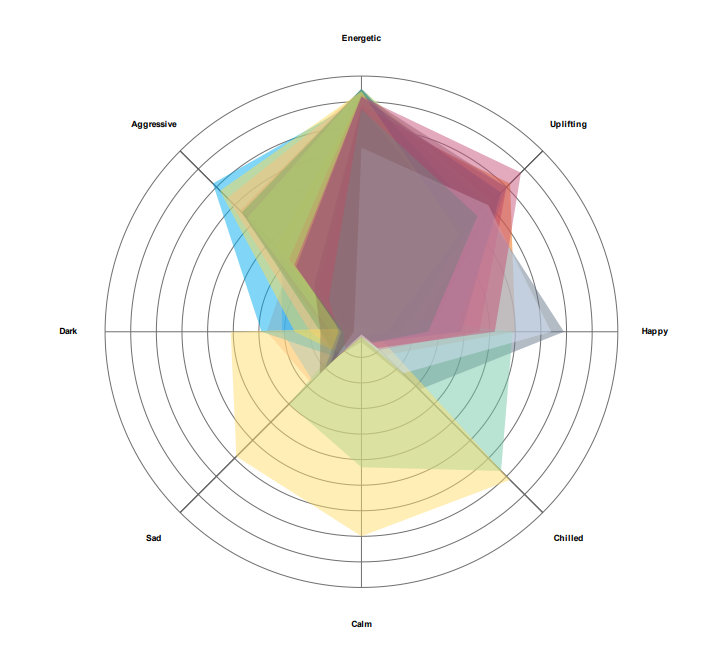

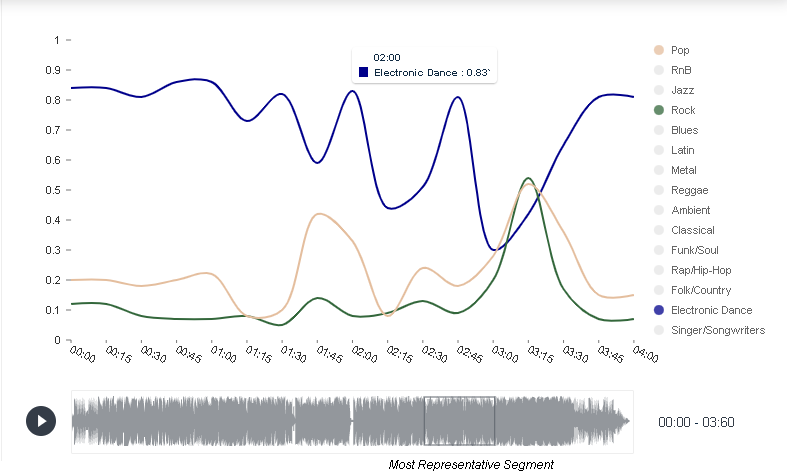

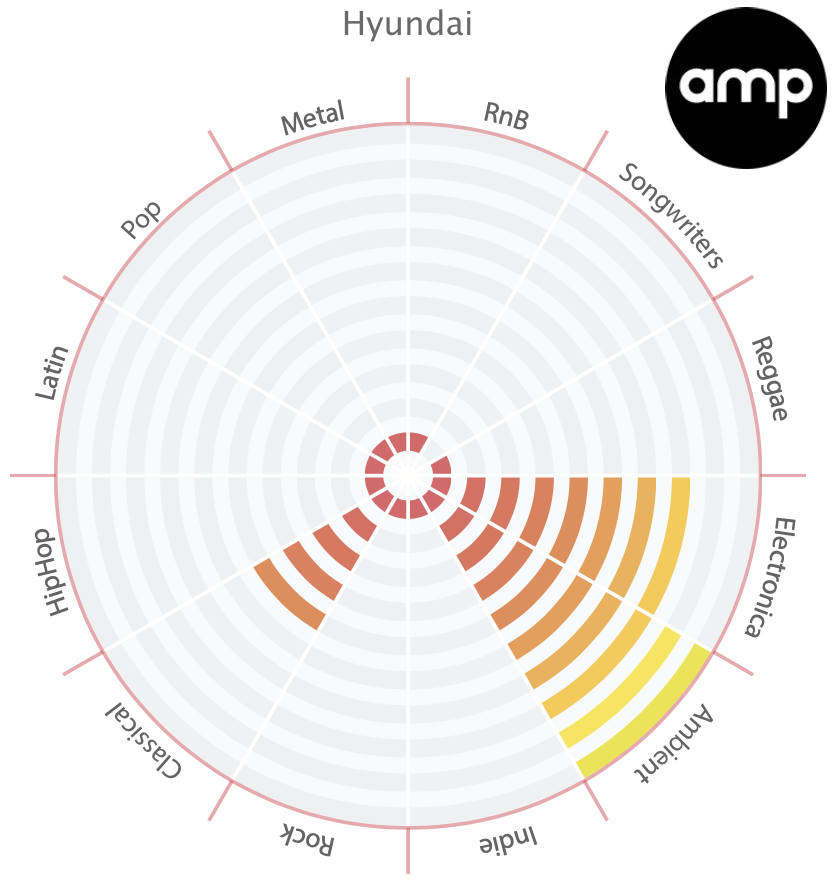

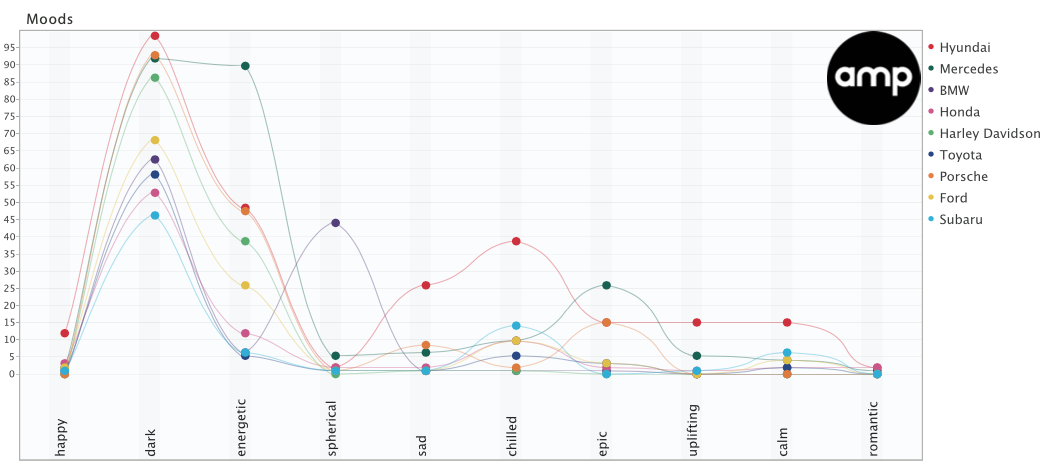

Usually, the keywords you choose represent your idea of what you’re searching for, but you don’t have full control over how they shape the results. With Dynamic Keyword Search, you can increase the precision of the search results by adjusting the impact of each keyword, so you can express exactly what you’re looking for. There are 1,500 keywords to choose from, representing characteristics of a song such as mood, genre, situation, brand values, and style. Each keyword’s impact can then be adjusted on a scale from -1 to 1: a weight of 1 gives the keyword heavy impact, a weight near 0 gives it almost none, and a negative weight steers the results away from it (a weight of -1 on “sad” returns tracks that are not at all sad).
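To make the idea of weighted keywords concrete, here is a minimal Python sketch. It is not Cyanite’s implementation or API: the track data, the per-keyword scores between 0 and 1, and the simple weighted-sum ranking are all assumptions made for illustration.

```python
from typing import Dict, List, Tuple

# Hypothetical library: each track has keyword scores between 0 and 1.
LIBRARY: Dict[str, Dict[str, float]] = {
    "Track A": {"dreamy": 0.9, "rock": 0.8, "sad": 0.1},
    "Track B": {"dreamy": 0.7, "rock": 0.9, "sad": 0.8},
    "Track C": {"happy": 0.9, "electro": 0.7},
}


def score_track(track_keywords: Dict[str, float], query: Dict[str, float]) -> float:
    """Weighted sum of the track's keyword scores (assumed scoring rule).

    Keywords the track does not have count as 0. A negative weight
    lowers the score of tracks that match that keyword.
    """
    return sum(
        weight * track_keywords.get(keyword, 0.0)
        for keyword, weight in query.items()
    )


def rank_tracks(
    library: Dict[str, Dict[str, float]], query: Dict[str, float]
) -> List[Tuple[str, float]]:
    """Return (title, score) pairs, best match first."""
    scored = [(title, score_track(kw, query)) for title, kw in library.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)


query = {"dreamy": 1.0, "rock": 1.0, "sad": -1.0}
ranking = rank_tracks(LIBRARY, query)
for title, score in ranking:
    print(f"{title}: {score:.2f}")
```

In this toy example, Track B is dreamy and rock but also sad, so the negative weight on “sad” pushes it below Track A.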

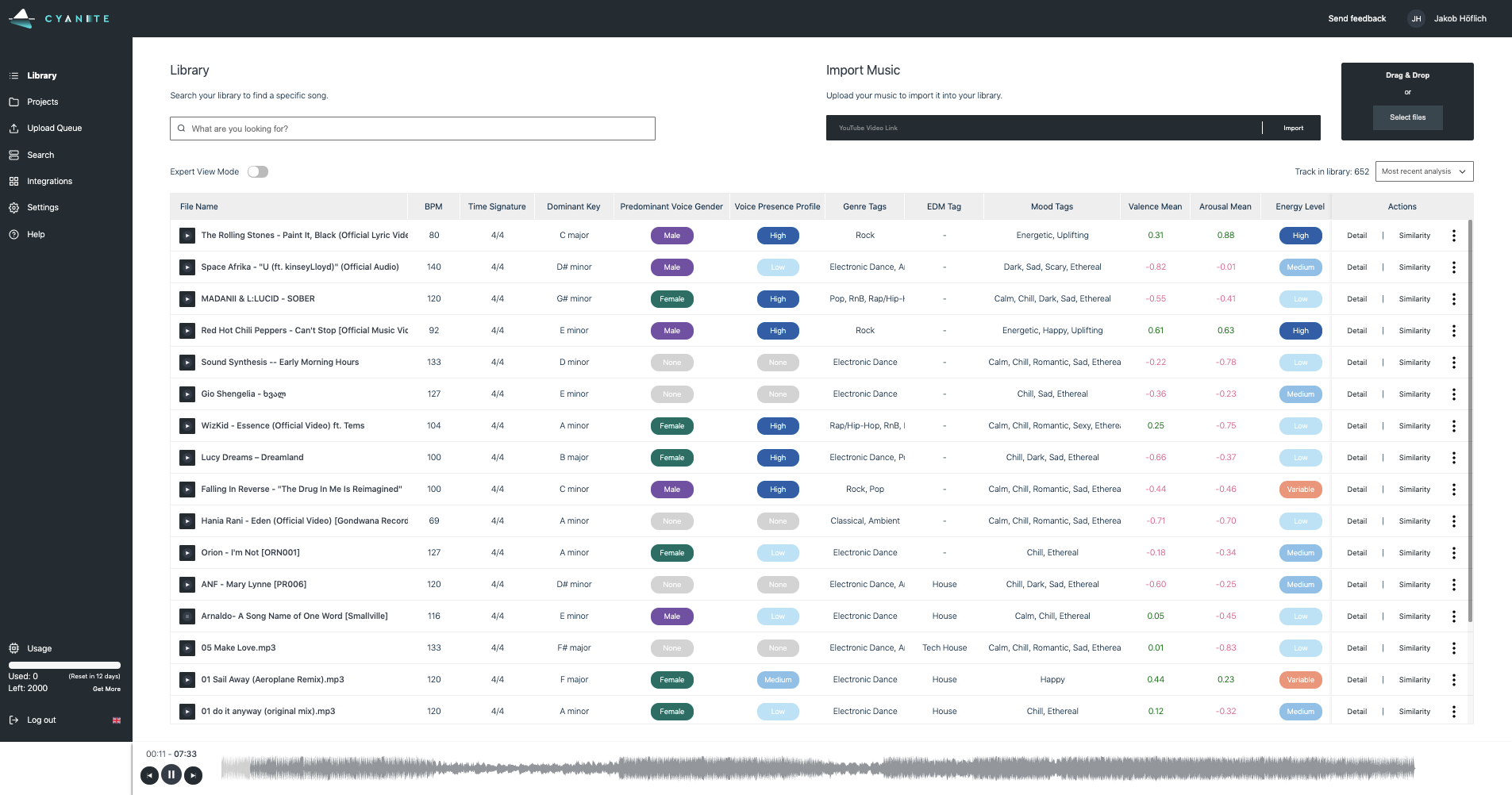

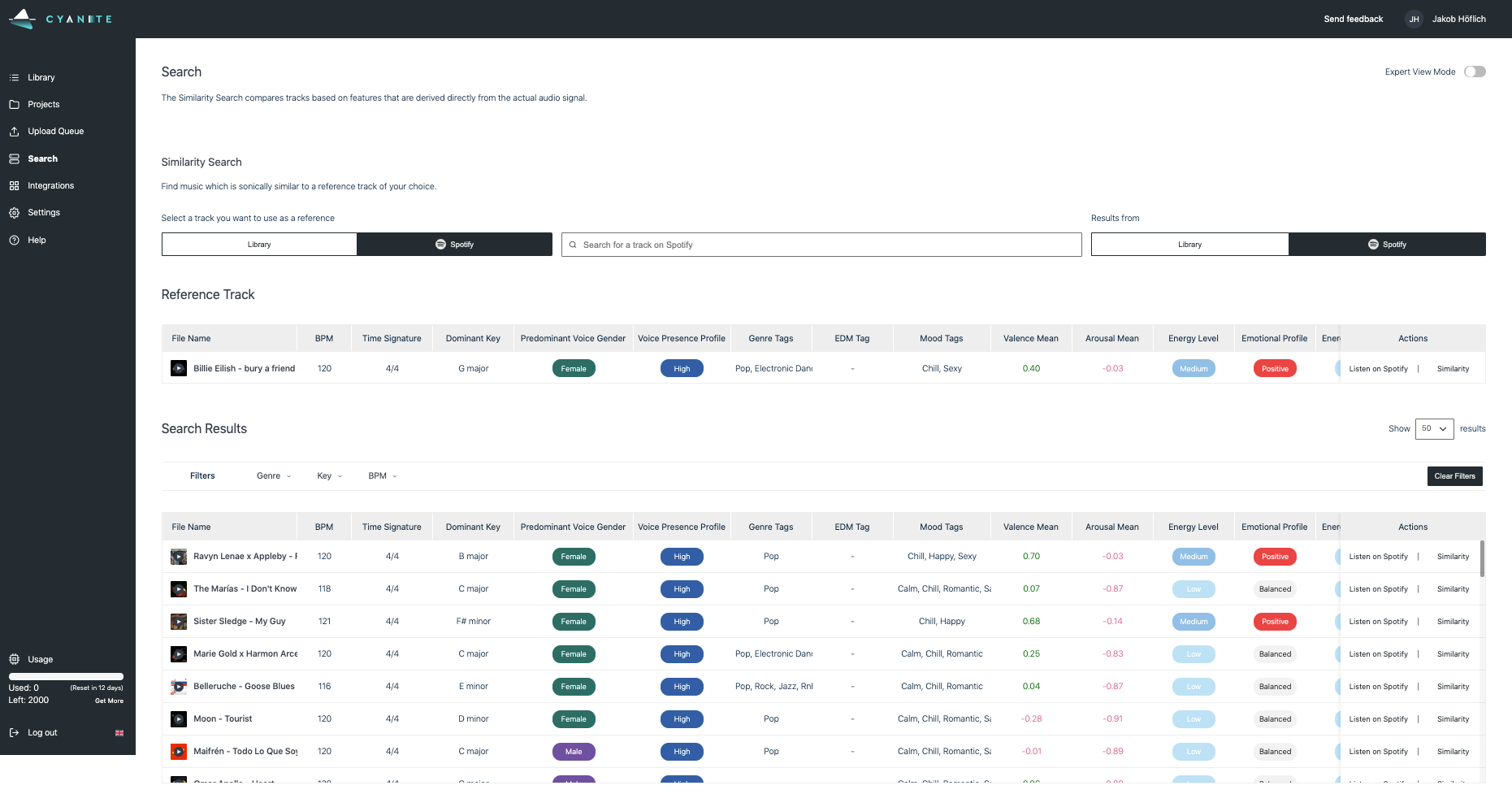

Cyanite Dynamic Keyword Search interface

Not all playlists are created equal. Some are better than others. This study outlines 5 characteristics of playlists that can indicate a good or bad playlist. The authors of the study assumed that user-generated playlists could be an indicator for the algorithms to create good playlists. Here are the 5 playlist characteristics they outlined:

- Popularity – most user-generated playlists feature popular tracks first. This may not be obvious, but grabbing the listeners’ attention from the start is important.

- Freshness – playlists should contain recently released tracks. Most playlists in the study contain tracks released on average in the last 5 years.

- Homogeneity and diversity – playlists on average cover a very limited number of genres, so playlists should be rather homogeneous. However, diversity plays a significant part in listeners’ satisfaction, so it should be incorporated into the playlist as well.

- Musical Features – in terms of energy, playlists with a narrow energy spectrum and a low average energy level are preferred, though they can include some high-energy tracks.

- Transition and Coherence – the similarity between the tracks defines the smoothness in transition and coherence of the playlist. Usually, user-generated playlists have a better similarity in the first half and a lesser similarity in the second half.

As the study deals with a variety of user-generated playlists, it can’t be said that all of them were equally good playlists. But the criteria outlined above can help improve playlists by understanding the character of the playlist. With Dynamic Keyword Search, you can control such criteria as homogeneity and diversity, musical features such as energy level, and similarity between the tracks to ensure transition and coherence.
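As a rough illustration of how two of these criteria could be checked for a draft playlist, the Python sketch below computes the energy spread and the average similarity between neighboring tracks. The `energy` values, the `features` vectors, and the use of cosine similarity are assumptions for this example, not measures taken from the study or from Cyanite.

```python
import math
from statistics import mean, pstdev
from typing import Dict, List, Optional, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equally long feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def playlist_profile(tracks: List[dict]) -> Dict[str, Optional[float]]:
    """Summarize energy level, energy spread, and transition smoothness."""
    energies = [t["energy"] for t in tracks]
    # Similarity of each track to the one that follows it.
    transitions = [
        cosine_similarity(a["features"], b["features"])
        for a, b in zip(tracks, tracks[1:])
    ]
    return {
        "mean_energy": mean(energies),
        "energy_spread": pstdev(energies),
        "mean_transition_similarity": mean(transitions) if transitions else None,
    }


# Hypothetical draft playlist with made-up values.
draft = [
    {"energy": 0.3, "features": [1.0, 0.0]},
    {"energy": 0.4, "features": [1.0, 0.0]},
    {"energy": 0.5, "features": [0.0, 1.0]},
]
profile = playlist_profile(draft)
print(profile)
```

A small energy spread points to a narrow energy spectrum, and a high average transition similarity points to a more coherent track order.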

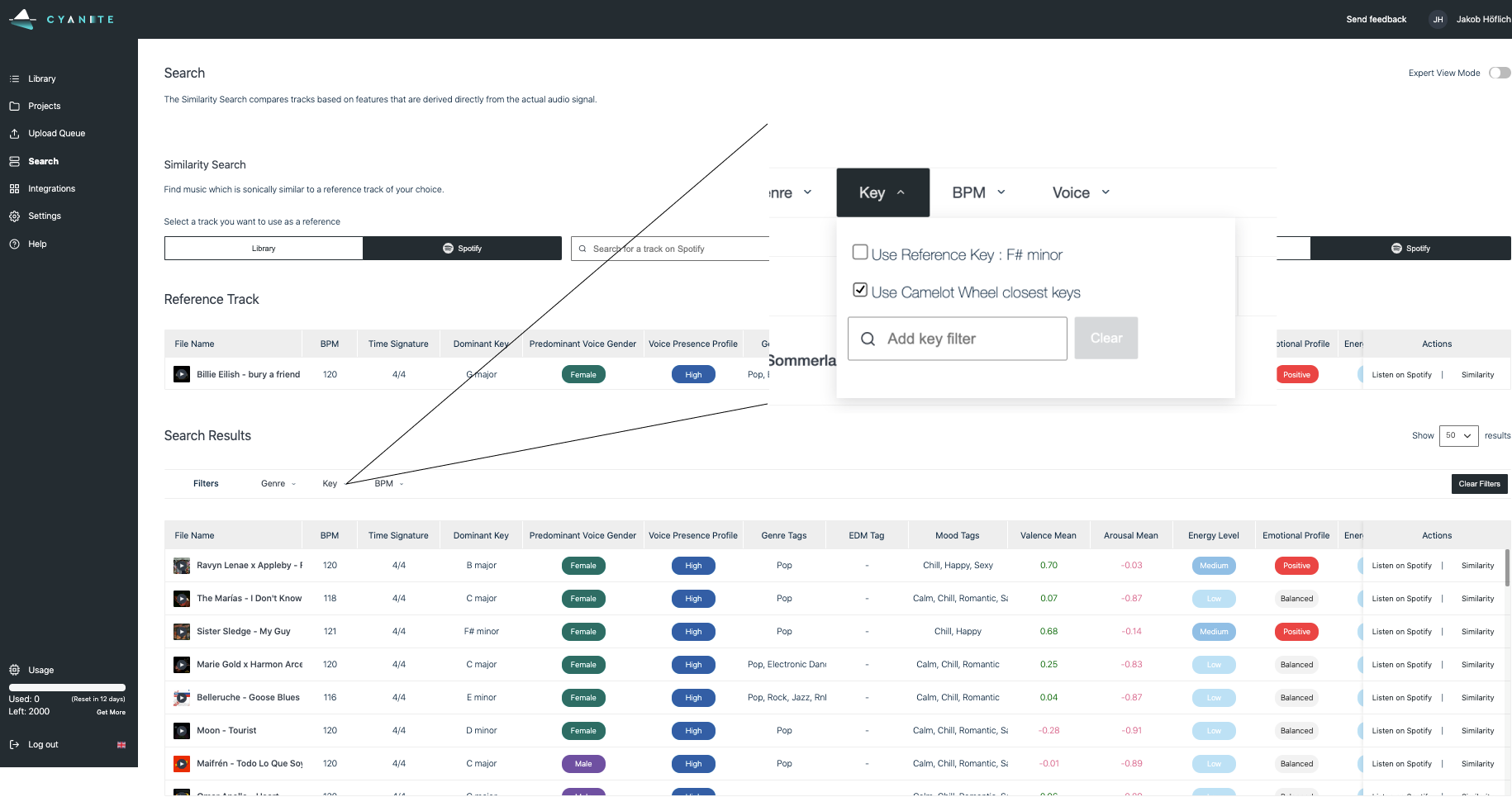

PRO TIP: To improve a playlist’s transition and coherence, you can combine Dynamic Keyword Search with our Similarity Search to further filter music by its position on the Camelot Wheel. The Camelot Wheel indicates which songs transition well harmonically, giving you an extremely powerful tool to perfect the song order. You can find a deeper explanation of that in this article.
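For readers who have not used the Camelot Wheel, the Python sketch below encodes the rule commonly used in harmonic mixing: a key is considered compatible with itself, with the same number on the other ring (A or B), and with the neighboring numbers on the same ring. The function names are made up for this example, and how Cyanite applies the wheel internally is not described here.

```python
from typing import Set


def camelot_neighbors(code: str) -> Set[str]:
    """Return the Camelot codes commonly treated as compatible with `code`.

    Codes look like "8A" or "12B": a number from 1 to 12 plus ring A or B.
    """
    number, letter = int(code[:-1]), code[-1].upper()
    if not 1 <= number <= 12 or letter not in ("A", "B"):
        raise ValueError(f"not a Camelot code: {code!r}")
    other_ring = "B" if letter == "A" else "A"
    up = number % 12 + 1          # 12 wraps around to 1
    down = (number - 2) % 12 + 1  # 1 wraps around to 12
    return {
        f"{number}{letter}",
        f"{number}{other_ring}",
        f"{up}{letter}",
        f"{down}{letter}",
    }


def is_harmonic_transition(current: str, following: str) -> bool:
    """True if moving from `current` to `following` stays on compatible keys."""
    return following.upper() in camelot_neighbors(current)


print(sorted(camelot_neighbors("8A")))
print(is_harmonic_transition("8A", "9A"))
print(is_harmonic_transition("8A", "3B"))
```

A check like this could be used to reorder keyword search results so that neighboring tracks sit on compatible keys.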

Here is how to access Dynamic Keyword Search in the Cyanite app. This feature is also available through our API.

- Go to Search in the menu and select the Keyword Search tab. Choose whether to display results from the Library or Spotify.

- Select keywords from the Augmented Keywords set. For example, these are some of the keywords in the list: joy, travel, summer, motivating, pleasant, happy, energetic, electro, bliss, gladness, auspicious, pleasure, forceful, determined, confident, positive, optimistic, agile, animated, journey, party, driving, kicking, impelling, upbeat. We recommend selecting up to 7 keywords out of 1,500.

- Adjust the weight of each keyword from -1 to 1 to define its impact on the search. For example, let’s set the search input as sparkling: 0.5, sad: -1, rock: 1, dreamy: 1.

- Scroll down for search results. The search results will return tracks from the library that are dreamy, slightly sparkling, and not at all sad. They will also all be rock songs.
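The example search input from the steps above can be written down and sanity-checked in a few lines of Python. This is only a sketch: the dictionary layout and the helper names are assumptions for illustration and do not reflect the request format of the Cyanite app or API.

```python
from typing import Dict, List, Tuple

MAX_RECOMMENDED_KEYWORDS = 7  # recommendation from the steps above


def validate_query(query: Dict[str, float]) -> List[str]:
    """Return a list of problems; an empty list means the query looks fine."""
    problems = []
    if not query:
        problems.append("select at least one keyword")
    if len(query) > MAX_RECOMMENDED_KEYWORDS:
        problems.append(
            f"{len(query)} keywords selected, up to "
            f"{MAX_RECOMMENDED_KEYWORDS} are recommended"
        )
    for keyword, weight in query.items():
        if not -1.0 <= weight <= 1.0:
            problems.append(
                f"weight for '{keyword}' must be between -1 and 1, got {weight}"
            )
    return problems


def split_query(query: Dict[str, float]) -> Tuple[List[str], List[str]]:
    """Split keywords into wanted (strongest first) and avoided ones."""
    wanted = sorted(
        (k for k, w in query.items() if w > 0), key=lambda k: -query[k]
    )
    avoided = sorted(k for k, w in query.items() if w < 0)
    return wanted, avoided


query = {"sparkling": 0.5, "sad": -1.0, "rock": 1.0, "dreamy": 1.0}
print(validate_query(query))
wanted, avoided = split_query(query)
print("wanted:", wanted)
print("avoided:", avoided)
```

Reading the query this way matches the description of the results: rock and dreamy carry the most weight, sparkling carries less, and sad is steered away from.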

Dynamic Keyword Search can be requested from our support team.

There are various ways to create playlists, from manual creation to automatic and assisted techniques. An assisted approach that combines automatic and manual creation has proved to be the most effective in playlist creation. It meets almost all of the editors’ needs, such as providing control over the process, maintaining a high level of engagement and trustworthiness, and offering a good selection of songs. However, the automatic approach is developing fast, and algorithms might substitute human work completely in the future.

Our Dynamic Keyword Search feature can help you create playlists as one of the assisted techniques. It can provide search results that take into account the search intent in terms of keywords and the impact of those keywords on search. This doesn’t mean that Dynamic Keyword Search replaces the manual work completely, but it can help artists, labels, and catalog owners do the creative work and engage fans and listeners with the support of the right tools to save time, money, and effort. This is what we’re striving to achieve here at Cyanite – to help you fully unlock your catalog’s potential.

Let us know if this article has been helpful and stay tuned for more on the Cyanite blog!

I want to try Dynamic Keyword Search – how can I get started?

If you want to get a first feel for Cyanite’s technology, you can also register for our free web app to analyze music and try similarity searches without any coding.